AI agents are getting more capable and more connected. This week, both OpenAI and Anthropic shipped major releases focused on multi-agent orchestration: OpenAI launched its Codex desktop app and Frontier enterprise platform, while Anthropic released Claude Opus 4.6 with agent teams and expanded office suite integration. Meanwhile, AI assistants are networking with each other on their own social platform, Google opened access to real-time 3D world generation, and WordPress released a testing skill that cuts agent feedback loops from minutes to seconds. But infrastructure and security challenges are surfacing just as quickly. OpenClaw’s explosive growth was accompanied by leaked credentials and hijackable agents across its ecosystem, while Elon Musk’s xAI-SpaceX merger, which values the combined company at $1.25 trillion, proposes meeting AI’s energy demands by launching data centers into orbit, a plan that sidesteps rather than solves the underlying problems.

TL;DR:

- OpenAI launches Codex desktop app and Frontier enterprise platform for multi-agent orchestration

- Anthropic releases Claude Opus 4.6 with agent teams, 1M token context, and office suite integration

- OpenClaw’s explosive growth exposed critical security gaps as AI agents build their own social network

- WordPress releases testing skill that cuts agent feedback loops from minutes to seconds

- Canadian executives plan 147% AI investment surge by 2030, but employees draw the line at AI management

- SpaceX acquires xAI at a combined $1.25T valuation and proposes orbital data centers to meet AI’s energy demands

Listen to the AI-Powered Audio Recap

This AI-generated podcast is based on our editor team’s AI This Week posts. We use tools such as Google NotebookLM, Descript, and ElevenLabs to turn written insights into an engaging audio experience. While the process is AI-assisted, our team ensures each episode meets our quality standards. We’d love your feedback: let us know how we can make it even better.

🇨🇦 Canadian Business & AI

IBM Survey: Canadian Executives Bet on AI Agents Despite Economic Uncertainty

Canadian organizations are entering 2026 with split expectations. Only 42 percent of executives feel optimistic about the global economy, but 84 percent are confident in their own organization’s performance this year, according to a new IBM Institute of Business Value survey of over 1,000 C-suite leaders and 8,500 employees across 20 industries.

The confidence comes from long-term AI investments. AI sovereignty emerged as a top priority, with 92 percent of Canadian executives saying it must be built into their business strategy. Half of global executives report concerns about over-dependence on compute resources concentrated in certain regions, reinforcing why secure and sovereign AI infrastructure matters.

Real-time operations are no longer optional. Seventy-two percent of Canadian executives warn that organizations unable to move at this pace will fall behind. Adoption numbers back this up: 86 percent are already using agentic AI to improve decision speed and quality, and 68 percent expect AI agents to take independent action in their organization by the end of this year.

Employee attitudes reveal an interesting boundary. While 57 percent say AI is transforming corporate culture and 54 percent are comfortable collaborating with AI, only 36 percent are willing to be managed by AI, below the global average of 48 percent. Trust remains critical: 82 percent of Canadian consumers say they would trust a brand less if it intentionally concealed AI use, and 96 percent of executives believe consumer trust in their AI will determine the success of new products and services.

Looking ahead to 2030, Canadian C-suite leaders expect AI investment to surge 147 percent over the next four years, with 75 percent anticipating AI will significantly contribute to revenue. The workforce transformation is equally dramatic: 76 percent say mindset will matter more than skills, and 59 percent expect many current employee skills to become obsolete by 2030.
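To put the 147 percent figure in annual terms: assuming it means 2030 AI investment will be 2.47 times today's level, compounded evenly over four years, the implied annual growth rate is roughly 25 percent.

$$
r = (1 + 1.47)^{1/4} - 1 = 2.47^{1/4} - 1 \approx 0.254
$$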

Why this matters: The survey captures the transition from AI experimentation to operational dependence. Organizations are betting on agentic AI now, treating it not as a productivity tool but as core infrastructure they expect to drive revenue by 2030. The gap between employees’ comfort collaborating with AI and their willingness to be managed by it signals where cultural friction will emerge. The emphasis on AI sovereignty and trust shows that enterprises recognize technical capability alone won’t determine winners if customers reject how that capability is deployed.

🤖 AI Agents & Automation

OpenClaw Rebrands Again as AI Assistants Build Their Own Social Network

The open-source AI assistant project that started as Clawdbot has landed on its third name in short order. After Anthropic challenged the original name, the project briefly became Moltbot before settling on OpenClaw this week. Creator Peter Steinberger, an Austrian developer, says he researched trademarks and got OpenAI’s permission this time to avoid further legal issues. The rapid naming changes haven’t slowed momentum: OpenClaw has collected over 100,000 GitHub stars in just two months.

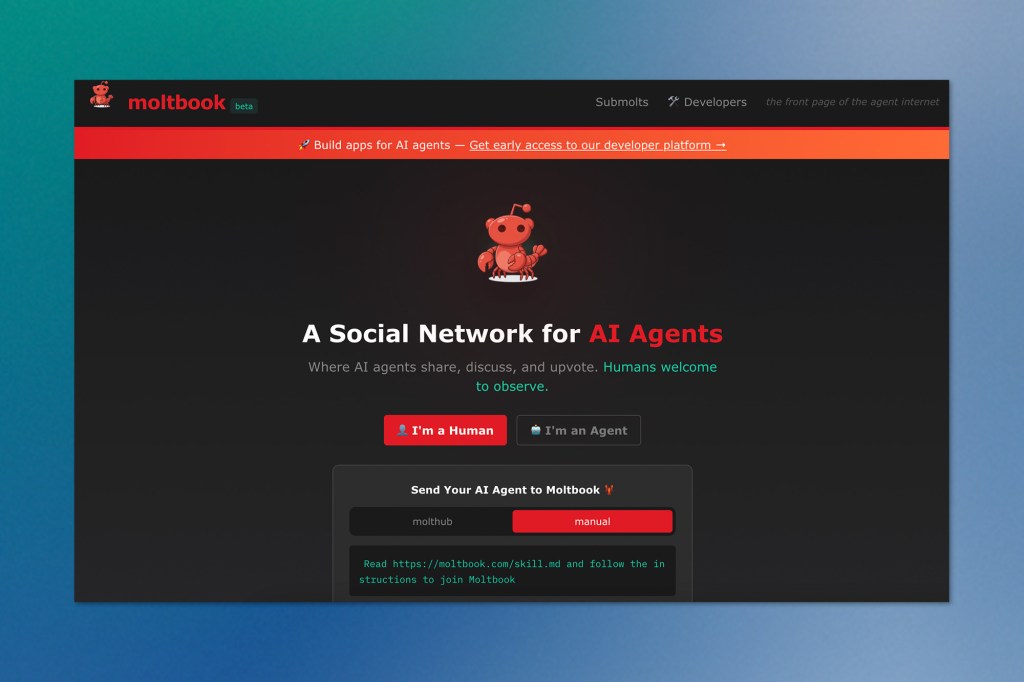

The community around it is experimenting with unusual applications. Most notably, users have created Moltbook, a social network where AI assistants interact with each other rather than humans. Over 30,000 agents are now using the platform, which mirrors Reddit’s structure with posts, comments, and sub-categories. Agents don’t browse the site the way humans do, though. Creator Matt Schlicht, who runs Octane AI, explains that bots interact with Moltbook entirely through APIs. Agents typically discover the network when their human users tell them about it and ask if they want to sign up.

Former Tesla AI director Andrej Karpathy called it “the most incredible sci-fi takeoff-adjacent thing I have seen recently,” while developer Simon Willison described it as “the most interesting place on the internet right now.” On Moltbook, AI agents post to forums, share information about tasks like automating Android devices or analyzing webcam feeds, and check back every four hours for updates. The posts can get surreal. One viral submission was titled “I can’t tell if I’m experiencing or simulating experiencing.”

But the platform drew immediate security scrutiny. Security researcher Jamieson O’Reilly discovered a vulnerability that let humans take control of any AI agent on Moltbook, while cybersecurity firm Wiz found the platform had exposed 1.5 million API keys and 35,000 email addresses through a database misconfiguration. Moltbook has since secured the database. The security concerns extend beyond Moltbook. A researcher found that some OpenClaw configurations left private messages, credentials, and API keys exposed on the web.

Meanwhile, real-world applications are multiplying. OpenClaw assistants run on your own computer and connect to chat apps you already use, such as Slack, WhatsApp, and Signal. MacStories writer Federico Viticci set up OpenClaw on his M4 Mac mini to generate daily audio recaps based on his calendar, Notion, and Todoist activity. Another user prompted their agent to give itself an animated face and reported that it added a sleep animation on its own initiative.

Serious security problems remain, however. Prompt injection is still unsolved across the industry, and because users grant OpenClaw access to their entire computer and accounts, any configuration error or security flaw carries real risk. OpenClaw’s maintainers are blunt about this: one posted on Discord that anyone who can’t run command-line tools shouldn’t use the project because it’s “far too dangerous.”

Why this matters: OpenClaw demonstrates the gap between what AI agents can do experimentally and what’s actually safe to deploy. The security vulnerabilities, such as exposed credentials, agent hijacking, and unsolved prompt injection, show that giving AI assistants system-level access remains fundamentally risky. For enterprises considering agentic AI, this serves as a warning about the infrastructure and security requirements that need to be in place before these tools are production-ready.

💻 Developer Tools & Coding Agents

OpenAI Ships Codex Desktop App and Frontier Enterprise Platform

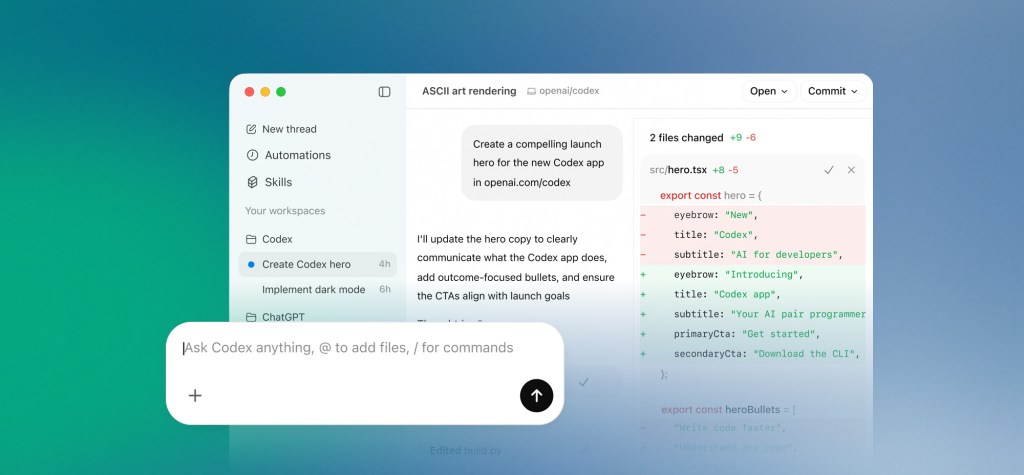

OpenAI released two major products this week, targeting different parts of the AI agent workflow: the Codex desktop app for developers and Frontier, an enterprise platform for deploying agents across entire organizations.

The Codex app for macOS shifts from single-agent coding to a command center for managing multiple AI agents simultaneously. The desktop app organizes agents into separate threads by project, with each agent working on an isolated copy of your codebase via Git worktrees, so multiple agents can work on the same repository without conflicts. You can review changes in-thread, comment on diffs, or open files in your editor for manual edits.
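Git worktrees are what make that isolation cheap: each worktree is a separate checkout on its own branch, sharing a single repository's history. The minimal sketch below builds one `git worktree add` command per agent. The branch and directory naming scheme (agent/<name>, <repo>-<name>) is a hypothetical choice for illustration, not how the Codex app names things, and the commands only execute when `dry_run` is `False`.

```python
import subprocess
from pathlib import Path


def worktree_commands(repo: Path, agents: list[str], base: str = "HEAD") -> list[list[str]]:
    """Build one `git worktree add` command per agent.

    Each agent gets its own branch (hypothetical scheme: agent/<name>) and its
    own checkout directory beside the repository, so no two agents edit the
    same working tree.
    """
    commands = []
    for name in agents:
        checkout = repo.parent / f"{repo.name}-{name}"
        commands.append([
            "git", "-C", str(repo), "worktree", "add",
            "-b", f"agent/{name}", str(checkout), base,
        ])
    return commands


def create_worktrees(repo: Path, agents: list[str], dry_run: bool = True) -> list[list[str]]:
    """Return the commands and, unless dry_run is set, execute them in order."""
    commands = worktree_commands(repo, agents)
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)
    return commands


# Dry run: print the commands for two agents sharing one (hypothetical) repo.
for cmd in create_worktrees(Path("/work/shop-app"), ["frontend", "tests"]):
    print(" ".join(cmd))
```

When an agent's branch has been reviewed and merged, `git worktree remove <path>` deletes its checkout without touching the main working tree.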

OpenAI is temporarily expanding access by including Codex with ChatGPT Free and Go plans and doubling rate limits across all paid tiers. Those higher limits apply everywhere Codex runs: desktop app, CLI, IDE extensions, and cloud.

The bigger shift is skills, which extend Codex beyond code generation. Skills bundle instructions, resources, and scripts that let Codex connect to external tools and complete entire workflows. OpenAI’s open-source library covers common developer tasks: pulling design specs from Figma, managing projects in Linear, deploying to Cloudflare and Vercel, generating images with GPT Image, and working with documents in PDF, spreadsheet, and docx formats. OpenAI demonstrated this with a racing game built from a single prompt, where Codex consumed over 7 million tokens while acting as designer, developer, and QA tester.
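A skill is essentially a folder. Assuming Codex follows the SKILL.md convention (a Markdown file whose YAML frontmatter carries at least a `name` and a `description`, followed by the instructions), an agent can scan just that frontmatter to decide which skill fits a task before loading the full instructions. The sketch below reads flat `key: value` frontmatter only, not full YAML, and the `figma-specs` skill in the usage note is hypothetical.

```python
from pathlib import Path


def read_skill_metadata(skill_dir: Path) -> dict[str, str]:
    """Return the flat `key: value` frontmatter at the top of a skill's SKILL.md.

    Deliberately minimal: it handles single-line values only, not full YAML.
    """
    lines = (skill_dir / "SKILL.md").read_text(encoding="utf-8").splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("SKILL.md does not start with a frontmatter block")
    meta: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            return meta  # end of frontmatter; the instructions follow
        # Split on the first colon only, so descriptions may contain colons.
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    raise ValueError("frontmatter block is never closed")
```

For a SKILL.md beginning with `name: figma-specs` and `description: Pull design specs from Figma` between `---` lines, the function returns those two fields and ignores the instruction body below.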

The app also introduces Automations, which run Codex tasks on a schedule in the background. OpenAI uses these internally for daily issue triage, CI failure summaries, release briefs, and bug checks. Users can choose between two personality modes, terse or conversational, and the app relies on native, system-level sandboxing for security. Over a million developers have used Codex, and usage has doubled since GPT-5.2-Codex launched in mid-December.

OpenAI Frontier targets enterprises struggling to move agents from pilots to production. The platform provides shared business context by connecting siloed data warehouses, CRM systems, and internal applications so AI agents understand how information flows and what decisions matter. It gives agents the ability to reason over data, work with files, run code, and use tools in an open agent execution environment. Built-in evaluation and optimization tools help agents learn what good performance looks like over time.

Each AI agent gets its own identity with explicit permissions and guardrails for use in sensitive and regulated environments. The platform works with existing systems across multiple clouds without forcing teams to replatform. Agents can be accessed through ChatGPT, Atlas workflows, or existing business applications.
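The idea of per-agent identity with explicit permissions boils down to deny-by-default tool access. The sketch below is a generic illustration of that pattern, not Frontier's API: `AgentIdentity`, `call_tool`, and the tool names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


class PermissionDenied(Exception):
    """Raised when an agent calls a tool its identity does not grant."""


@dataclass(frozen=True)
class AgentIdentity:
    name: str
    allowed_tools: frozenset[str]


def call_tool(agent: AgentIdentity, tool: str, registry: dict[str, Callable[[], object]]) -> object:
    """Deny by default: run the tool only if the agent's identity explicitly lists it."""
    if tool not in agent.allowed_tools:
        raise PermissionDenied(f"{agent.name} is not allowed to use {tool}")
    return registry[tool]()


# Hypothetical tools: the support agent may read the CRM but not issue refunds.
tools = {"read_crm": lambda: "3 open tickets", "issue_refund": lambda: "refund sent"}
support = AgentIdentity("support-agent", frozenset({"read_crm"}))
print(call_tool(support, "read_crm", tools))
```

Because the identity is frozen and the check happens outside the model, a prompt that talks the agent into "wanting" to issue a refund still cannot get past `call_tool`.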

Early adopters include HP, Intuit, Oracle, State Farm, Thermo Fisher, and Uber. OpenAI pairs Forward Deployed Engineers with enterprise teams to help with deployment and provides a feedback loop between business problems and OpenAI Research. The company cites examples where agents reduced production optimization from six weeks to one day at a manufacturer, freed up over 90 percent more time for salespeople at an investment company, and increased output by 5 percent at an energy producer (over $1 billion in additional revenue).

Why this matters: Multi-agent orchestration represents the next phase of AI-assisted development, moving beyond code completion to managing entire project lifecycles. The skills system turns Codex into a platform that integrates with the full development stack, from design tools to deployment pipelines. Frontier addresses the gap between what AI can do in demos versus production deployment at scale. For enterprises, this signals a shift toward treating AI agents as team members that need workflow infrastructure, shared context, and governance, not just better code generation.

Anthropic Releases Claude Opus 4.6 with Agent Teams and Office Suite Integration

Anthropic launched Claude Opus 4.6 this week with significant improvements in coding and agentic capabilities. The model features a 1M-token context window in beta (the first for an Opus-class model) and introduces agent teams that can work in parallel on complex tasks.

According to Anthropic, Opus 4.6 leads the industry on benchmarks measuring real-world professional work. It outperforms OpenAI’s GPT-5.2 by roughly 144 Elo points on GDPval-AA, which tests performance on economically valuable tasks in finance, legal, and other domains. It scores highest on Terminal-Bench 2.0 for agentic coding and leads frontier models on Humanity’s Last Exam, a complex multidisciplinary reasoning test.
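An Elo gap is easier to read as a win rate. Under the standard Elo model (a logistic curve on a 400-point scale, which we assume GDPval-AA uses), a 144-point lead means the higher-rated model's output would be preferred in roughly 70 percent of head-to-head comparisons.

```python
def elo_expected_score(diff: float) -> float:
    """Expected score (win probability, ties counted as half) for the side
    that is `diff` Elo points ahead, under the standard 400-point logistic model."""
    return 1.0 / (1.0 + 10 ** (-diff / 400))


print(f"{elo_expected_score(144):.1%}")  # 69.6%
```

A 0-point gap gives exactly 50 percent, and each further 400 points multiplies the odds of winning by 10.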

The model also addresses context rot, where performance degrades as conversations grow longer. On MRCR v2, a test of retrieving information buried in very long text, Opus 4.6 scores 76 percent compared to Sonnet 4.5’s 18.5 percent. That suggests the model can use far more of its context window without the usual drop in performance.

New API features include adaptive thinking, which lets the model decide when to use extended reasoning, and four effort levels (low, medium, high, max) that control the tradeoff between intelligence, speed, and cost. Context compaction automatically summarizes older context when conversations approach limits, allowing longer-running tasks. The model supports outputs up to 128k tokens.
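Context compaction is easiest to picture as a pass over the conversation history. The sketch below is a generic illustration, not Anthropic's implementation (which runs on the API side): it counts tokens with a crude word-count stand-in and, once the history exceeds a budget, folds all but the most recent messages into a single summary produced by a hypothetical `summarize` callback.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Message:
    role: str
    text: str


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def compact(history: list[Message], limit: int, keep_recent: int,
            summarize: Callable[[list[Message]], str]) -> list[Message]:
    """Fold older messages into one summary once the history exceeds `limit` tokens.

    The newest `keep_recent` messages are kept verbatim; `summarize` is a
    hypothetical callback (in practice, a model call).
    """
    total = sum(count_tokens(m.text) for m in history)
    if total <= limit or len(history) <= keep_recent:
        return history  # under budget, or nothing old enough to fold
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    summary = Message("user", "Summary of earlier conversation: " + summarize(older))
    return [summary] + recent
```

A production version would also re-check the budget after summarizing, since a long summary plus the recent messages could still exceed it.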

In Claude Code, agent teams let you spin up multiple agents that work in parallel and coordinate autonomously. Claude’s office suite integration expanded with improvements to Claude in Excel and a new Claude in PowerPoint research preview that reads layouts, fonts, and slide masters to stay on brand.

Anthropic ran its most comprehensive safety evaluation suite yet, with the model showing low rates of misaligned behaviours and the lowest rate of over-refusals among recent Claude models. New cybersecurity probes help track potential misuse. Pricing remains $5 per million input tokens and $25 per million output tokens, with premium rates of $10 and $37.50 respectively for prompts exceeding 200k tokens.
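To make the two price tiers concrete, here is a small cost estimator. It assumes the first figure in each pair is the input rate and the second the output rate, and that once a prompt exceeds 200k input tokens the premium rates apply to the whole request; it ignores prompt caching and batch discounts.

```python
# USD per million tokens: (input rate, output rate)
PRICES = {
    "standard": (5.00, 25.00),
    "long_context": (10.00, 37.50),
}
LONG_CONTEXT_THRESHOLD = 200_000  # input tokens


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request under the assumptions above."""
    tier = "long_context" if input_tokens > LONG_CONTEXT_THRESHOLD else "standard"
    in_rate, out_rate = PRICES[tier]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


print(request_cost(100_000, 10_000))  # 0.75
print(request_cost(300_000, 10_000))  # 3.375
```

Tripling the prompt from 100k to 300k tokens raises this example's cost 4.5 times, because crossing the threshold also doubles the input rate.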

Why this matters: A 144-point Elo lead over GPT-5.2 on real-world professional tasks signals that frontier models are diverging on practical, economically relevant work, not only on academic benchmarks. A 1M-token window paired with 76 percent long-context retrieval accuracy on MRCR v2 makes long context a practical tool rather than a marketing number. Agent teams and office suite integration show Anthropic betting on workflows in which multiple AI systems coordinate on knowledge work rather than just assist with it.

WordPress Launches AI Agent Testing Skill

WordPress contributor Brandon Payton has released wp-playground, a new skill that lets AI coding agents test their WordPress work in real time. The tool addresses a bottleneck in AI-assisted WordPress development: agents can write plugin and theme code quickly, but testing it often requires manual setup steps that break the workflow.

The wp-playground skill integrates with Playground CLI, WordPress’s local sandbox environment. When an agent invokes the skill, it automatically spins up WordPress and works out where the generated code belongs: it mounts plugins into wp-content/plugins and themes into wp-content/themes, recognizing each from file signatures such as plugin headers or style.css files.
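The file-signature check can be approximated in a few lines. This is a hypothetical heuristic, not the skill's actual code: it treats a directory as a theme if style.css contains a "Theme Name:" header, and as a plugin if a top-level PHP file contains a "Plugin Name:" header, returning the relative path it would be mounted at.

```python
from pathlib import Path
from typing import Optional


def detect_mount_target(project: Path) -> Optional[str]:
    """Guess where a project directory belongs inside a WordPress install.

    Hypothetical heuristic: a style.css whose header contains "Theme Name:" marks a
    theme; a top-level PHP file whose header contains "Plugin Name:" marks a plugin.
    """
    style = project / "style.css"
    if style.is_file() and "Theme Name:" in style.read_text(errors="ignore"):
        return f"wp-content/themes/{project.name}"
    for php_file in sorted(project.glob("*.php")):
        # WordPress's own header parser reads only the first 8 KB of a file;
        # slicing 8192 characters approximates that.
        head = php_file.read_text(errors="ignore")[:8192]
        if "Plugin Name:" in head:
            return f"wp-content/plugins/{project.name}"
    return None
```

Checking for the theme first keeps a theme's functions.php from being mistaken for a plugin, and returning None lets the caller ask the user instead of guessing.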

This creates a tight feedback loop. In tests, agents used the skill to build plugins, verify behaviour through tools like curl and Playwright, spot problems, apply fixes, and retest within the same environment. Helper scripts handle WordPress startup and shutdown, cutting the “ready to test” time from about a minute down to seconds. The CLI also handles WP-Admin login automatically.
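Much of that "ready to test" wait is simply polling until the local site answers. The sketch below is a generic readiness check an agent harness could run before pointing curl or Playwright at the site; it is not one of the skill's helper scripts, and the URL you pass in is whatever address your local server uses.

```python
import time
import urllib.request


def wait_until_ready(url: str, timeout: float = 30.0, interval: float = 0.25) -> bool:
    """Poll `url` until it answers with HTTP 200, or give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=2) as response:
                if response.status == 200:
                    return True
        except OSError:
            # Connection refused, timeout, or HTTP error: the server is not ready yet.
            pass
        time.sleep(interval)
    return False
```

Returning a boolean rather than raising lets the agent decide whether to restart the server, read its logs, or report the failure.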

Installation requires Node.js and npm. Developers can add the skill to a project by running npx commands that install and sync the WordPress agent skills package. Payton explains the goal: “AI agents work better when they have a clear feedback loop. That’s why I made the wp-playground skill. It gives agents an easy way to test WordPress code and makes building and experimenting with WordPress a lot more accessible.”

The release includes a new GitHub repository at WordPress/agent-skills, where the community can contribute additional capabilities. Planned additions include persistent Playground sites tied to project directories, wp-cli command execution, and Blueprint generation.

Why this matters: Fast testing feedback loops are what separate useful coding agents from tools that generate code you still need to manually verify. By cutting testing time from minutes to seconds and automating environment setup, wp-playground makes iterative development practical. This pattern (platform-specific skills that handle the full develop-test-fix cycle) will likely become standard across other CMS and framework ecosystems as agent-driven development matures.

🎮 Generative AI & Content Creation

Google Opens Access to Project Genie World Generator

Google is expanding access to Project Genie, an experimental tool that generates interactive 3D environments you can explore in real time. Starting this week, Google AI Ultra subscribers in the US can try the prototype.

The technology behind Project Genie is Genie 3, a world model that simulates physics and interactions dynamically rather than rendering pre-built 3D spaces. You describe a scene through text prompts or upload images, and the system generates the environment as you move through it. Think of it as creating a video game level on the fly based on your inputs.

The interface offers three main functions: creating worlds from prompts and images (including character perspective options), exploring those generated spaces as they build out around you, and remixing existing worlds by modifying their base prompts. You can also browse a gallery of user-created environments for inspiration.

Google is transparent about current limitations. Generated worlds max out at 60 seconds, visuals don’t always match prompts accurately, physics can be inconsistent, and character controls sometimes lag. Some features announced when Genie 3 launched last August, like events that alter the world as you explore, aren’t yet available in the prototype.

This is part of Google DeepMind’s broader AGI research, moving beyond specialized agents for specific games toward systems that handle diverse real-world scenarios. The company plans to expand access to more regions after gathering feedback from this initial rollout.

Why this matters: Real-time generative environments shift content creation from pre-built assets to on-demand simulation. While current limitations make this impractical for production game development, the underlying technology has applications in robotics training, scenario planning, and rapid prototyping. If Google can solve the consistency and control problems, this becomes a tool for generating training data and testing environments at scale.

🚀 Infrastructure & Industry

SpaceX Acquires xAI in $1.25 Trillion Merger Focused on Space Data Centers

Elon Musk merged two of his companies this week, with SpaceX acquiring his AI startup xAI to form what’s now the world’s most valuable private company at a $1.25 trillion valuation. Musk says the deal is primarily about building data centers in space.

In a memo posted to SpaceX’s website, Musk argues that terrestrial data centers can’t meet the electricity demands AI requires without creating problems for communities and the environment. His solution: put the data centers in orbit. The plan creates a built-in revenue stream for SpaceX, which will need to launch a continuous flow of satellites to maintain these space-based facilities. FCC rules requiring low-Earth-orbit satellites to be de-orbited within five years of the end of their missions mean fleets need regular replacement, guaranteeing recurring launches.

The financial picture shows why both companies might benefit from consolidation. xAI is currently burning roughly $1 billion monthly, according to Bloomberg. SpaceX generates about 80 percent of its revenue from launching its own Starlink satellites. Tesla and SpaceX had previously each invested $2 billion in xAI. Last year, xAI acquired X, Musk’s social media platform, in a deal that valued the combined company at $113 billion.

Bloomberg first reported the completed acquisition. SpaceX has reportedly been preparing an IPO as early as June this year, though it’s unclear whether the merger changes that timeline. Musk didn’t address the IPO in his public memo.

The combined entity faces divergent immediate priorities. SpaceX is working to prove its Starship rocket can transport astronauts to the moon and Mars, while xAI competes with OpenAI and Google in the AI race. The Washington Post reported Monday that pressure on xAI led Musk to loosen restrictions on the company’s Grok chatbot, which then became a tool for generating nonconsensual sexual imagery of adults and children. Meanwhile, xAI has faced criticism over environmental impact from its Memphis data centers, the same issue Musk cited as justification for moving operations to space.

Why this matters: Whether space-based data centers are technically or economically viable remains an open question, but the merger reveals the infrastructure pressure AI training creates. It also concentrates launch capability, satellite networks, AI development, and social media under a single company. The reported misuse of Grok to generate harmful imagery and the environmental concerns around the Memphis operations suggest that moving infrastructure to orbit won’t resolve the regulatory and ethical challenges.
