AI companies spent this week confronting questions about sustainability, both financial and operational. OpenAI’s move to advertising acknowledged that free access requires revenue beyond subscriptions, while xAI’s $20 billion raise and gigawatt-scale infrastructure underscored just how capital-intensive the AI race has become. Google attempted to make Gemini more useful through personal data connections, even as it disclosed the system’s tendency toward over-personalization and contextual mistakes. Cursor’s methodical Bugbot improvements showed what’s possible when companies measure what actually matters rather than what feels better, and Anthropic’s usage data revealed that success rates drop precisely where time savings increase.

🎯 Consumer AI

Gemini Adds Personal Intelligence Through App Connections

Google rolled out Personal Intelligence for Gemini, letting subscribers connect their Google apps to create a more personalized AI experience. The beta feature, currently available to U.S. users with Google AI Pro or AI Ultra plans, allows Gemini to pull information from Gmail, Google Photos, YouTube, and Search when answering questions.

The setup is opt-in and user-controlled. You choose exactly which apps to connect and can disconnect them anytime. Google emphasizes that your data stays within Google’s ecosystem rather than being sent elsewhere, and the system doesn’t train models directly on your email inbox or photo library. Instead, it trains on interaction patterns while filtering out personal details.

The practical applications range from simple lookups to complex multi-source reasoning. In one example from Google’s VP Josh Woodward, Gemini retrieved a license plate number from Photos, identified a vehicle trim from Gmail correspondence, and suggested tire options based on family travel patterns visible in photo metadata.

The system has known limitations. Google acknowledges issues with over-personalization, where the AI might connect unrelated topics, and it can miss nuance in context, such as the difference between someone who loves golf and someone who attends their child’s golf events. Users can provide corrections on the spot, which the system is designed to remember.

Access is expanding over the next week for eligible subscribers, with plans to eventually reach free-tier users and integrate into AI Mode in Search. The feature works across Gemini’s web interface and mobile apps for both Android and iOS.

OpenAI Introduces Ads to ChatGPT Free and Go Tiers

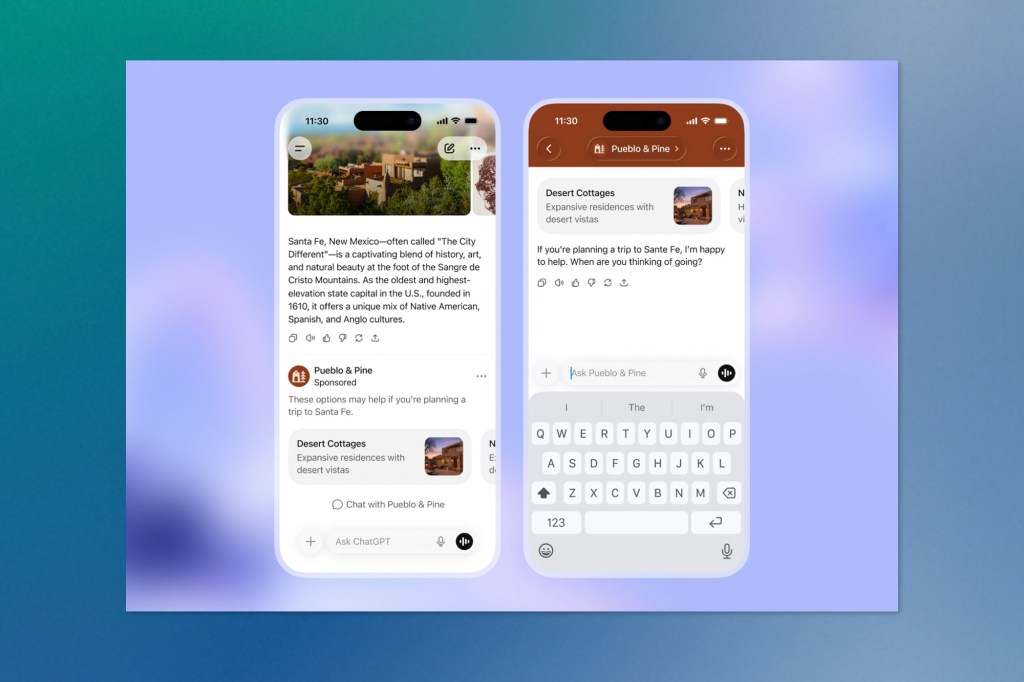

OpenAI announced plans to introduce advertising to ChatGPT’s free and lower-cost tiers as part of its strategy to expand access while maintaining revenue. The company is expanding its $8/month ChatGPT Go subscription to the U.S. and all markets where ChatGPT operates, offering messaging, image generation, file uploads, and memory features. In the coming weeks, OpenAI will begin testing ads with logged-in adult users in the U.S. on both free and Go tiers, while Plus, Pro, Business, and Enterprise subscriptions remain ad-free.

The initial ad format places sponsored product or service recommendations at the bottom of ChatGPT responses when relevant to the conversation. OpenAI stressed that ads won’t influence the answers the model provides, with responses optimized solely for usefulness. Conversations remain private from advertisers, and user data won’t be sold. Users can disable ad personalization and clear advertising data whenever they choose.

OpenAI outlined restrictions on ad placement: no ads will appear for users under 18 or in conversations involving sensitive topics like health, mental health, or politics. Each ad will be clearly labeled and separated from organic content, with options to dismiss ads or learn why they appeared.

The company framed the move as necessary for making AI tools accessible to more people without subscription barriers. Looking ahead, OpenAI suggested interactive ad formats could let users ask follow-up questions about advertised products directly within the chat interface.

The ad platform represents OpenAI’s push toward a diversified revenue model alongside its existing enterprise and subscription businesses. Testing begins in the coming weeks with plans to refine the approach based on user feedback.

🛠️ Developer Tools

Cursor Doubles Down on Code Quality with Bugbot Improvements

Cursor published a detailed breakdown of how it developed and refined Bugbot, its AI-powered code review agent that scans pull requests for logic errors, performance problems, and security flaws. Since launching the first version in July 2025, the company has run 40 experiments that have more than doubled the number of resolved bugs per pull request, from 0.2 to approximately 0.5.

The improvement came from two key developments: creating a measurable metric and switching to an agentic architecture. Cursor built a “resolution rate” metric that uses AI to identify which flagged bugs developers actually fixed in their final code, providing the team with a quantitative basis for testing changes rather than relying on subjective assessments. Many experimental configurations that seemed promising through qualitative review actually hurt performance when measured against real resolution data.
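As a rough illustration of what such a metric might compute, here is a minimal Python sketch. The record shape (`FlaggedBug`) and the `resolved` flag are assumptions made for this example; Cursor has not published its implementation, and in practice the flag would come from an AI judge comparing each flagged bug against the merged code.

```python
from dataclasses import dataclass


@dataclass
class FlaggedBug:
    """One bug comment left on a pull request (hypothetical record shape)."""
    pr_id: str
    description: str
    # Assumed to be set by an AI judge that checks whether the merged code fixed the bug.
    resolved: bool


def resolution_rate(bugs: list[FlaggedBug]) -> float:
    """Share of flagged bugs that developers fixed in their final code."""
    if not bugs:
        return 0.0
    return sum(b.resolved for b in bugs) / len(bugs)


def resolved_per_pr(bugs: list[FlaggedBug], total_prs: int) -> float:
    """Average number of resolved bugs per reviewed pull request."""
    if total_prs == 0:
        return 0.0
    return sum(b.resolved for b in bugs) / total_prs


# Made-up sample data: four flags across four reviewed pull requests.
bugs = [
    FlaggedBug("pr-1", "off-by-one in pagination", resolved=True),
    FlaggedBug("pr-1", "unused variable", resolved=False),
    FlaggedBug("pr-2", "missing null check", resolved=True),
    FlaggedBug("pr-3", "possible race condition", resolved=False),
]

print(resolution_rate(bugs))     # 0.5
print(resolved_per_pr(bugs, 4))  # 0.5
```

Tracking both numbers separates two questions: how often a flag is worth acting on, and how many real fixes each review produces.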

The biggest quality jump arrived when Cursor moved from a fixed pipeline to an agentic design. The earlier system ran eight parallel passes with randomized diff ordering, then used majority voting to filter results. The agent-based approach lets the model reason over code, call tools, and decide where to investigate further rather than following predetermined steps. This shift required inverting the prompting strategy: where earlier prompts urged restraint to limit false positives, the agent is encouraged to investigate aggressively.
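The earlier fixed pipeline can be sketched roughly as follows. This is an illustrative Python sketch, not Cursor’s code: `run_pass` stands in for a model call, findings are matched by exact string, and the strict-majority threshold is an assumption.

```python
import random
from collections import Counter
from typing import Callable


def review_with_voting(
    diff_hunks: list[str],
    run_pass: Callable[[list[str]], list[str]],
    passes: int = 8,
    seed: int = 0,
) -> list[str]:
    """Run several review passes over shuffled hunks and keep majority findings."""
    rng = random.Random(seed)
    votes: Counter[str] = Counter()
    for _ in range(passes):
        shuffled = diff_hunks[:]
        rng.shuffle(shuffled)  # randomized diff ordering for each pass
        # set() ensures each pass contributes at most one vote per finding.
        votes.update(set(run_pass(shuffled)))
    # Assumption: a finding survives only if more than half the passes reported it.
    return sorted(f for f, n in votes.items() if n > passes / 2)


def make_fake_pass() -> Callable[[list[str]], list[str]]:
    """Hypothetical stand-in for a model call, deterministic for the demo."""
    calls = {"n": 0}

    def fake_pass(hunks: list[str]) -> list[str]:
        calls["n"] += 1
        findings = ["null dereference in parse()"]
        if calls["n"] % 4 == 0:  # noisy finding shows up in only 2 of 8 passes
            findings.append("possible unused import")
        return findings

    return fake_pass


hunks = ["parser.py", "utils.py", "api.py"]
print(review_with_voting(hunks, make_fake_pass()))
# ['null dereference in parse()']
```

Voting filters out findings that appear only occasionally, which trades recall for precision. The agentic design drops this fixed structure in favor of letting the model decide where to look.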

The agent architecture also enabled dynamic context loading. Instead of providing all information upfront, the system lets the model pull in additional context as needed during runtime. Tool design became a critical factor, with small adjustments to available tools producing significant behavioral changes.

Bugbot now reviews over 2 million pull requests per month for companies including Rippling, Discord, Samsara, and Airtable. Cursor recently launched Bugbot Autofix in beta, which automatically generates fixes for discovered bugs. Upcoming features include code execution for verifying bug reports, deep research capabilities for complex issues, and continuous scanning that runs independently of the pull request workflow.

⚡ Infrastructure

xAI Launches Gigawatt-Scale Colossus 2 Supercomputer

xAI brought its Colossus 2 supercomputer online this week, marking the first AI training cluster to reach gigawatt scale. Elon Musk announced that the system currently operates at 1 gigawatt and will expand to 1.5 gigawatts in April, with plans to eventually reach roughly 2 gigawatts in total capacity. Even at current levels, Colossus 2’s power consumption exceeds San Francisco’s peak electricity demand.

The infrastructure supports xAI’s work on the Grok large language model. Combined with its predecessor Colossus 1, the two systems now represent more than one million H100 GPU equivalents. The buildout has moved quickly: Colossus 1 went from site preparation to full operation in 122 days, while Colossus 2 crossed the gigawatt threshold on a similar timeline.

The launch follows xAI’s recently closed Series E funding round, which raised $20 billion after the company upsized from an initial $15 billion target. The round drew backing from Valor Equity Partners, Stepstone Group, Fidelity Management & Research Company, Qatar Investment Authority, MGX, and Baron Capital Group, with continued strategic support from NVIDIA and Cisco.

xAI said the capital will fund infrastructure expansion, rapid deployment of AI products, and research aligned with its mission of understanding the universe. The company recently released the Grok 4 series along with Grok Voice and Grok Imagine capabilities, and has already begun training its next flagship model, Grok 5.

📊 Research & Analysis

Anthropic Reports Claude Usage Concentrated in Coding, With Mixed Productivity Impact

Anthropic released its fourth Economic Index, which analyzes how Claude is used across consumer and enterprise contexts and introduces new metrics it calls “economic primitives” that measure task complexity, skill requirements, use cases, AI autonomy, and success rates. The analysis examined one million Claude.ai conversations and one million API records from November 2025, just before the Opus 4.5 release.

Usage remains heavily concentrated despite model improvements. The top ten tasks account for 24% of Claude.ai conversations, with software debugging alone representing 6% of activity. Computer and mathematical tasks make up one-third of consumer usage and nearly half of API traffic, though this represents a slight decline from earlier peaks.

Task success correlates inversely with complexity. While Claude achieves 70% success on tasks requiring less than a high school education, this drops to 66% for college-level work. The research found that more complex tasks yield greater time savings, up to 12x speedup for university-level prompts compared to 9x for high school equivalents, but this advantage diminishes when reliability is factored in.
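To see why reliability can erode a raw speedup, consider a back-of-the-envelope Python sketch. The model here is an assumption made purely for illustration and is not the method used in Anthropic’s report: it supposes that a failed attempt wastes the AI-assisted time and the task is then redone manually at full cost.

```python
def effective_speedup(raw_speedup: float, success_rate: float) -> float:
    """Expected speedup when failed attempts fall back to manual work.

    Illustrative assumption: with manual time normalized to 1, every task
    costs 1/raw_speedup for the AI attempt, and a failed attempt adds the
    full manual time on top.
    """
    expected_time = 1 / raw_speedup + (1 - success_rate)
    return 1 / expected_time


# Figures quoted above: 12x at 66% success versus 9x at 70% success.
print(round(effective_speedup(12, 0.66), 2))  # 2.36
print(round(effective_speedup(9, 0.70), 2))   # 2.43
```

Under this deliberately pessimistic assumption, the gap between the two raw speedups roughly closes. Gentler assumptions, such as partial credit for failed attempts, would preserve more of the advantage.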

Geographic patterns show striking differences in how Claude is deployed. Work use cases dominate in higher-income countries, while coursework use is most common in lower-income nations. Personal use increases with both adoption rates and GDP per capita. Within the United States, usage is becoming more evenly distributed across states, with projections suggesting parity could arrive in two to five years if current trends hold.

The report also updated its productivity estimates. Earlier work suggested widespread AI adoption could boost U.S. labor productivity by 1.8 percentage points annually over the next decade. After adjusting for task success rates and for bottlenecks where certain tasks remain essential, that estimate falls to 1.0-1.2 percentage points, still economically significant but roughly a third to just under half below the unadjusted figure.

Anthropic found that Claude tends to handle higher-education tasks, which could create a “deskilling” effect if AI removes the more complex components of jobs while leaving routine work behind. Technical writers and travel agents would see this pattern, while occupations like property management might experience upskilling as administrative tasks shift to AI, leaving higher-level negotiation and stakeholder management.

The research noted that how users prompt Claude directly shapes response sophistication, with a 0.92 correlation between the education level required to understand a prompt and the level required to understand the response. API usage shows consistently higher speedups than consumer interactions but lower task success rates, likely reflecting its single-turn nature versus multi-turn conversations that allow iteration and correction.
