This week in AI, we cover significant developments across the industry, from Google’s global expansion of AI Overviews in search to the Linux Foundation’s launch of the Open Model Initiative. xAI introduces its Grok-2 language model, which outpaces competitors on key benchmarks, while Anthropic’s new prompt caching feature promises substantial cost and speed improvements for developers. Plus, see how Pickaxe lets entrepreneurs build and monetize GPT-4-powered apps without writing a single line of code. Details below.

Linux Foundation Launches Open Model Initiative for AI

The Linux Foundation has introduced the Open Model Initiative (OMI), a new effort to promote the development of openly licensed, ethical AI models. The initiative aims to remove the barriers to enterprise adoption created by restrictive licensing by producing high-quality, freely usable AI models that foster innovation.

The initiative has outlined several key objectives:

1. Establish a governance framework.

2. Gather feedback from the open-source community on future AI model research and training needs.

3. Enhance model interoperability and metadata practices within the AI community.

4. Develop and begin captioning a dataset for AI model training.

5. Complete an alpha test version of the AI model with targeted red teaming for security and ethical considerations.

6. By the end of the year, provide the community with an alpha version of the model and fine-tuning scripts.

Jim Zemlin, Executive Director of the Linux Foundation, emphasized the importance of open and collaborative AI development, highlighting OMI’s role in making AI accessible and beneficial for all. OMI invites participation through its Discord and GitHub communities for those interested in contributing.

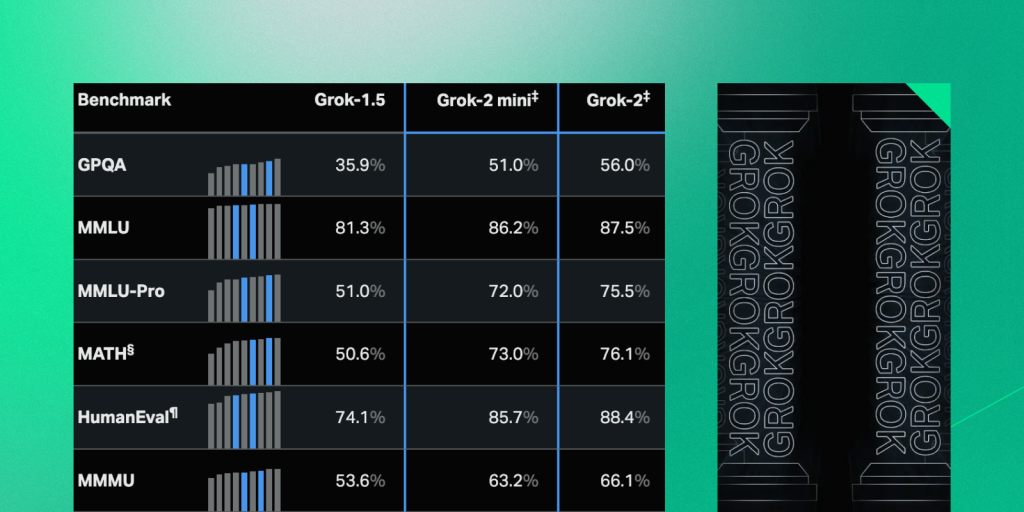

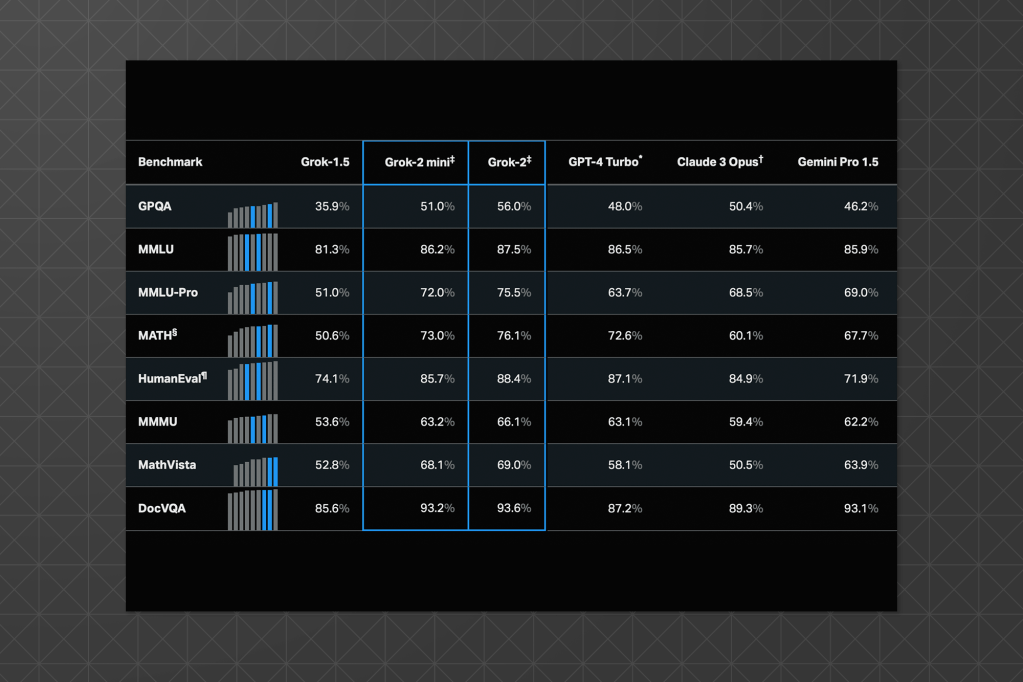

Grok-2 Beta Release: Frontier AI Model from xAI

xAI has introduced Grok-2, a cutting-edge language model with advanced reasoning capabilities, alongside its smaller counterpart, Grok-2 mini. Both models are now available to users on the 𝕏 platform, with an enterprise API release planned for later this month.

Grok-2 has already performed strongly on the LMSYS leaderboard, where it outperforms models such as Claude 3.5 Sonnet and GPT-4-Turbo, and in benchmarks covering reasoning, reading comprehension, and coding. It also does well on vision-based tasks and document-based question answering.

𝕏 Premium and Premium+ users can directly experience Grok-2’s enhanced capabilities through the app, benefiting from real-time information integration and a more intuitive user experience.

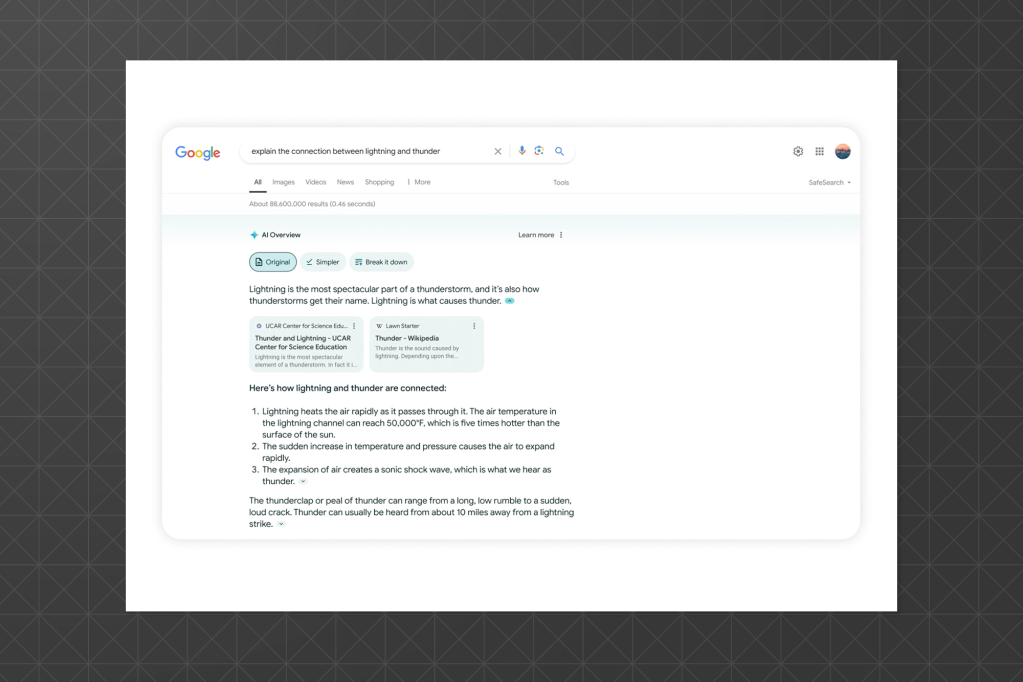

Google Search Expands AI Overviews Globally

After launching in the US earlier this year, Google is rolling out its AI Overviews feature to six new countries—the UK, India, Japan, Indonesia, Mexico, and Brazil. This feature, which uses AI to summarize search results, now includes new functionalities like a save option and experimental in-text links within summaries.

While Google claims these enhancements drive more website traffic, the long-term impact on content creators and publishers remains under scrutiny. SEO experts express concerns that AI-generated summaries may reduce the need for users to visit external sites, sparking debates about the future of referral traffic.

As the global rollout progresses, Google’s ability to balance AI assistance with driving organic traffic will be closely watched.

Anthropic Introduces Prompt Caching for Claude Models

Anthropic has launched prompt caching in public beta for its Claude 3.5 Sonnet and Claude 3 Haiku models, with support for Claude 3 Opus coming soon. This feature allows developers to cache frequently used context between API calls, significantly reducing costs and latency for long prompts.
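For readers who want to see what "caching context between API calls" could look like in practice, here is a minimal sketch of a request body. It only builds the payload and makes no network call. The field names (`system` as a list of text blocks, `cache_control` with type `ephemeral`), the model ID, and the beta header are assumptions based on our understanding of the Messages API during the public beta, so check Anthropic's documentation before relying on them. The helper `build_cached_request` is a hypothetical name used for illustration.

```python
import json

# Assumed values for the public beta; confirm against Anthropic's current docs.
MODEL_ID = "claude-3-5-sonnet-20240620"
BETA_HEADERS = {"anthropic-beta": "prompt-caching-2024-07-31"}


def build_cached_request(reference_text: str, question: str) -> dict:
    """Build a request body whose long, stable context is marked cacheable.

    Hypothetical helper: it only assembles a dict and sends nothing.
    """
    return {
        "model": MODEL_ID,
        "max_tokens": 512,
        "system": [
            # Short instructions placed ahead of the large context.
            {"type": "text", "text": "Answer using only the reference text."},
            # The large context that stays identical across calls.
            # cache_control marks the end of the prefix to be reused.
            {
                "type": "text",
                "text": reference_text,
                "cache_control": {"type": "ephemeral"},
            },
        ],
        # Only this part changes from one call to the next.
        "messages": [{"role": "user", "content": question}],
    }


if __name__ == "__main__":
    body = build_cached_request("<long document text>", "What is the refund policy?")
    print(json.dumps(body, indent=2))
```

The design point is that the reusable material sits at the front of the prompt and stays byte-for-byte identical, while the changing question comes last. Two calls built with the same `reference_text` share the same `system` blocks and differ only in `messages`.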

Prompt caching is most useful for conversational agents, coding assistants, and large-document processing, where the same prompt content is reused across many calls. Early users report reductions of up to 90% in cost and up to 85% in latency for long prompts.
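To see where a cost saving of up to 90% could come from, here is a rough back-of-the-envelope sketch. The pricing inputs are assumptions for illustration, not figures from this article: a base input price of $3 per million tokens, a cache write billed at 1.25 times that price, and a cache read billed at 0.10 times that price. It also assumes every call after the first hits the cache, and it ignores output tokens and the uncached part of each prompt.

```python
# Assumed pricing, used as illustrative inputs rather than current rates.
BASE_INPUT_USD_PER_MTOK = 3.00   # base input price per million tokens
CACHE_WRITE_MULTIPLIER = 1.25    # first call writes the prefix to the cache
CACHE_READ_MULTIPLIER = 0.10     # later calls read the prefix from the cache


def prefix_cost_uncached(prefix_tokens: int, calls: int) -> float:
    """Input cost in USD of resending the same prefix on every call."""
    return prefix_tokens * calls * BASE_INPUT_USD_PER_MTOK / 1_000_000


def prefix_cost_cached(prefix_tokens: int, calls: int) -> float:
    """Input cost in USD with one cache write followed by cache reads."""
    if calls <= 0:
        return 0.0
    per_call = prefix_tokens * BASE_INPUT_USD_PER_MTOK / 1_000_000
    write = per_call * CACHE_WRITE_MULTIPLIER
    reads = per_call * CACHE_READ_MULTIPLIER * (calls - 1)
    return write + reads


def savings_fraction(prefix_tokens: int, calls: int) -> float:
    """Fraction of prefix input cost saved by caching (negative means a loss)."""
    if calls <= 0:
        raise ValueError("calls must be positive")
    uncached = prefix_cost_uncached(prefix_tokens, calls)
    return 1 - prefix_cost_cached(prefix_tokens, calls) / uncached


if __name__ == "__main__":
    for calls in (1, 2, 10, 100):
        print(calls, f"{savings_fraction(100_000, calls):.1%}")
```

Under these assumptions, a single call is more expensive with caching because of the write surcharge, and the saving grows with the number of reuses, approaching but never reaching 90%. That is consistent with the "up to" wording in the reported figures.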

A major customer, Notion, has already integrated prompt caching into its AI-powered features, optimizing operations and enhancing user experiences.

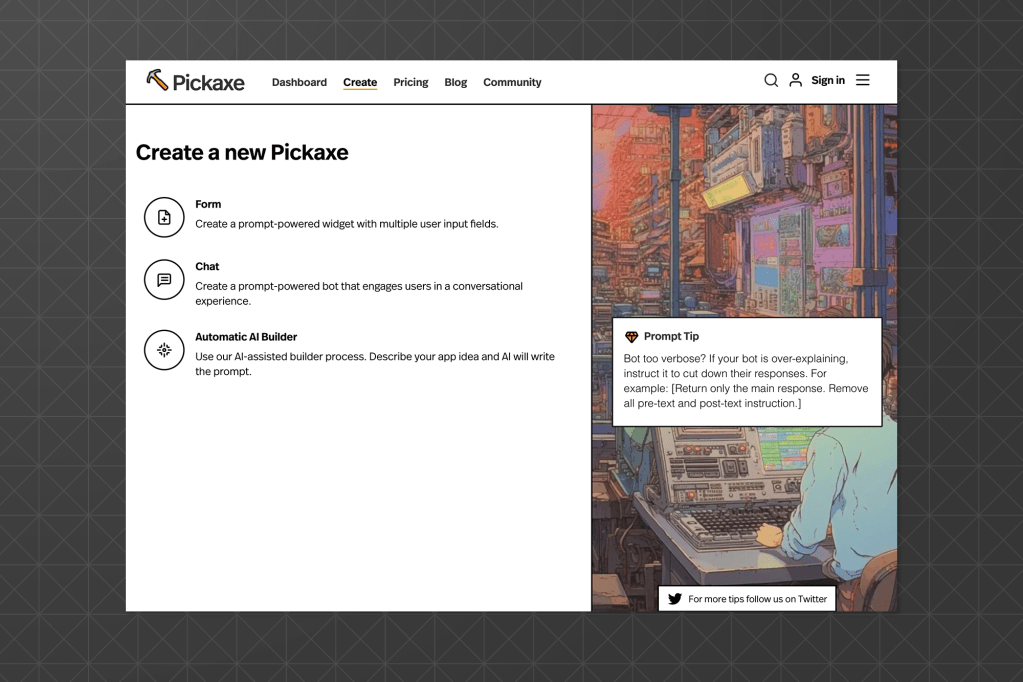

Weekly Tech Highlight: Pickaxe

This week’s featured tool is Pickaxe, a no-code platform that empowers users to easily create, share, and monetize GPT-4-powered apps. Designed for knowledge entrepreneurs, Pickaxe allows you to build AI tools using your own documents, data, and expertise—no coding required.

With Pickaxe, you can:

- Create AI tools by leveraging prompts, documents, and more.

- Share your AI-powered applications through a white-labelled web app branded with your identity.

- Sell access to your apps and earn revenue from your knowledge and expertise.

Pickaxe offers a streamlined way to launch an AI storefront, perfect for industries ranging from coaching and consulting to wellness and education. You can even embed these GPT-4 tools directly on your website, providing a seamless experience for your users.

Stay ahead of the curve: join our community today!

Follow us for the latest discoveries, innovations, and discussions that shape the world of artificial intelligence.