A note from the editor: This week, we’re stepping back from our usual format to highlight the year’s most significant releases. Not the loudest launches or the biggest benchmarks, but the tools that actually changed how teams work. Video generation that handles audio. Context windows that process entire codebases. Private model training for brand assets. Consider this your curated guide to what mattered most in AI during the first ten months of 2025.

Listen to the AI-Powered Audio Recap

This AI-generated podcast is based on our editor team’s AI This Week posts. We use tools like Google NotebookLM, Descript, and ElevenLabs to turn written insights into an engaging audio experience. While the process is AI-assisted, our team ensures each episode meets our quality standards. We’d love your feedback. Let us know how we can make it even better.

OpenAI: Intelligence at Scale

GPT-5: Smarter, Quieter Intelligence at Scale

Launch Date: August 7, 2025

GPT-5 didn’t try to reinvent how people interact with LLMs. It simply made them more useful, with longer threads, deeper reasoning, and cleaner handoffs between quick tasks and complex ones. It now powers Copilot across Microsoft 365, sits behind Azure AI’s smarter agents, and runs improved completions for ChatGPT Enterprise. Less fanfare, more function. It drafts, it codes, it summarizes without breaking the mental model of how humans already work.

It’s also more expensive to run when switching into “deep thinking” mode. That mode makes sense for contracts, RFPs, and code. Not so much for everyday emails. Choosing when to invoke that depth is becoming part of the workflow strategy.

How to Apply GPT-5 in Your Workflow:

- Document Strategy: Use deep-thinking mode for high-stakes documents such as legal contracts, technical specifications, and strategic proposals. Reserve standard mode for routine correspondence and internal updates.

- Code Review Pipeline: Integrate GPT-5 into pull request reviews for complex architectural decisions while using faster models for style checks and documentation generation.

- Knowledge Management: Feed GPT-5 entire project histories or documentation sets, then use it as an intelligent search layer that understands context and relationships between documents.

- Meeting Prep: Upload background materials, previous meeting notes, and stakeholder briefs to generate informed talking points and anticipate discussion threads.
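
One way to make the "when to go deep" decision explicit is a small rule table that sits in front of the model call. The sketch below is illustrative only: the mode labels and document types are hypothetical names chosen for this example, not real API parameters, and your own list of high-stakes work will differ.

```python
from dataclasses import dataclass

# Hypothetical mode labels for illustration; not real API values.
DEEP = "deep-thinking"
STANDARD = "standard"

# Assumed list of document types treated as high-stakes, following the
# examples in the text (contracts, RFPs, specs, proposals, code).
HIGH_STAKES_TYPES = {"contract", "rfp", "technical_spec", "strategic_proposal", "code"}


@dataclass(frozen=True)
class Task:
    doc_type: str


def choose_mode(task: Task) -> str:
    """Pick the more expensive mode only for high-stakes document types."""
    return DEEP if task.doc_type in HIGH_STAKES_TYPES else STANDARD


if __name__ == "__main__":
    for doc_type in ("contract", "email", "rfp", "status_update"):
        print(doc_type, "->", choose_mode(Task(doc_type)))
```

Keeping the rule in one place makes the cost trade-off visible and easy to revisit as usage patterns change.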

Sora 2: Text-to-Video That Understands Physics

Launch Date: September 30, 2025

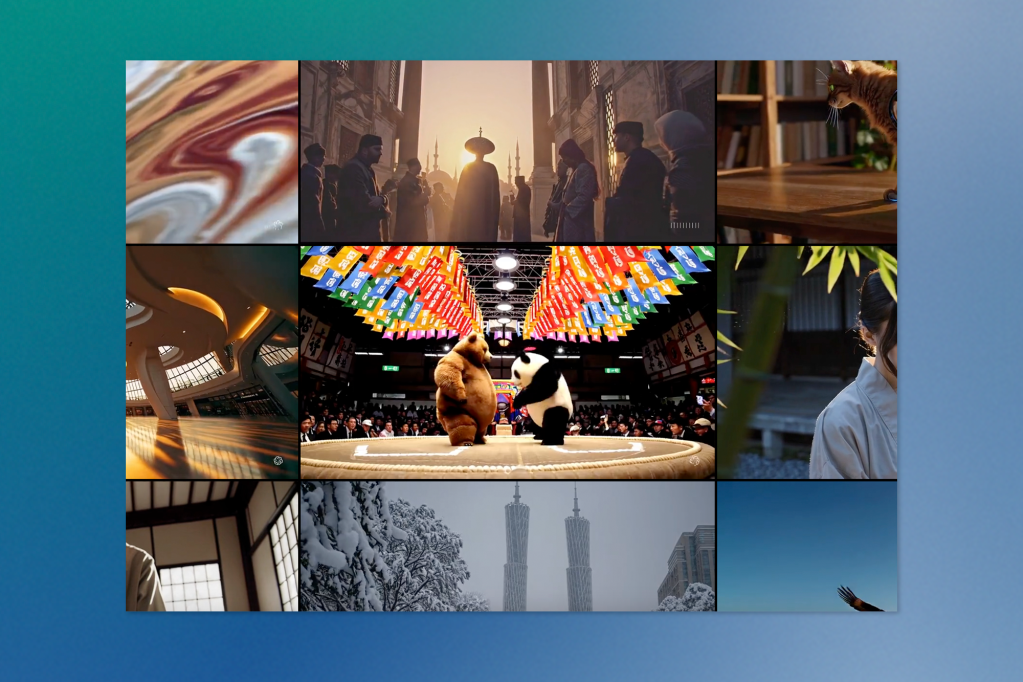

With Sora 2, OpenAI brought coherence to AI-generated video. Characters move naturally. Scenes follow physical logic. Audio syncs with action. It’s short-form, yes, but not shallow. Teams experimenting with product storytelling or internal prototyping use it to visualize concepts without hiring crews or building renders. Scenes are described, not directed. Edits happen via prompt, not timeline.

That said, realistic video with voice brings scrutiny. Consent, likeness, and legal guardrails aren’t optional. OpenAI added filters after high-profile complaints. Others will need to do the same.

How to Apply Sora 2 in Your Workflow:

- Product Visualization: Generate quick product demos showing features in action before committing to professional video production.

- Training Materials: Create internal how-to videos and onboarding content by describing scenarios rather than scripting and filming them.

- Concept Testing: Produce multiple versions of marketing concepts to test messaging and visual approaches with stakeholders before final production.

- Sales Enablement: Build customized demo videos for specific prospects, showing your product solving their particular use case.

- Legal Framework: Establish clear approval workflows that include legal review for any customer-facing video, and maintain documentation of generation parameters for audit trails.
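
For the audit trail, a plain structured record per generation is often enough to start. The following is a minimal sketch, assuming you store the prompt, the generation parameters, and the name of whoever signed off; the field names and helper functions are made up for this example and are not part of any vendor API.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def build_audit_record(prompt: str, params: dict,
                       legal_reviewer: Optional[str] = None,
                       created_at: Optional[datetime] = None) -> dict:
    """Bundle one generation request into a record suitable for an audit log.

    `params` must be JSON-serializable. All field names are hypothetical.
    """
    stamp = (created_at or datetime.now(timezone.utc)).isoformat()
    record = {
        "prompt": prompt,
        "params": params,
        "legal_reviewer": legal_reviewer,
        "created_at": stamp,
    }
    # Hash a canonical JSON form so the same inputs always yield the same ID.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    record["record_id"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return record


def cleared_for_customers(record: dict) -> bool:
    """Customer-facing use requires a named legal reviewer on the record."""
    return record["legal_reviewer"] is not None


if __name__ == "__main__":
    draft = build_audit_record("Product demo, 8 seconds", {"seconds": 8})
    print(draft["record_id"][:12], cleared_for_customers(draft))
```

Because the ID is derived from the record contents, any later change to the prompt, parameters, or reviewer produces a different ID, which makes tampering easier to spot.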

Google: Multimodal Integration

Gemini 2.5 Pro: Multimodal With Memory

Launch Date: March 25, 2025

Long context has finally started to feel real. Gemini 2.5 Pro accepts up to 1 million tokens and doesn’t just regurgitate; it reasons through them. It’s showing up in everything from creative research (upload references, get variant concepts) to code scaffolding (digest docs, build flows). Its structured memory features allow smarter follow-ups, making it feel less like a chat window and more like a competent partner.

The catch? It’s resource-intensive. Feeding that kind of context takes planning and budget. But for high-stakes work (think architecture docs or scientific data), the payoff is substantial.

How to Apply Gemini 2.5 Pro in Your Workflow:

- Research Synthesis: Upload dozens of research papers, competitive analyses, or market reports, then ask for synthesized insights and pattern identification across the entire corpus.

- Codebase Understanding: Feed entire codebases or large subsystems to understand architectural decisions, identify technical debt, or plan refactoring strategies.

- Regulatory Compliance: Input compliance documents, policy manuals, and legal frameworks to get contextual guidance on specific business scenarios.

- Content Strategy: Load brand guidelines, past campaigns, competitor content, and market research to develop informed creative directions that account for the full landscape.
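
Planning for that context budget can start with a back-of-the-envelope estimate before anything is uploaded. The sketch below assumes roughly four characters per token, which is only a rule of thumb for English text (real tokenizers vary), and a hypothetical reserve for the prompt and the model's answer. It groups documents into batches that fit under the 1 million token figure quoted above.

```python
from typing import Dict, List

CONTEXT_LIMIT_TOKENS = 1_000_000  # figure quoted in the text above
CHARS_PER_TOKEN = 4               # rough assumption; real tokenizers differ
RESERVE_TOKENS = 50_000           # hypothetical headroom for prompt and answer


def estimate_tokens(text: str) -> int:
    """Estimate token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def plan_batches(documents: Dict[str, str],
                 limit: int = CONTEXT_LIMIT_TOKENS,
                 reserve: int = RESERVE_TOKENS) -> List[List[str]]:
    """Greedily group document names into batches that fit the budget."""
    budget = limit - reserve
    batches: List[List[str]] = []
    current: List[str] = []
    used = 0
    for name, text in documents.items():
        cost = estimate_tokens(text)
        if cost > budget:
            raise ValueError(f"{name} alone exceeds the budget; split it first")
        if current and used + cost > budget:
            batches.append(current)
            current, used = [], 0
        current.append(name)
        used += cost
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    docs = {"report_a": "x" * 400, "report_b": "y" * 400, "report_c": "z" * 400}
    print(plan_batches(docs, limit=250, reserve=50))
```

If the provider exposes an exact token counter, swapping it in for `estimate_tokens` gives a tighter plan; the batching logic stays the same.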

Imagen 4: Finally Nailing Typography

Launch Date: May 20, 2025

For designers, Imagen 4 fixed one of the most annoying issues in text-to-image models: melting fonts. It renders legible, brand-appropriate type in assets, well enough that AI posters and signage are starting to appear in the wild. Paired with Gemini or Workspace tools, it lets teams build slide decks, ad mockups, and diagrams directly inside Google Docs or Slides.

Usage rights still matter. Just because it’s AI-generated doesn’t make it public domain. That’s why SynthID remains baked in, and why legal review isn’t going anywhere.

How to Apply Imagen 4 in Your Workflow:

- Presentation Design: Generate custom graphics with proper typography directly in Google Slides for internal presentations and client pitches.

- Social Media Assets: Create platform-specific graphics with on-brand text overlays without needing a designer for every variation.

- Marketing Mockups: Rapidly prototype ad concepts, landing page heroes, and campaign visuals with readable headlines and body copy.

- Signage and Print: Develop event signage, booth graphics, and internal wayfinding designs with legible type that matches brand standards.

- Rights Management: Implement a review process that verifies SynthID watermarks are present and maintains generation logs for asset provenance.

Flow: Directing AI Video Like a Filmmaker

Launch Date: May 20, 2025

Flow isn’t a model. It’s a directing tool. A place where prompts become scenes, and sequences get built one camera angle at a time. By abstracting the complexity of Veo and Imagen under a storyboard-like interface, Flow gives marketers, educators, and video teams a hands-on way to guide AI video without diving into code.

For prototyping and internal content, it’s already useful. For final output, careful post-review is still a must.

How to Apply Flow in Your Workflow:

- Storyboard Development: Rapidly visualize script concepts with multiple camera angles and shot compositions before committing to production.

- Internal Communications: Create executive updates, all-hands presentations, and training content without video production overhead.

- Educational Content: Build course materials and tutorials with consistent visual style and scene-by-scene control.

- Client Presentations: Develop pitch videos that show creative concepts in motion, helping stakeholders visualize the final product.

- Iterative Refinement: Use Flow’s scene-level editing to adjust individual shots without regenerating entire sequences, speeding up the revision process.

Gemini 2.5 Computer Use: UI Automation With AI Eyes

Launch Date: October 7, 2025

This wasn’t about chat. It was about getting things done. Gemini 2.5’s UI automation model acts as a real agent: screen in, action out. Finance teams use it to pull invoices from legacy portals. HR bots navigate old forms. Ops teams let it handle the annoying “click and paste” routines between non-integrated systems.

It’s fragile (GUI changes break flows), but it’s proving invaluable in spaces without good APIs. And for once, the bottleneck isn’t intent; it’s the interface.

How to Apply Gemini Computer Use in Your Workflow:

- Legacy System Integration: Automate data extraction from old ERP systems, HR platforms, or financial tools that lack modern APIs.

- Cross-Platform Workflows: Build bridges between disconnected tools (CRM to accounting, project management to reporting systems) without custom integration development.

- Repetitive Data Entry: Eliminate manual copy-paste work between systems for invoice processing, timesheet management, or inventory updates.

- Testing and QA: Automate UI testing for web applications, simulating real user interactions and validating workflows end-to-end.

- Maintenance Planning: Build monitoring systems that detect when GUI changes break automation flows, and maintain version control for screen interaction scripts.
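
Because GUI changes are the main failure mode, a cheap pre-flight check can catch drift before an automation run does damage. Here is a minimal sketch, assuming each flow keeps a list of the on-screen labels it depends on and that something upstream (not shown, and entirely hypothetical) reports the labels currently visible.

```python
from typing import Dict, List, Set


def detect_drift(expected: Set[str], observed: Set[str]) -> Dict[str, List[str]]:
    """Compare the labels a flow depends on with what is on screen now."""
    return {
        "missing": sorted(expected - observed),     # likely to break the flow
        "unexpected": sorted(observed - expected),  # new UI worth reviewing
    }


def is_safe_to_run(expected: Set[str], observed: Set[str]) -> bool:
    """Run only when every element the flow depends on is still present."""
    return expected <= observed


if __name__ == "__main__":
    # Hypothetical labels for an invoice-download flow on a legacy portal.
    expected = {"Login", "Invoices", "Download PDF"}
    observed = {"Login", "Invoices", "Export"}
    print(detect_drift(expected, observed))
    print("safe to run:", is_safe_to_run(expected, observed))
```

Storing the `expected` sets in version control alongside the flow definitions gives you a history of when and how each interface changed.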

Veo 3.1: Cinematic AI With Audio

Launch Date: October 15, 2025

Veo 3.1 pushed audio further into AI-generated video, building on the native sound that arrived with Veo 3. Not just background music, but full synchronized soundscapes and dialogue options. Ad agencies and film schools alike are using it for early prototyping and pitch decks. It can recreate a narrated brand video with tone, movement, and speech that match a creative direction.

Still, there’s a gap between demo and delivery. Longer videos get expensive, and realism cuts both ways; it’s easier to mislead when outputs feel polished.

How to Apply Veo 3.1 in Your Workflow:

- Pitch Deck Videos: Create polished concept videos with narration for client presentations without recording voiceover separately.

- Ad Concept Testing: Generate multiple versions of video ads with different voice tones, pacing, and audio moods to test with focus groups.

- E-Learning Development: Produce educational content with synchronized narration, background audio, and visual instruction in one generation pass.

- Brand Video Prototypes: Develop complete audiovisual brand stories to align stakeholders before investing in professional production.

- Audio-Visual Consistency: Use Veo’s audio sync capabilities to ensure that generated sound effects, ambient noise, and dialogue match on-screen action naturally.

Adobe: Enterprise Creative Control

AI Foundry: Custom Generative Models for Brands

Launch Date: October 20, 2025

Adobe didn’t just refresh Firefly. With Foundry, it introduced something else entirely: a way for enterprises to train and deploy private generative models built on their own IP. Design and marketing teams use it to generate brand-consistent imagery, multilingual assets, or even entire campaign kits without leaking style data to public models. No prompt engineering. No GPU fiddling. Just creative direction that sticks.

But it’s not turnkey. Training costs are real. Reviews still matter. And what gets automated still needs a human to sign off. Even so, for companies with deep brand libraries, Foundry’s value is hard to ignore.

How to Apply Adobe Foundry in Your Workflow:

- Brand Asset Library: Train models on your complete brand archive to generate on-brand imagery, illustrations, and design elements that match established visual identity.

- Localization at Scale: Create culturally adapted creative assets for global markets while maintaining brand consistency across languages and regions.

- Campaign Variation: Generate dozens of creative variants for A/B testing, seasonal campaigns, or channel-specific adaptations from a single creative brief.

- IP Protection: Keep proprietary design styles, brand aesthetics, and creative approaches entirely within your organization without exposing them to public model training.

- Approval Workflows: Integrate Foundry outputs into existing creative review processes, treating AI-generated assets the same as human-created ones for quality control.

- ROI Analysis: Calculate training costs against the volume of assets needed and speed-to-market requirements to determine which creative use cases justify custom model training.
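
The ROI question reduces to a break-even count: training cost divided by the saving per asset. The sketch below shows that arithmetic; every figure in the usage example is invented for illustration and says nothing about actual Foundry pricing.

```python
import math
from typing import Optional


def break_even_assets(training_cost: float,
                      manual_cost_per_asset: float,
                      generated_cost_per_asset: float) -> Optional[int]:
    """Number of assets needed before custom training pays for itself.

    Returns None when generation is not cheaper per asset than manual work,
    because in that case training never breaks even.
    """
    saving = manual_cost_per_asset - generated_cost_per_asset
    if saving <= 0:
        return None
    return math.ceil(training_cost / saving)


if __name__ == "__main__":
    # Hypothetical numbers: 50,000 to train, 120 per manual asset,
    # 20 per generated asset including human review time.
    print(break_even_assets(50_000, 120, 20))
```

Including review time in the per-asset generated cost keeps the estimate honest, since human sign-off remains part of the process.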

Meta: Open Source Innovation

Llama 4: Long Context, Openly Accessible

Launch Date: April 5, 2025

While proprietary models kept their tech close, Meta released Llama 4 with generous context windows and robust multimodal support. Startups love it. Researchers fine-tune it. Internal tool builders use it to power domain-specific agents without a license negotiation.

Its open nature is both a strength and a concern. Misinformation campaigns don’t need approval to use it. But responsible use and community pressure are already shaping norms, especially with Meta’s community license requiring the very largest deployers to request separate terms.

How to Apply Llama 4 in Your Workflow:

- Custom Domain Agents: Fine-tune Llama 4 on industry-specific terminology, internal documentation, or specialized knowledge bases for legal, medical, or technical applications.

- Cost-Effective Scaling: Deploy on your own infrastructure to control costs for high-volume applications like customer support bots or content moderation systems.

- Privacy-First Applications: Keep sensitive data entirely on-premises by running Llama 4 locally for healthcare records, financial analysis, or confidential research.

- Research and Development: Experiment with model architecture, training techniques, and novel applications without licensing restrictions or API rate limits.

- Community Integration: Leverage the open-source ecosystem’s fine-tuned variants, tools, and best practices developed by the Llama community.

Anthropic: Reliable Intelligence

Claude 4.5 Sonnet & Haiku: Speed, Memory, and Real Multi-Hour Agents

Launch Dates: September 29 & October 15, 2025

Anthropic’s Claude 4.5 update sharpened the balance between speed and depth. Sonnet 4.5 shows its strength in long-term reasoning, capable of maintaining session context for hours. Haiku 4.5 brings that power to real-time apps: fast, responsive, and cost-effective. Together, they show up in dev tools, IDEs, and support workflows. Sonnet tackles complex planning and bug triage. Haiku handles bulk ticket replies and frontend logic.

The combo is fast becoming a new pattern: plan with one, execute with the other. Memory remains a concern; teams must verify long chains of logic. But the improvements in safety and transparency are beginning to show.

How to Apply Claude 4.5 Sonnet & Haiku in Your Workflow:

- Two-Tier Architecture: Use Sonnet for complex reasoning tasks (system design, strategic analysis, technical documentation) and Haiku for high-volume execution (code formatting, ticket responses, data transformation).

- Long-Running Sessions: Leverage Sonnet’s multi-hour context retention for extended debugging sessions, complex project planning, or iterative document development.

- Development Workflow: Integrate Sonnet into IDEs for architectural decisions and code review while using Haiku for autocomplete, refactoring suggestions, and test generation.

- Customer Support: Deploy Haiku for first-line support responses with fast turnaround, escalating complex issues to Sonnet for detailed analysis and solution development.

- Cost Optimization: Route requests dynamically based on complexity, using Haiku’s speed and efficiency for 80% of tasks while reserving Sonnet for the 20% that require deeper reasoning.

- Verification Protocols: Implement checkpoints in long reasoning chains to validate intermediate conclusions, preventing compounding errors in extended sessions.
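
The plan-with-one, execute-with-the-other pattern and the checkpoint idea can both be expressed in a few lines. This is a sketch under stated assumptions: the tier labels and task kinds are hypothetical placeholders rather than real model identifiers, and the steps are plain functions standing in for model calls.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical tier labels; not real model identifiers.
PLANNER = "sonnet-tier"
EXECUTOR = "haiku-tier"

# Assumed task kinds that need deeper reasoning, per the list above.
COMPLEX_KINDS = {"system_design", "strategic_analysis", "bug_triage"}


def route(kind: str) -> str:
    """Send complex work to the planner tier and everything else to the executor."""
    return PLANNER if kind in COMPLEX_KINDS else EXECUTOR


def run_with_checkpoints(steps: Iterable[Callable[[object], object]],
                         validate: Callable[[object], bool],
                         state: object) -> Tuple[object, int]:
    """Apply steps in order, validating each intermediate result.

    Stops at the first result that fails validation and returns the last
    good state plus the number of steps completed, so one bad conclusion
    does not compound through the rest of the chain.
    """
    completed = 0
    for step in steps:
        candidate = step(state)
        if not validate(candidate):
            return state, completed
        state = candidate
        completed += 1
    return state, completed


if __name__ == "__main__":
    print(route("system_design"), route("ticket_reply"))
    steps = [lambda x: x + 1, lambda x: -x, lambda x: x + 10]
    print(run_with_checkpoints(steps, lambda x: x >= 0, 0))
```

In practice the validator might be a schema check, a unit test run, or a human review gate; the point is that each long chain has defined places to stop.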

The Patterns Emerging in 2025

AI Audio Is No Longer an Add-On

Text-to-video isn’t enough anymore. Whether it’s Sora, Veo, or Flow, sound is now part of the output. Teams don’t want to write scripts and then manually mix. They want one prompt that delivers sight and sound together.

Workflow-Specific AI Is Winning

General models are getting better, but targeted ones (like Adobe’s Foundry or Flow’s scene builder) are proving sticky. When tools understand a specific job-to-be-done, they get used more often, not just for more demanding work.

Safety Infrastructure Is Maturing (Slowly)

From watermarking to memory boundaries, vendors are building more thoughtful infrastructure around generative AI. It’s uneven and incomplete, but the direction is clear: trust and traceability are becoming part of the spec sheet.

Open and Proprietary Models Are Converging in Practice

The gap between open-source and proprietary models is narrowing functionally. Teams increasingly run hybrid architectures, using open models for privacy-sensitive tasks and proprietary ones for bleeding-edge capabilities.

Context Windows Are Becoming the New Battleground

Long context isn’t just a feature anymore; it’s a fundamental capability that changes how teams work. Models that can maintain coherent reasoning across millions of tokens are enabling entirely new workflows.

The Quiet Revolution Is Already Underway

Not everything shipped in 2025 made headlines. But behind the scenes, a shift is underway. The most impactful AI breakthroughs didn’t just raise benchmarks; they quietly changed how teams approach work. The tools listed here aren’t just impressive. They’re useful. And that utility is what makes them stick.

Looking to integrate intelligent tools into your creative, development, or content workflows? Trew Knowledge helps enterprises design, build, and scale AI-powered digital experiences using the best of what shipped (and what’s still coming). Let’s connect.