Artificial intelligence reached an inflection point this week as major institutions stopped experimenting and started deploying. From banks granting AI systems their own employee logins to tech giants forming multi-million-dollar partnerships with teachers’ unions, we’re witnessing AI’s transition from a promising technology to an operational necessity. The developments reveal how different sectors are wrestling with the same fundamental challenge: integrating AI capabilities while preserving the human elements that define their industries.

Listen to the AI-Powered Audio Recap

This AI-generated podcast is based on our editor team’s AI This Week posts. We use advanced tools like Google NotebookLM, Descript, and ElevenLabs to turn written insights into an engaging audio experience. While the process is AI-assisted, our team ensures each episode meets our quality standards. We’d love your feedback, so let us know how we can make it even better.

Banks Embrace AI as Digital Employees

Major financial institutions are moving beyond traditional automation to deploy AI systems that function more like human workers. Bank of New York Mellon has created what it calls “digital employees” – AI systems with their own login credentials that operate autonomously within specific teams. These AI workers handle tasks such as identifying and fixing code vulnerabilities and validating payment instructions, and they report directly to human managers, just like their flesh-and-blood colleagues.

The approach represents a shift in how banks think about AI integration. Rather than building separate AI tools, institutions are creating systems that plug directly into existing workflows and enterprise software. BNY’s digital workers can access the same applications as human employees, complete tasks independently, and escalate issues to their managers when needed. The bank plans to extend their access to email and collaboration platforms like Microsoft Teams, allowing these AI systems to proactively contact human supervisors when they encounter problems beyond their capabilities.

What sets BNY apart is the degree of autonomy granted to these systems. Unlike traditional AI assistants that provide suggestions or support, these digital employees can actually implement solutions, such as writing and deploying code patches for security vulnerabilities. However, human approval is still required for significant changes. The bank developed two distinct AI personas over three months, with each specializing in specific functions and deployable across multiple teams while maintaining strict data access controls.

Other financial institutions are taking more conservative approaches. Goldman Sachs recently launched an AI assistant that helps employees with coding suggestions, document summarization, and language translation – a helpful but far more limited offering compared to BNY’s autonomous digital workers. JPMorgan Chase’s leadership views the digital employee concept as a useful framework for business teams to understand AI capabilities, while acknowledging these systems require fundamentally different management approaches than traditional software.

The banking sector’s embrace of AI workers comes amid broader concerns about job displacement. Some experts predict that AI could eliminate significant portions of entry-level white-collar positions within the next few years, potentially leading to unemployment spikes. However, BNY emphasizes that it’s not slowing human hiring, despite expanding its digital workforce, positioning AI as an addition rather than a replacement for human talent.

UK and Singapore Partner on AI Financial Regulation

While banks like BNY are charging ahead with AI implementations, regulators worldwide are scrambling to create frameworks that can govern these rapidly evolving systems. The UK and Singapore have formed a strategic alliance to tackle one of the most pressing challenges in modern finance: how to regulate AI without stifling innovation. During their tenth annual Financial Dialogue in London, representatives from the UK’s Financial Conduct Authority and the Monetary Authority of Singapore moved beyond typical diplomatic pleasantries to address practical implementation challenges.

The partnership focuses on immediate applications rather than distant promises. Both countries are exploring how AI can enhance risk assessment capabilities, improve fraud detection systems, and enable more personalized financial services while maintaining strict regulatory compliance. A key concern driving discussions is the “explainability” problem – how financial institutions can satisfy regulators while leveraging AI systems that often operate as black boxes.

The collaboration extends beyond AI governance to broader fintech innovation. Both nations are advancing the Project Guardian asset tokenization initiative and exploring the Global Layer One project, which aims to create open, interoperable blockchain infrastructures with built-in regulatory compliance. These efforts reflect a recognition that emerging technologies require coordinated international approaches rather than fragmented national responses.

What makes this partnership particularly significant is its practical focus and clear timeline. Officials plan to reconvene before the next full dialogue in Singapore in 2026, with specific deliverables around sustainable finance and AI implementation frameworks. If successful, the UK-Singapore model could serve as a template for other financial centers facing similar regulatory challenges.

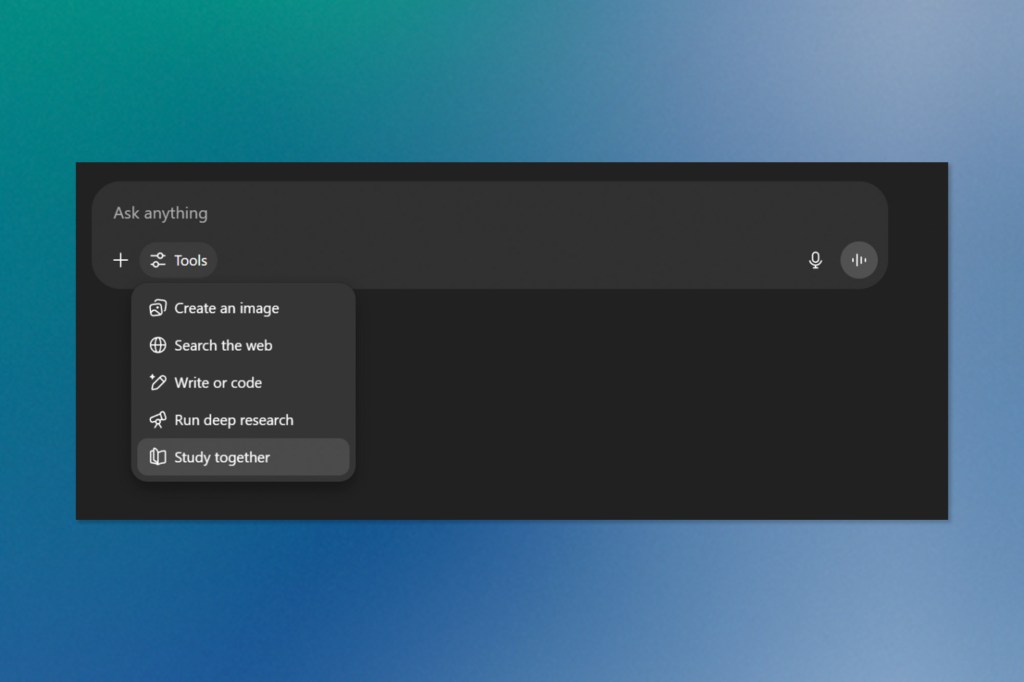

OpenAI Tests “Study Together” Feature

Beyond the boardrooms of major banks, OpenAI is quietly experimenting with how AI can reshape another critical sector: education. Some ChatGPT subscribers have spotted a mysterious new feature called “Study Together” appearing in their dropdown menus, suggesting the company is developing a more sophisticated approach to AI-powered learning.

Unlike ChatGPT’s typical question-and-answer format, this experimental feature flips the script entirely. Instead of providing direct answers to student queries, “Study Together” appears designed to act more like a Socratic tutor, asking probing questions and requiring students to work through problems themselves. The approach mirrors Google’s LearnLM initiative and represents a significant shift in how AI companies approach educational applications.

The timing is significant. ChatGPT has become ubiquitous in classrooms and study sessions, but its impact has been decidedly mixed. While teachers embrace it for creating lesson plans and educational materials, students often use it as a shortcut to complete assignments rather than actually learning the material. Some educators have warned that AI tools could undermine the entire foundation of higher education by making traditional assessment methods obsolete.

OpenAI’s “Study Together” experiment suggests that the company recognizes this tension and is trying to navigate the balance between utility and academic integrity. By forcing students to engage actively rather than passively consuming AI-generated answers, the feature could help preserve the educational value that critics worry is being lost.

The name itself hints at another intriguing possibility: collaborative learning sessions where multiple students could work together with AI guidance. If implemented, this could transform ChatGPT from an individual productivity tool into a platform for group study and peer learning.

While OpenAI hasn’t officially announced the feature or provided details about its broader rollout, the quiet testing phase reflects the company’s cautious approach to educational applications.

Tech Giants Team Up With Teachers’ Union on AI Training

While OpenAI experiments with individual learning features, the broader tech industry is taking a more systematic approach to AI in education. Microsoft, OpenAI, and Anthropic are collaborating with the American Federation of Teachers to launch the National Academy for AI Instruction, a $22.5 million initiative aimed at training educators nationwide on integrating AI tools into their classrooms.

The academy, slated to be based in New York City, represents a significant escalation in how tech companies approach educational partnerships. Rather than simply selling products to schools, these firms are now directly training the teachers who will shape how an entire generation learns to work with AI. The program aims to equip kindergarten through 12th-grade instructors with “the tools and confidence to bring AI into the classroom in a way that supports learning and opportunity for all students.”

The timing reflects the urgency many educators feel about AI adoption. Schools have struggled to keep pace with students who are already using ChatGPT, Copilot, and other AI tools for everything from homework help to essay writing. While some institutions have deployed detection tools to catch AI-assisted cheating, others are embracing these technologies as legitimate educational aids when used appropriately.

American Federation of Teachers president Randi Weingarten has emphasized that educators must have input into how AI transforms their profession, rather than having changes imposed upon them. The new academy could provide teachers with the knowledge needed to evolve their curriculum for a world where AI literacy becomes as fundamental as traditional computer skills.

However, the partnership is likely to face criticism from union members concerned about corporate influence in the education sector. Tech giants like Google, Apple, and Microsoft have long competed to establish their tools in schools, hoping to create lifelong users. The collaboration comes as some academics are pushing back entirely – professors in the Netherlands recently called for universities to ban AI use in classrooms and reconsider financial relationships with AI companies.

The initiative builds on Microsoft’s existing partnership with the AFL-CIO, of which the American Federation of Teachers is an affiliate, suggesting a broader strategy to work directly with labour organizations as AI transforms various industries. With the AFT representing 1.8 million workers, including teachers, school nurses, and college staff, the academy could influence how millions of students first encounter AI in their educational journey.

HousingWire AI Summit Returns to Focus on Real Estate Innovation

As AI transforms finance and education, the real estate industry continues building momentum around artificial intelligence adoption. The HousingWire AI Summit is scheduled for August 12th at the George W. Bush Presidential Center in Dallas, bringing together executives and technology professionals for another year of focused learning on the impact of AI across the mortgage, real estate, and title industries.

The summit’s continued emphasis on “tangible AI improvements” reflects the maturation of the real estate sector’s approach to artificial intelligence. Real estate’s gradual but steady embrace of AI represents a significant shift for an industry traditionally built on personal relationships and established processes. The fact that HousingWire is hosting another dedicated AI summit signals that artificial intelligence has evolved from an experimental technology to an essential business tool within the housing market. By maintaining its focus on practical implementation over theoretical possibilities, the summit positions itself as a bridge between AI innovation and real estate execution. For an industry where trust and accuracy are paramount, the emphasis on proven results rather than promises could accelerate the adoption of AI tools that genuinely improve the home buying and selling experience.
