Adobe MAX 2025 didn’t set out to impress with spectacle. It didn’t have to. The signals were there, threaded through product demos, keynote language, and quiet previews. Not louder tools. Smarter ones. Interfaces that interpret creative intent. Assistants that understand sequence and priority. Models that don’t just generate, but respond to patterns over time.

What emerged wasn’t a showcase of automation. It was a study in adaptation. Real-time audio layered into timelines with a single prompt. Custom AI trained on a brand’s visual DNA. A browser tab transforming into a full editing suite. Each detail calibrated for how teams already work, not how software used to expect them to.

This wasn’t creativity handed over to AI. It was a redefinition of who initiates, who refines, and how quickly the loop between vision and output can close.

A Reinvented Creative Stack for 2025

Generative AI as a Native Experience

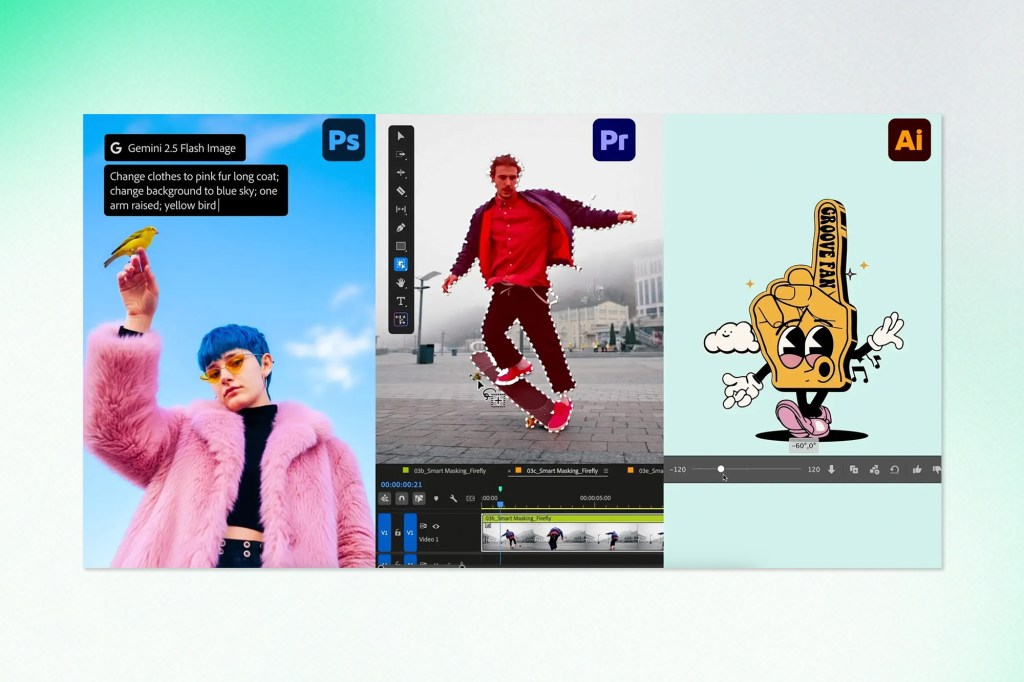

Adobe’s approach to AI this year wasn’t about adding more filters or standalone tools. Instead, generative AI was embedded directly into the interfaces of the Creative Cloud apps. The new Firefly Image Model 5 (now in public beta) outputs high-res 4MP assets with far greater control, enabling designers to edit, layer, and remix content without leaving Photoshop or Illustrator.

More importantly, Firefly now supports multi-model selection. That means creators can toggle between Adobe’s model, Google’s Gemini 2.5 Flash, Black Forest Labs’ FLUX.1, or other emerging tools, all from within the same interface. This shift opens the door to a broader creative palette while maintaining Adobe’s familiar UX.

AI Assistants Go Conversational

Not every task starts with a mouse click. Adobe leaned into that reality by expanding its AI Assistant (beta). Now available in Photoshop (web) and Adobe Express, this assistant interprets natural-language prompts and executes multi-step actions, including cropping, styling, adjusting, and even offering alternatives.

It’s not just prompt-to-image. It’s now prompt-to-process.

In Photoshop, the assistant understands the project’s context. In Express, it handles layouts, copy, and even brand-specific constraints. And in both, it’s not meant to replace decision-making. It simply reduces the friction between the idea and the output.

Introducing Project Moonlight

Among the most forward-looking previews at MAX was Project Moonlight, Adobe’s concept for a cross-platform, agentic assistant that coordinates creative workflows across apps and channels.

Unlike app-specific assistants, Moonlight connects to Creative Cloud libraries, Firefly Boards, and even social media accounts to understand what a user has created, what performs well, and what’s next on the roadmap. It helps structure entire content cycles, not just isolated tasks.

Whether suggesting assets for a new campaign based on style history, or mapping out an entire social media series based on past engagement, Moonlight works more like a strategic co-pilot than a simple chatbot. It’s currently in private beta, with early use cases focused on social teams and cross-functional creative operations.

Key Creative Cloud App Upgrades

Photoshop’s Expanding AI Toolkit

Photoshop 2025 brings nuance to generative editing. New features include:

- Harmonize: Automatically adjusts colour, tone, and lighting to blend objects into new backgrounds

- Generative Upscale: Built with Topaz Labs, this feature increases image resolution while preserving texture

- Multi-model Generative Fill: Toggle between multiple AI models for varied results

These upgrades reflect a deeper integration of machine learning into the design experience, one that adapts to the creative context rather than overriding it.

Illustrator Gets Smarter and Smoother

Illustrator saw fewer headline-grabbing changes, but the updates it did get are crucial:

- Generative Expand: Resize vector illustrations with new content that blends seamlessly

- Performance Boosts: Large files now render faster, previews are snappier, and tool lag has been significantly reduced

Together, they make Illustrator better suited for both rapid concepting and high-fidelity print workflows.

Lightroom and Premiere Bridge Manual and Automated Editing

Lightroom introduced Assisted Culling, which uses AI to suggest the best photos in a shoot based on focus, composition, and expression. It doesn’t remove human review, but it narrows the field faster.

Premiere Pro got:

- AI Object Mask (beta): Auto-detects and isolates people or items for targeted edits

- New Mask Types: Including fast vector masks for sharper rotoscoping

- Better AI Audio Cleanup: Background noise suppression and multitrack balancing

Small shifts that make big differences when editing hours of content.

Firefly’s Evolution Into a Creative Operating System

Firefly Image Model 5 Pushes Output Quality Further

Firefly’s fifth-generation model supports:

- 4MP default resolution

- Layered image editing

- Improved photorealism and lighting

It’s designed not just for experimentation, but for final asset production, with more precise control and repeatability.

From Still to Sound – Generative Audio and Voice

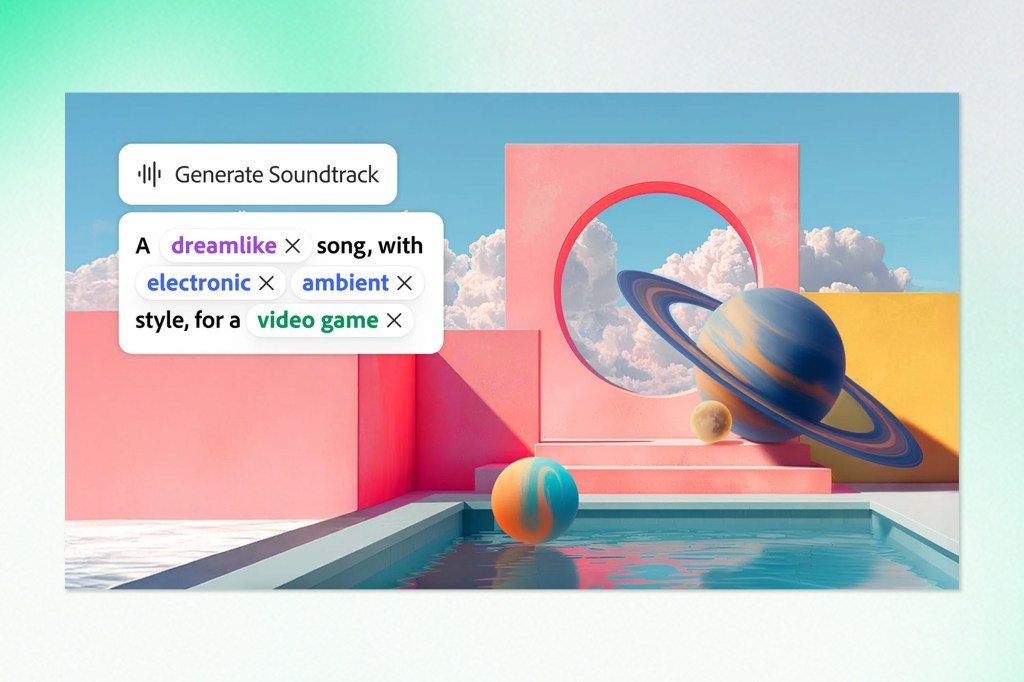

Firefly now handles sound. Two new tools:

- Generate Soundtrack: Compose synchronized music with style and tone controls

- Generate Speech: Add natural voiceovers using Adobe and ElevenLabs models

These tools are particularly useful for fast-turnaround video content, marketing assets, or any format where voice and music elevate the message.

Firefly Boards and Video Editing in the Browser

The Firefly Boards interface was enhanced with:

- PDF export and batch downloads

- Rotate Object for basic 3D perspective

- Quick Revision tools and Generative Text Editing for brand language updates

The new browser-based Firefly Video Editor also brings Adobe’s creative tools closer to a truly cloud-native suite.

A Platform That Welcomes Other Models

Partner AI Models Within Adobe Apps

Creative flexibility means choice. Adobe now lets users switch between Firefly and:

- Google Gemini 2.5 Flash

- Black Forest Labs FLUX.1

- Topaz Gigapixel (upscale)

- ElevenLabs (speech)

- …with more integrations coming

For creators, this means choosing the model that best fits each project, with no plugins or third-party apps required.

New Enterprise-Grade Features for Creative Scale

For businesses, Adobe launched:

- Firefly Foundry: Enterprise teams can now train private generative models using internal brand assets and style guides

- GenStudio Enhancements: Plan, manage, and publish assets through integrations with platforms like TikTok, Amazon Ads, and Google Marketing Platform

- Express Enterprise Controls: Lock templates, streamline approval workflows, enable self-serve design within guardrails

These updates help large teams deliver high volumes of content without trading off brand consistency or creative quality.

Sneaks Spotlight: Experimental Futures

Clean Take, Corrective AI, and Agent-Driven Sound Design

Adobe’s research team previewed:

- Project Clean Take: Isolate dialogue, music, and ambient noise from a mixed audio track

- Corrective AI: Adjust the tone and emotional delivery of voice-overs by editing the script directly

- AI Audio Assistant: Suggests and places ambient sounds in videos based on context

These demos point toward AI that doesn’t just generate but edits, refines, and adapts in real time.

Connected Creativity Across Devices and Teams

Express as the Front Door to Creation

Adobe Express continues to evolve into a key content hub. It now offers:

- A design assistant powered by natural language

- Social-ready publishing pipelines

- Developer frameworks for custom add-ons

For teams that don’t live in Photoshop or Premiere, this becomes a practical entry point into the Adobe ecosystem.

Collaboration Tools Catch Up to the Content Lifecycle

Creativity rarely happens in isolation. Adobe added new layers of collaboration, including:

- Shared Photoshop “Projects” with versioning and comments

- Editable Firefly Boards for moodboarding and ideation

- Enterprise-grade template management inside Express

These updates bring Adobe’s creative tools closer to how modern teams ideate, produce, and distribute at scale.

The Infrastructure Behind the Creativity

This year, Adobe revealed an architecture built for connected creativity. By rethinking the infrastructure of creation itself, MAX 2025 set the stage for workflows that are faster, smarter, and more responsive to how creative teams really work. Across tools, platforms, and partnerships, Adobe is laying the foundation for a creative ecosystem where models are interoperable, workflows are flexible, and teams of all sizes can produce more without losing sight of quality or context.