AI keeps moving fast. This week, we saw some big money flowing into Canadian infrastructure, new research showing which AI companies enterprises actually prefer, and fresh tools that let anyone build apps just by describing what they want. Plus, there’s the usual drama around AI-generated content and what that means for creative industries. Let’s dive into what’s happening.

Listen to the AI-Powered Audio Recap

This AI-generated podcast is based on our editor team’s AI This Week posts. We use advanced tools like Google NotebookLM, Descript, and ElevenLabs to turn written insights into an engaging audio experience. While the process is AI-assisted, our team ensures each episode meets our quality standards. We’d love your feedback, so let us know how we can make it even better.

🇨🇦 Canadian Innovation Spotlight

Canadian Data Center Expansion Signals Infrastructure Investment for AI Workloads

Quebec-based Qscale announced plans for a major data center development near Toronto, with projected investment ranging between $1.8 billion and $2.9 billion USD. The facility represents a significant infrastructure expansion targeting AI and high-performance computing demands in Canada’s largest metropolitan market.

The project builds on Qscale’s existing Quebec operations, where the company has developed a 142 MW campus in Lévis with HPE as an anchor customer. Construction on that initial facility began in 2021, providing the operational foundation for this Toronto expansion. The company has secured preliminary power validation from Hydro One, a critical milestone for large-scale data center development, though it has not yet settled on a specific site.

The financing shows substantial institutional backing for AI infrastructure. Qscale secured $320 million in funding from major Canadian financial institutions, including Desjardins Group and Scotiabank, alongside Export Development Canada. Additional investment from US operator Aligned Data Centers points to cross-border interest in Canadian AI infrastructure.

The Toronto facility announcement follows established patterns in AI infrastructure development, where proximity to major tech centers and access to reliable power supplies drive location decisions. Toronto’s position as Canada’s technology hub, combined with favourable regulatory environments and power availability, makes it attractive for AI workload deployment.

Industry context suggests this expansion reflects broader demand for specialized computing infrastructure. As AI models require increasingly sophisticated hardware configurations and power management, purpose-built facilities such as Qscale’s become essential for supporting enterprise AI deployment at scale.

📊 AI Research & Market Analysis

New Research Shows Enterprise Market Realignment in AI

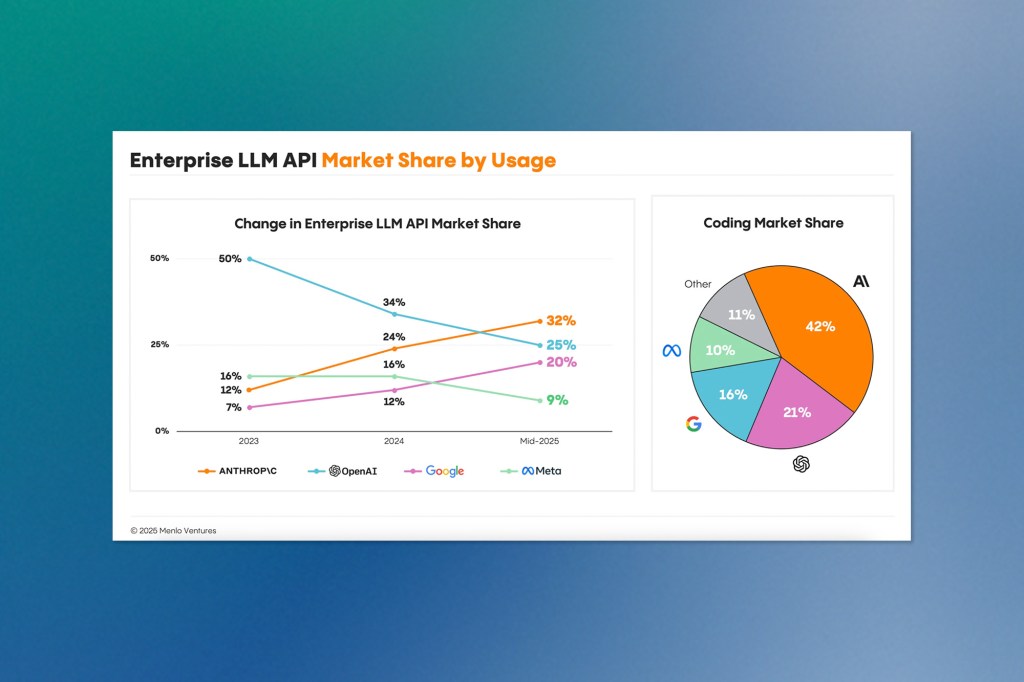

Menlo Ventures released fresh research revealing a dramatic shift in enterprise AI adoption patterns. Their comprehensive market analysis exposes how business preferences have fundamentally changed over the past two years, with implications that extend far beyond simple market share numbers.

The research demonstrates that Anthropic has achieved 32% of enterprise large language model usage, now leading the market ahead of OpenAI’s 25%. What makes this particularly noteworthy is the speed and scale of the transformation. The study tracked enterprise adoption patterns since 2023, showing OpenAI’s market position eroded from a dominant 50% share to its current quarter-share, while Anthropic climbed from just 12% to market leadership.

Perhaps most telling are the research’s findings on coding applications, where enterprise behaviour shows even stronger preferences. The data reveals Anthropic commands 42% of enterprise coding workflows, double OpenAI’s 21% presence in this crucial business function. This suggests that technical performance in development tasks has become a key differentiator for enterprise decision-makers.

The research methodology appears to track actual usage patterns rather than just procurement decisions, providing insight into how these tools perform in real business environments. The timing of product releases relative to market movement offers additional support: the study links Anthropic’s momentum directly to Claude 3.5 Sonnet’s launch in June 2024, with Claude 3.7 Sonnet’s February 2025 release further accelerating the trend.

The study also illuminates broader enterprise technology preferences, finding that over half of businesses avoid open-source AI models entirely. The research tracked a decline in open-source enterprise adoption from 19% at the start of 2025 to just 13% by mid-year, suggesting that business requirements increasingly favour commercial solutions with formal support structures.

These findings align with industry observations but provide the first quantitative framework for understanding how enterprise AI adoption has evolved beyond early experimental phases toward production-focused deployment decisions.

⚙️ New Models & Tools

OpenAI Shifts Strategy with First Open-Weight Models Since 2019

OpenAI released its first open-weight language models in nearly six years, marking a significant strategic pivot in response to competitive pressure from open-source alternatives. The company introduced gpt-oss models in two configurations, 120 billion and 20 billion parameters, both designed to run on local hardware rather than through OpenAI’s API. According to OpenAI, the larger model fits on a single 80 GB GPU, while the smaller one targets consumer devices with 16 GB of memory, such as high-end laptops.

The models represent OpenAI’s entry into the open-weight ecosystem that has been dominated by Meta’s Llama series and recently disrupted by DeepSeek’s efficient architectures. According to OpenAI’s own testing, the larger model performs close to the proprietary o4-mini on core reasoning benchmarks and the smaller one is comparable to o3-mini, while both remain free to download and modify.

The timing reflects mounting competitive pressure following DeepSeek’s viral breakthrough, which demonstrated strong performance at significantly lower computational cost. OpenAI’s previous business model relied heavily on API access to proprietary models hosted on the company’s infrastructure, making this open-weight release a notable departure from established revenue patterns.

CEO Sam Altman positioned the release as enabling broader research access and product innovation, suggesting OpenAI believes ecosystem expansion will ultimately benefit the company despite potential revenue cannibalization. The models focus exclusively on text generation and reasoning tasks, omitting image or video capabilities that remain exclusive to OpenAI’s commercial offerings.

Safety considerations prompted an extensive pre-release evaluation, particularly in biosecurity applications, where open-weight models present oversight challenges compared to hosted systems. OpenAI claims the models perform comparably to frontier systems on internal safety benchmarks while acknowledging inherent risks in releasing powerful capabilities beyond direct company control.

The strategic shift indicates a recognition that the momentum of open-source development may be unstoppable, making participation preferable to isolation. However, the company retains exclusive control over its most advanced multimodal capabilities and training methodologies, suggesting a hybrid approach that balances competitive positioning with revenue protection.

This release fundamentally alters the competitive landscape by bringing OpenAI’s reasoning capabilities to the open-weight ecosystem, potentially accelerating innovation while intensifying pressure on other proprietary model providers to reconsider their distribution strategies.

GPT-5 Arrives as Unified Model Combining Speed and Reasoning

OpenAI unveiled GPT-5 on Thursday, positioning it as the company’s first “unified” model that merges the reasoning capabilities of its o-series with the rapid response times of traditional GPT systems. The release represents OpenAI’s most significant product launch since ChatGPT’s debut, with CEO Sam Altman claiming it constitutes “the best model in the world” and a substantial step toward artificial general intelligence.

The model introduces an automated routing system that dynamically determines whether to provide quick responses or engage extended reasoning based on query complexity, eliminating the need for users to manually select appropriate model settings. This architectural approach enables GPT-5 to function more like an autonomous agent, capable of completing complex tasks such as software development, calendar management, and research compilation, rather than simply answering questions.
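To make the routing idea concrete, here is a minimal sketch of a router that sends each query down a fast path or a reasoning path based on a crude complexity score. OpenAI has not published its router in this form. The scoring heuristic, the threshold, and every name below (`REASONING_HINTS`, `estimate_complexity`, `route`) are hypothetical and chosen only for illustration.

```python
# Hypothetical sketch of complexity-based routing; not OpenAI's actual router.
REASONING_HINTS = ("prove", "debug", "step by step", "plan", "analyze")


def estimate_complexity(query: str) -> float:
    """Return a crude score in [0, 1] from query length and keyword hints."""
    text = query.lower()
    # Longer queries score higher, capped at 50 words.
    length_score = min(len(text.split()) / 50, 1.0)
    # Any hint phrase (matched as a substring) suggests multi-step work.
    hint_score = 1.0 if any(hint in text for hint in REASONING_HINTS) else 0.0
    return 0.5 * length_score + 0.5 * hint_score


def route(query: str, threshold: float = 0.4) -> str:
    """Pick the 'fast' or 'reasoning' path for a query."""
    return "reasoning" if estimate_complexity(query) >= threshold else "fast"


print(route("What is the capital of Canada?"))                         # fast
print(route("Debug this function and explain the fix step by step."))  # reasoning
```

A production router would presumably rely on a learned classifier rather than keyword matching, but the control flow is the same: score the query, then choose a path so the user never has to.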

Performance benchmarks indicate that GPT-5 achieves competitive but not dominant results across various evaluation categories. On coding tasks using SWE-bench Verified, GPT-5 scored 74.9%, marginally outperforming Anthropic’s Claude Opus 4.1 at 74.5% and significantly ahead of Google’s Gemini 2.5 Pro at 59.6%. However, on Humanity’s Last Exam, GPT-5 Pro achieved 42% compared to xAI’s Grok 4 Heavy at 44.4%, indicating mixed performance across different skill domains.

The model demonstrates substantial improvements in accuracy and hallucination reduction compared to previous OpenAI systems. GPT-5 with thinking mode hallucinates in only 4.8% of responses, a dramatic improvement from o3’s 22% and GPT-4o’s 20.6% error rates. Healthcare applications show particularly strong performance, with hallucination rates dropping to just 1.6% on medical topics.
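For a sense of scale, the quoted rates can be turned into relative reductions. The short calculation below uses only the percentages reported above; the helper name `relative_reduction` is ours, and the result says nothing about how the underlying evaluations were run.

```python
def relative_reduction(old_rate: float, new_rate: float) -> float:
    """Fractional drop when moving from old_rate to new_rate."""
    return (old_rate - new_rate) / old_rate


GPT5_THINKING_RATE = 4.8  # percent of responses, as quoted above

for name, old_rate in {"o3": 22.0, "GPT-4o": 20.6}.items():
    drop = relative_reduction(old_rate, GPT5_THINKING_RATE)
    print(f"vs {name}: {drop:.0%} fewer hallucinated responses")
# vs o3: 78% fewer hallucinated responses
# vs GPT-4o: 77% fewer hallucinated responses
```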

OpenAI’s deployment strategy diverges from previous patterns by making GPT-5 available to all free ChatGPT users as their default model, marking the first time the company has provided reasoning capabilities without requiring a subscription. This democratization approach aligns with stated mission goals while potentially pressuring competitors to reconsider their pricing structures.

Developer access includes three API variants (gpt-5, gpt-5-mini, and gpt-5-nano), with gpt-5 priced at $1.25 per million input tokens and $10 per million output tokens. Coming in the same week as the open-weight gpt-oss models, the release suggests OpenAI is pursuing a dual strategy of broad accessibility while maintaining competitive advantages in frontier capabilities.
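For budgeting purposes, the quoted per-token rates translate into workload costs as follows. This is a back-of-the-envelope sketch: the function name `estimate_cost` and the workload figures are hypothetical, and actual bills may differ with caching discounts or other pricing details not covered here.

```python
# Rates quoted above, in USD per million tokens.
INPUT_PRICE_PER_M = 1.25
OUTPUT_PRICE_PER_M = 10.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of a workload at the quoted rates."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


# Hypothetical workload: 10,000 requests, each with
# 2,000 input tokens and 500 output tokens.
requests = 10_000
cost = estimate_cost(2_000 * requests, 500 * requests)
print(f"${cost:.2f}")  # $75.00
```

In this example output tokens account for two thirds of the bill despite being a fifth of the volume, which is why response length tends to dominate cost planning.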

Google DeepMind Advances AI Capabilities with Multi-Agent Systems and World Models

Google DeepMind unveiled two significant research developments this week, demonstrating parallel approaches to advancing artificial intelligence capabilities through specialized architectures and training environments.

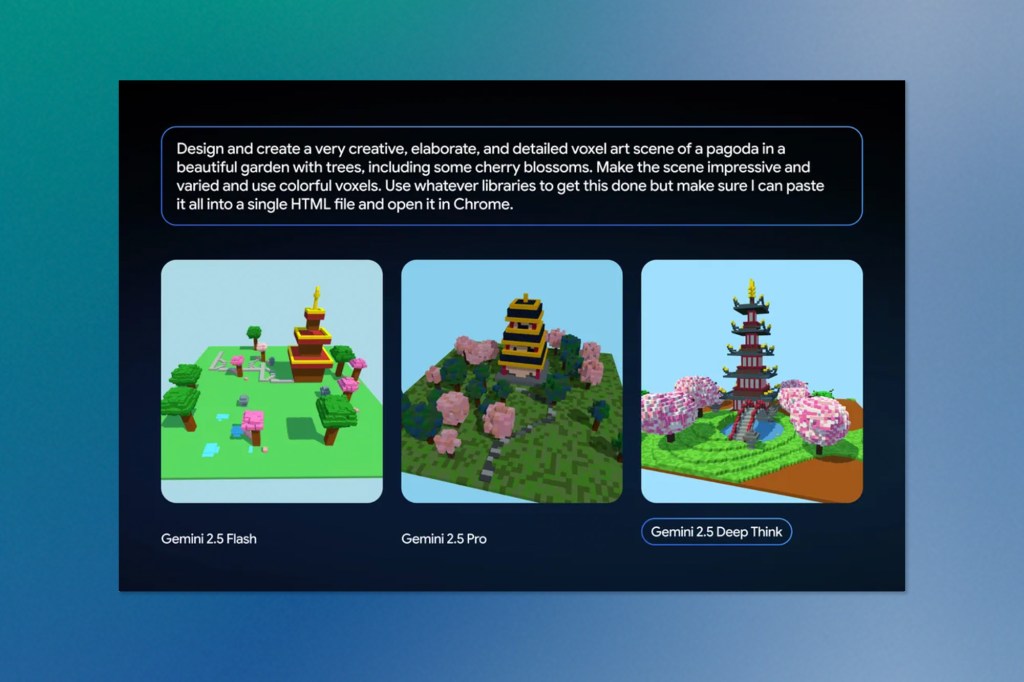

The company launched Gemini 2.5 Deep Think, its first publicly available multi-agent system that deploys multiple AI agents simultaneously to tackle problems from different angles before synthesizing optimal solutions. Available through Google’s premium Ultra subscription at $250 per month, the system represents a fundamental shift toward parallel processing architectures that trade computational intensity for solution quality.
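The general pattern of running several solvers in parallel and then selecting among their answers can be shown with a toy example. The sketch below is not how Deep Think works internally, which Google has not detailed here. The three "agents" are ordinary numeric routines that approximate a square root, and all names (`bisection_agent`, `newton_agent`, `rough_guess_agent`, `synthesize`, `solve`) are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def bisection_agent(target: float) -> float:
    """Approximate sqrt(target) by repeatedly halving a search interval."""
    lo, hi = 0.0, max(1.0, target)
    for _ in range(40):
        mid = (lo + hi) / 2
        if mid * mid < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def newton_agent(target: float) -> float:
    """Approximate sqrt(target) with a few Newton iterations."""
    x = target
    for _ in range(6):
        x = 0.5 * (x + target / x)
    return x


def rough_guess_agent(target: float) -> float:
    """A deliberately weak agent: a single linear guess."""
    return target / 2 + 0.5


def synthesize(target: float, candidates: list[float]) -> float:
    """Keep the candidate whose square lands closest to the target."""
    return min(candidates, key=lambda x: abs(x * x - target))


def solve(target: float) -> float:
    agents = [bisection_agent, newton_agent, rough_guess_agent]
    # Run every agent concurrently, then pick the best answer.
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        candidates = list(pool.map(lambda agent: agent(target), agents))
    return synthesize(target, candidates)


print(round(solve(2.0), 6))  # 1.414214
```

The trade-off mirrors the one described above: every agent's work is paid for, but only the best candidate is returned.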

Performance benchmarks reveal substantial advantages over traditional single-agent approaches. On Humanity’s Last Exam, Gemini 2.5 Deep Think achieved 34.8% accuracy compared to competing models scoring in the 20-25% range. Coding performance showed even more pronounced improvements, with Google’s system reaching 87.6% on LiveCodeBench 6 while rivals achieved scores in the 70s.

Complementing this reasoning advancement, DeepMind introduced Genie 3, positioning it as foundational infrastructure for training general-purpose AI agents. The world model generates interactive 3D environments that last several minutes at 720p resolution and 24 frames per second, representing substantial improvements over predecessor systems, which were limited to 10-20 second sessions.

Genie 3’s architecture emphasizes temporal consistency through memory-based generation, enabling emergent understanding of physics without explicit programming of physical laws. The system demonstrated its potential through integration with DeepMind’s SIMA agent platform, where AI agents successfully completed navigation tasks in simulated warehouse environments while maintaining environmental coherence.

However, both systems face significant limitations. Multi-agent processing requires substantial computational resources, leading to premium pricing tiers, while Genie 3 struggles with complex physics interactions and supports only limited agent action ranges. Current world model sessions of several minutes fall short of the hours required for comprehensive agent training.

These developments reflect DeepMind’s dual strategy for advancing toward artificial general intelligence: enhancing reasoning capabilities through parallel agent architectures while simultaneously creating more sophisticated training environments for embodied AI systems. Both approaches address different bottlenecks in current AI development, suggesting convergence around specialized architectures optimized for distinct problem domains.

GitHub Spark Democratizes App Creation Through Natural Language Programming

GitHub launched public preview access to Spark, expanding its micro-app creation platform to all Copilot Pro+ subscribers. The tool represents GitHub’s entry into the emerging category of natural language programming environments, targeting hobbyists and non-professional developers who want to build functional applications without traditional coding expertise.

Spark’s architecture combines three integrated components designed to eliminate the traditional friction of development. Users describe application concepts in plain English through a natural language editor, while a managed runtime environment handles hosting, data storage, and AI model integration automatically. A progressive web app dashboard enables cross-device management and deployment with single-click publishing capabilities.

The platform leverages Claude Sonnet 4’s reasoning capabilities for initial code generation, then allows users to incorporate additional AI models from OpenAI, Meta, DeepSeek, and xAI for enhanced functionality. This multi-model approach enables developers to compare how different AI systems interpret identical requirements, potentially improving application quality through model diversity.

Development workflow innovations focus on reducing barriers to experimentation. Interactive previews display code execution in real-time as users type descriptions, while variant generation creates multiple implementation options for single requests. Automatic version history with one-click restoration encourages exploratory development without fear of breaking existing functionality.

The platform targets use cases that traditional development processes might not justify economically. Internal workflow automation, niche utilities, and temporary applications become viable when creation time drops from days or weeks to minutes or hours. Users can upload mockups, sketches, or documentation to guide development, bridging the gap between conceptual ideas and functional implementations.

Spark joins a growing ecosystem of natural language development tools, including Google’s recently announced Opal, alongside existing platforms such as Bolt.new and Lovable.dev. This convergence suggests the emergence of a new development paradigm where application creation becomes accessible to domain experts without programming backgrounds, potentially expanding the pool of software creators beyond traditional developer communities.

xAI Releases Unrestricted Image Generator with Controversial Content Policies

xAI launched Grok Imagine, introducing an image and video generation system that deliberately takes a permissive approach to content moderation. The tool, available to SuperGrok and Premium+ subscribers on X’s iOS application, can produce 15-second videos with audio from text or image inputs.

The system’s defining characteristic is its “spicy mode” functionality, which allows the generation of sexually explicit content, including partial nudity. This positions Grok Imagine as distinctly different from competitors like OpenAI’s DALL-E, Google’s Imagen, or other mainstream generators that implement stricter content filtering. The permissive approach aligns with Elon Musk’s broader positioning of Grok as an “unfiltered” alternative to existing AI systems.

Technical capabilities appear competitive with established players, generating images within seconds and offering automatic, continuous generation as users scroll through results. The interface supports both static image creation and video animation features, with the system converting still images into short-form video content.

However, content quality reveals the typical challenges facing newer image-generation models. Human figures often exhibit characteristics associated with the “uncanny valley” effect, displaying artificial-looking skin textures and facial features that appear somewhat cartoonish compared to photorealistic standards achieved by more mature systems.

The platform implements selective restrictions despite its permissive overall approach. Celebrity-based prompts encounter additional filtering, with attempts to generate inappropriate content featuring public figures being blocked or redirected toward more conventional outputs.

🌎 Industry Moves

Fashion Industry Embraces AI-Generated Models Amid Growing Controversy

The fashion industry’s adoption of AI-generated models reached a new visibility milestone this week when Guess featured digitally created figures in advertisements appearing in American Vogue’s August issue. The campaign, developed by London-based agency Seraphinne Vallora, generated significant public discussion after gaining viral attention on social media platforms.

The implementation reveals how AI modelling has evolved beyond simple digital creation. The process involves real models photographed in studio settings to understand how garments drape and move, with that reference material then informing the generation of synthetic figures. This hybrid approach aims to maintain product authenticity while leveraging AI’s scalability advantages.

Several major brands have quietly integrated AI models into their marketing. Mango launched campaigns aimed at teenagers, Levi’s tested synthetic models with the stated aim of increasing diversity, and H&M created “AI twins” of real models that can appear in several campaigns at once, with the rights to each twin staying with the original model.

Industry practitioners suggest that widespread adoption may already be occurring without public disclosure, as there are no legal requirements mandating the disclosure of AI model usage in advertising. This invisible integration has created concerns among modelling professionals about job displacement and wage pressure, particularly as computational costs decrease and generation quality improves.

The debate also extends to representation. Critics argue AI models may reinforce narrow beauty standards and allow brands to simulate diversity without genuine inclusion. Because these synthetic figures often display conventionally attractive features, they risk setting even more unrealistic standards for comparison.

However, proponents contend that AI modelling serves supplementary rather than replacement functions. They point to continued investment in traditional photography alongside AI tools, suggesting the technology enables expanded creative output rather than workforce reduction. The fashion industry’s global scale and rapid production cycles make AI particularly attractive for brands seeking to maintain visual consistency across multiple markets and product lines.
