The race to integrate AI into every aspect of digital life accelerated this week. Canada made a power play for AI sovereignty, Microsoft turned browsers into AI assistants, Google reimagined search results, and a math-focused startup claimed to solve hallucinations entirely. Plus, thousands of data scientists are heading to Toronto to figure out what their jobs even look like anymore. Let’s dive in.

Listen to the AI-Powered Audio Recap

This AI-generated podcast is based on our editorial team’s AI This Week posts. We use tools such as Google NotebookLM, Descript, and ElevenLabs to turn written insights into an audio recap. While the process is AI-assisted, our team reviews each episode against our quality standards. We’d love your feedback: let us know how we can make it even better.

🇨🇦 Canadian Innovation Spotlight

Bell Canada and Cohere Form Strategic AI Partnership

Canadian telecommunications leader Bell Canada has partnered with enterprise AI company Cohere to create a fully sovereign artificial intelligence platform for government and business customers. The collaboration addresses growing demand for advanced AI capabilities while ensuring Canadian data never leaves national borders.

The partnership centers on Bell’s AI Fabric ecosystem, which will now incorporate Cohere’s enterprise-grade language models and North agentic AI platform. This four-tier architecture spans everything from hardware infrastructure and Canada’s largest fibre network to professional services and AI applications, all protected by comprehensive cybersecurity measures.

For customers, the deal means access to turnkey AI solutions without the complexity of managing underlying infrastructure. Government agencies and enterprises can deploy AI agents and automation tools through Cohere’s North platform while maintaining strict data residency requirements within Canada.

Bell plans to implement North internally as well, enabling employees to build custom AI agents using the company’s proprietary data. This internal deployment will also enhance the managed services that Bell’s Ateko brand provides to enterprise clients.

The partnership reflects Canada’s push to establish sovereign AI capabilities that can compete globally while maintaining domestic control over sensitive data. With Bell serving as Cohere’s preferred Canadian infrastructure provider and Cohere becoming Bell’s primary AI solution partner, the collaboration creates a foundation for Canadian organizations to adopt cutting-edge AI without compromising security or data sovereignty.

Data Science Leaders Converge on Toronto for KDD-2025

The global data science community is preparing to descend on Toronto next week for KDD-2025, the premier conference where academic research meets real-world AI deployment. Running from August 3 to 7 at the Toronto Convention Centre, the event brings together researchers, tech leaders, and practitioners from companies such as Apple, Google DeepMind, Cohere, Amazon, and Cisco to tackle the evolving challenges of modern data science.

This year’s conference arrives at a pivotal moment for the field. The rapid advancement of generative AI has fundamentally altered how data scientists work, shifting the focus from traditional structured data analysis to managing fluid, conversational AI systems that generate complex outputs in real time.

The changing landscape has redefined the data scientist’s role. Rather than building models from scratch, professionals increasingly fine-tune large foundation models, raising questions about accessibility and control in an industry dominated by major tech companies. Meanwhile, AI tools are assuming decision-making responsibilities previously reserved for humans, creating new demands for professionals who can design safe and ethical systems.

KDD-2025’s program reflects these shifts through six specialized tracks covering finance, healthcare, responsible AI, Canadian AI developments, AI in science, and AI reasoning. The conference emphasizes practical deployment challenges, featuring case studies from retail companies that utilize generative AI for personalization and hospitals that implement predictive models for patient care.

A highlight of the week will be hands-on tutorials, including a highly anticipated session on evaluating AI agents in enterprise environments. Led by SAP researcher Dr. Reza Mohammadi, the tutorial addresses a critical gap in AI development: measuring whether autonomous systems actually accomplish their intended goals.

The conference also dedicates significant attention to governance and oversight, with panels featuring regulators, ethicists, and civil society leaders discussing responsible AI development. This focus on “innovation with accountability” underscores the industry’s growing recognition that technical advancement must be paired with careful consideration of societal impact.

For attendees, KDD-2025 offers access to what organizers call “decades of hard-won wisdom about working with actual data in messy reality” – exactly the kind of practical knowledge needed to navigate today’s rapidly evolving AI landscape.

⚙️ New Models & Tools

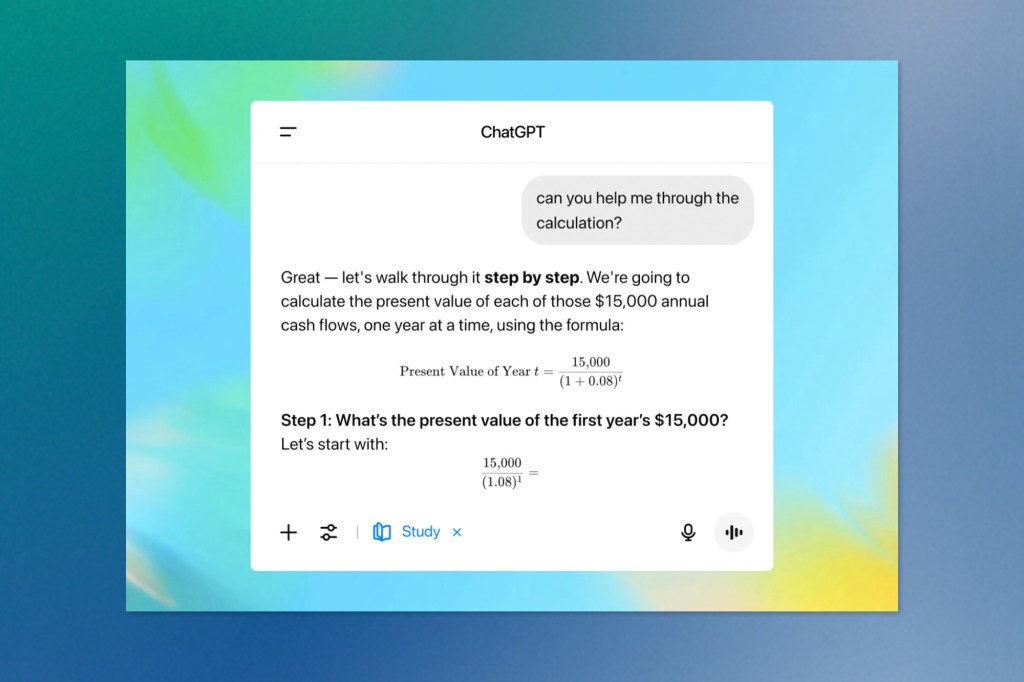

OpenAI Launches Study Mode for Educational AI Interactions

OpenAI has introduced Study Mode in ChatGPT, a new learning-focused interface designed to guide students through problems step-by-step rather than simply providing answers. The feature addresses growing concerns about AI’s role in education by emphasizing understanding over completion, utilizing pedagogical principles developed in collaboration with teachers and learning science experts.

Study Mode employs interactive prompts that combine Socratic questioning, hints, and self-reflection exercises to encourage active learning. Instead of delivering direct solutions, the system asks calibrating questions to assess skill level and provides scaffolded responses that break complex topics into manageable sections. The approach aims to develop deeper comprehension while managing cognitive load.

The feature includes personalized support that adapts to individual skill levels based on conversation history, along with knowledge checks through quizzes and open-ended questions. Students can toggle Study Mode on and off during conversations, providing flexibility to match different learning objectives within the same session.

OpenAI developed the feature with college students as the primary audience, incorporating feedback from early testing that compared the experience to having “24/7 office hours” with an always-available tutor. Students reported success in mastering challenging concepts like sinusoidal positional encodings and game theory through extended learning sessions.

The launch comes as educational institutions grapple with AI’s growing presence in academic settings. Study Mode represents an attempt to channel AI use toward genuine learning rather than shortcutting assignments, though OpenAI acknowledges this is just the first step in a longer development process.

Study Mode is currently implemented through custom system instructions, and OpenAI plans to integrate these behaviours directly into future models based on user feedback. The company is also exploring enhanced visualizations, goal setting across conversations, and deeper personalization features, while conducting research in partnership with Stanford’s SCALE Initiative to study AI’s impact on learning outcomes.

Study Mode is available to all ChatGPT users across the Free, Plus, Pro, and Team tiers, with ChatGPT Edu access coming in the next few weeks.

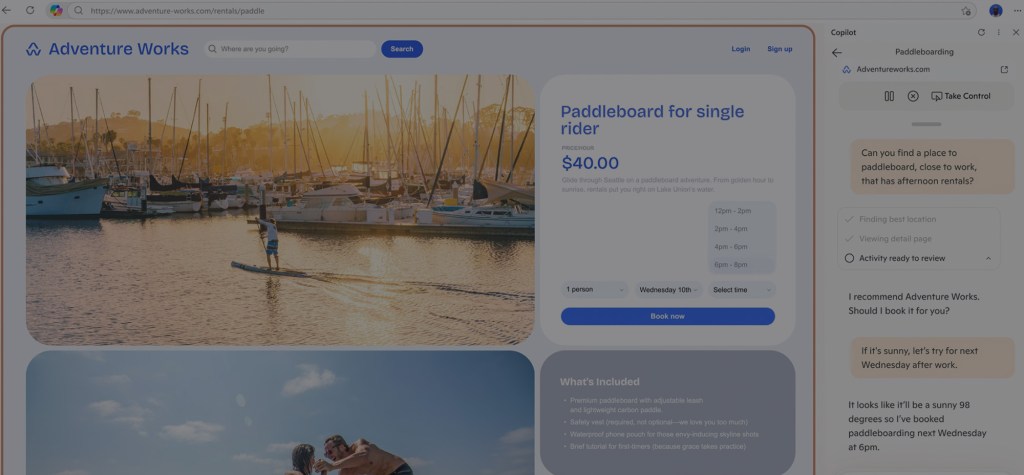

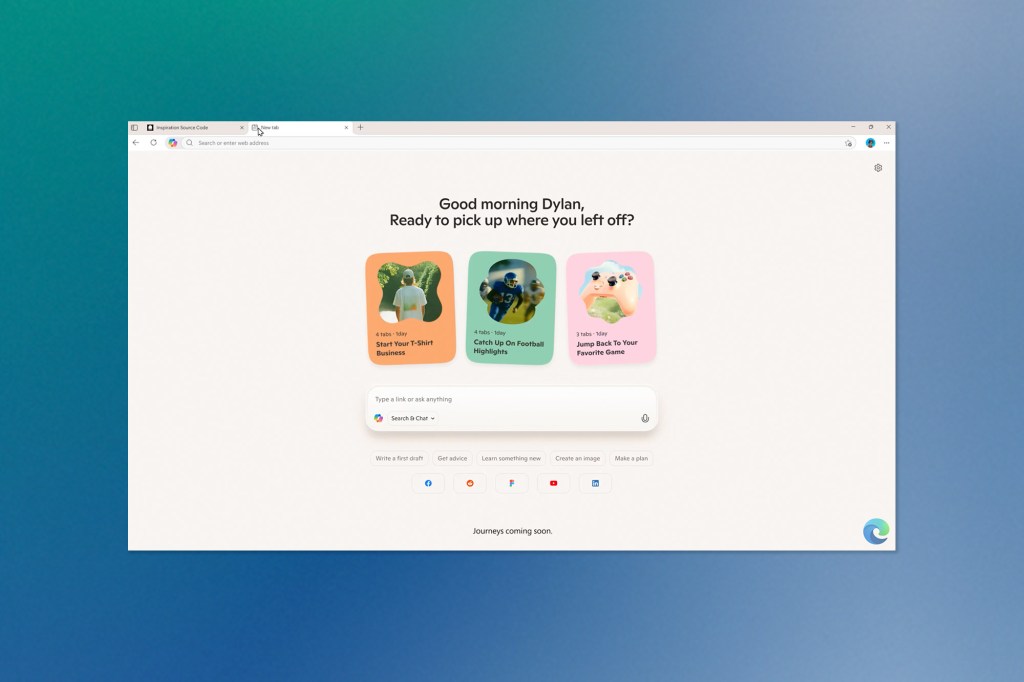

Microsoft Edge Tests New AI-Powered Copilot Mode

Microsoft has begun rolling out an experimental feature that builds AI assistance into Edge’s browsing experience. Copilot Mode integrates the company’s AI assistant directly into the browser, enabling it to analyze content across multiple open tabs and perform complex tasks on behalf of users.

The new functionality sits between Google’s limited Gemini integration in Chrome and the comprehensive AI overhaul offered by specialized browsers such as Comet. Users can grant Copilot access to view all their open tabs, allowing the AI to compare hotel options, summarize product information across multiple pages, or weigh several purchasing decisions at once.

Beyond tab analysis, Copilot Mode introduces voice navigation capabilities for finding information on websites and opening relevant tabs for comparison. Microsoft plans to expand the feature’s capabilities by allowing Copilot to access browser history and saved credentials, potentially enabling the AI to handle tasks like making restaurant reservations without direct user intervention.

The integration builds on Microsoft’s existing Copilot presence in Edge and leverages work from the Copilot Vision project. The AI assistant will appear in multiple locations throughout the browser, including the address bar and new tab page, creating what Microsoft describes as “topic-based journeys” that organize browsing activities.

Microsoft emphasizes that Copilot Mode remains entirely optional. Users can disable the feature through Edge settings and continue using the browser as before. The company frames this as an experimental offering that will evolve based on user feedback and usage patterns.

Copilot Mode is currently free with certain usage limitations, but Microsoft has signaled that it may eventually require a subscription. This approach aligns with the company’s broader strategy of monetizing advanced AI features while initially offering them at no cost to encourage adoption and gather user data.

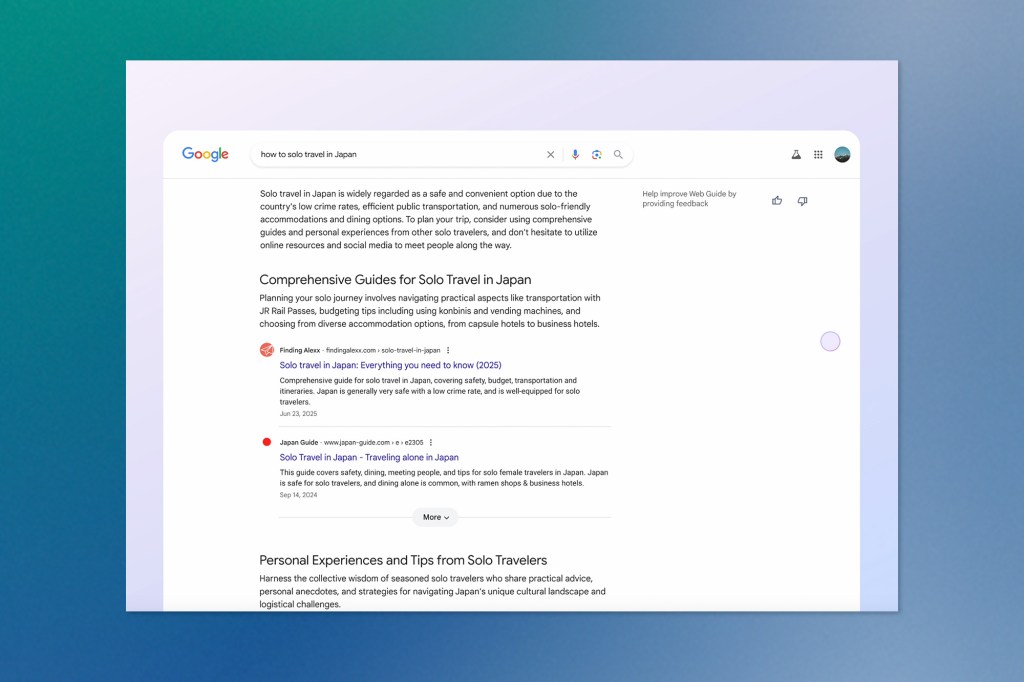

Google Introduces Web Guide for AI-Organized Search Results

Google has launched Web Guide, a new Search Labs experiment that uses artificial intelligence to restructure how search results are presented to users. The feature employs a customized version of Gemini to analyze both search queries and web content, then organizes results into logical groupings that address different aspects of a user’s question.

Rather than displaying traditional ranked lists, Web Guide clusters related web pages into categories that correspond to specific elements of the search query. This approach aims to help users discover relevant pages they might otherwise overlook in conventional search results. The system operates using a “query fan-out” technique, simultaneously executing multiple related searches to identify the most pertinent content.
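The fan-out idea can be illustrated with a minimal sketch. This is not Google’s implementation: the `search` function, the `FAKE_INDEX` data, and the list of aspects are all hypothetical stand-ins, and a real system would derive the sub-queries with a model rather than by string concatenation. The sketch only shows the general shape of running several related searches at once and grouping the results by the aspect of the question they address.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory "index" standing in for a real search backend.
FAKE_INDEX = {
    "japan solo travel itinerary": ["10-day Japan itinerary", "Tokyo on your own"],
    "japan solo travel safety": ["Safety tips for solo travellers"],
    "japan solo travel budget": ["Japan on $80 a day", "10-day Japan itinerary"],
}


def search(sub_query):
    """Hypothetical search call: return page titles for one sub-query."""
    return FAKE_INDEX.get(sub_query, [])


def fan_out(query, aspects):
    """Run one search per aspect concurrently and group results by aspect."""
    sub_queries = [f"{query} {aspect}" for aspect in aspects]
    with ThreadPoolExecutor(max_workers=len(sub_queries)) as pool:
        # pool.map returns results in the same order as sub_queries.
        result_lists = list(pool.map(search, sub_queries))
    return dict(zip(aspects, result_lists))


groups = fan_out("japan solo travel", ["itinerary", "safety", "budget"])
for aspect, pages in groups.items():
    print(f"{aspect}: {pages}")
```

Each key in `groups` corresponds to one cluster of pages, which is roughly how a grouped results page differs from a single ranked list.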

The feature shows particular strength with open-ended queries and complex, multi-sentence searches. Examples include broad topics like planning solo travel to Japan or detailed requests about maintaining family relationships across time zones. By breaking down these complex queries into organized sections, Web Guide presents information in a more digestible format.

Initially, Web Guide is available exclusively to users who have opted into Search Labs experiments through the Web tab interface. Users can easily toggle between the AI-organized results and standard search presentation at any time. Google plans to gradually expand the feature’s presence across other parts of Search, including the main “All” results tab, as the company gathers feedback and identifies optimal use cases.

Plus, Expanded AI Features Across Photos and NotebookLM

Google’s experimentation with AI-organized search results through Web Guide represents just one piece of the company’s broader push to integrate artificial intelligence across its entire product ecosystem. This same week, Google rolled out significant AI-powered updates to two other major consumer applications. Google Photos now transforms still images into six-second animated videos using the company’s Veo 2 AI model, while NotebookLM gained video capabilities to complement its popular audio overviews.

The Photos update introduces a “Photo to Video” feature that brings still images to life with natural-looking motion. Users can choose between “subtle movements” that add gentle animation to people in photos, or an “I’m feeling lucky” option that might add elements like confetti or other dynamic effects. The feature leverages the same Veo 2 model used across YouTube and Gemini, positioning Google Photos as the first mainstream photo app to embed AI video generation.

Alongside the animation tool, Google is rolling out “Remix” in the coming weeks, which transforms existing photos into different artistic styles, including comic book panels, anime illustrations, 3D renderings, and pencil sketches. Both features will live in a new “Create” tab that serves as a hub for AI-powered photo tools, with all generated content carrying visible AI labels and invisible SynthID watermarks.

Meanwhile, NotebookLM received its own video upgrade with the launch of Video Overviews. These narrated slide presentations serve as visual alternatives to the platform’s popular Audio Overviews, incorporating images, diagrams, and data from uploaded documents to explain complex concepts. Users can customize Video Overviews by specifying focus topics, learning goals, and target audiences.

The NotebookLM update also includes a redesigned Studio panel that allows users to create multiple outputs of the same type within a single notebook. This enables scenarios like generating Audio Overviews in different languages for global accessibility or creating role-specific content for team collaboration.

Harmonic Launches AI Math Chatbot Claiming Zero Hallucinations

Harmonic, the AI startup co-founded by Robinhood CEO Vlad Tenev, has released a beta version of its mobile chatbot app featuring Aristotle, an AI model designed specifically for mathematical reasoning. The company makes the ambitious claim that Aristotle delivers completely accurate answers within its supported domains, addressing one of the most persistent challenges facing current AI systems.

The startup positions itself as pursuing “mathematical superintelligence,” with plans to eventually support all math-dependent fields, including physics, statistics, and computer science. According to CEO Tudor Achim, Aristotle represents the first publicly available AI system that both performs reasoning and formally verifies its outputs before presenting them to users.

Harmonic’s approach to ensuring accuracy involves having Aristotle generate responses using Lean, an open-source programming language designed for mathematical proofs. Before delivering answers, the system validates solutions through algorithmic verification processes that operate independently of AI, similar to verification methods used in critical applications like medical devices and aviation systems.
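For readers unfamiliar with Lean, the short Lean 4 example below gives a sense of what machine-checked mathematics looks like. It is purely illustrative and is not taken from Harmonic or Aristotle; the theorem name `add_comm_example` is made up for this sketch. The point is that the proof checker, not a language model, decides whether each statement is accepted.

```lean
-- A statement and its proof. Lean rejects the file if the proof
-- does not actually establish the statement.
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- A concrete arithmetic fact, verified by computation.
example : 2 + 2 = 4 := rfl
```

If either proof were wrong, for instance claiming `2 + 2 = 5`, the checker would report an error instead of accepting it.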

The company highlights Aristotle’s gold-medal performance on the 2025 International Math Olympiad, achieved in a formal setting where the problems were first converted to a machine-readable format. Google and OpenAI also achieved gold-medal results this year, but their models were tested on the natural-language problems, which is a different evaluation approach.

The app launch follows Harmonic’s recent $100 million Series B funding round led by Kleiner Perkins, valuing the company at $875 million. The startup plans to expand access through enterprise APIs and consumer web applications, building on the current mobile beta release.
