OpenAI released its biggest update yet this week with ChatGPT agent, a system that can independently handle complex tasks using its own virtual computer. The launch headlines a week packed with industry drama, from a spectacular $3 billion deal collapse that triggered a 72-hour feeding frenzy, to new development tools, browser wars, and platform integrations.

OpenAI Launches ChatGPT Agent with Unified Computer Control

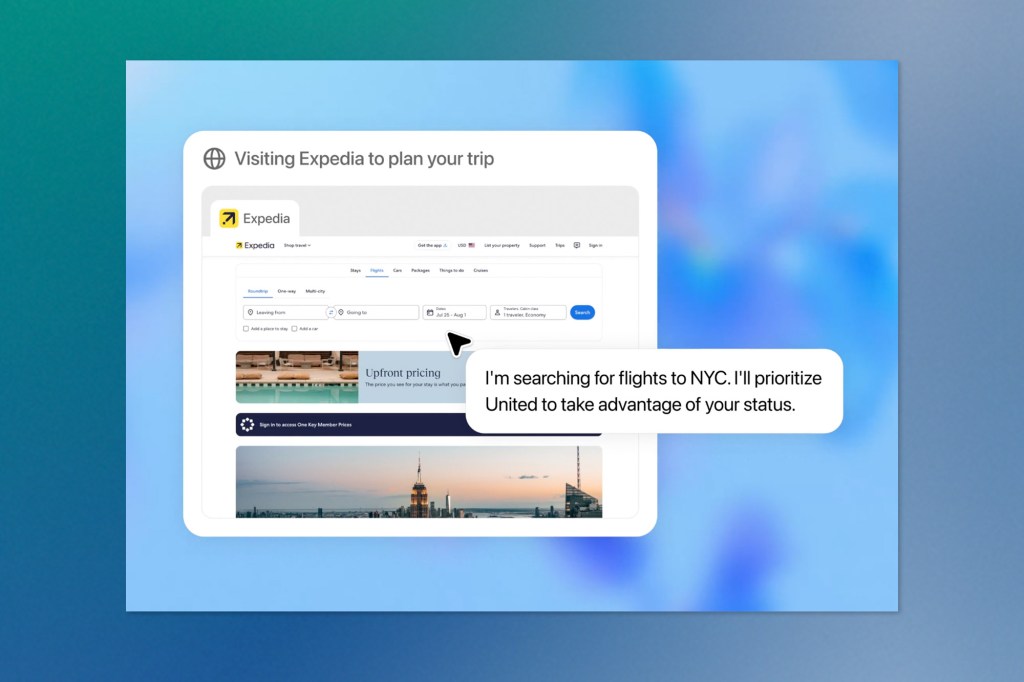

OpenAI has unveiled ChatGPT agent, a significant evolution that combines the capabilities of its previous Operator and deep research tools into a unified system capable of autonomous task completion. The new agent can handle complex, multi-step workflows by using its own virtual computer to navigate websites, analyze data, create presentations, and execute real-world tasks from start to finish.

The agent represents a major advancement in AI autonomy, equipped with multiple tools including visual and text-based browsers, terminal access, and direct API connections. Users can request tasks such as “find and book a conference room for next Tuesday’s client meeting, then send calendar invites” or “plan a weekend trip to Portland, book flights and hotels within my budget,” and the agent will intelligently select the appropriate tools and methods to complete each step. It can seamlessly transition between different interfaces, downloading files, manipulating data through command line operations, and presenting results in editable formats.

A key design principle emphasizes user control and collaboration. The agent requests permission before taking consequential actions and allows users to interrupt, redirect, or take over the browser at any point. Users receive real-time narration of the agent’s activities and can modify instructions mid-task without losing previous progress. For lengthy operations, the system sends mobile notifications upon completion.

The performance benchmarks demonstrate substantial improvements across multiple domains. On Humanity’s Last Exam, measuring expert-level knowledge across subjects, the ChatGPT agent achieved 41.6% accuracy in single attempts and 44.4% when running multiple parallel attempts. On FrontierMath, featuring complex mathematical problems that typically require hours or days for expert mathematicians to solve, the agent reached 27.4% accuracy with tool access.

Particularly impressive results emerged in practical business applications. On an internal benchmark evaluating economically valuable knowledge work tasks, the ChatGPT agent matched or exceeded human performance in roughly half the cases across various completion timeframes. The system significantly outperformed existing models on data science tasks, spreadsheet manipulation, and investment banking modeling exercises.

The expanded capabilities introduce new security considerations, particularly around prompt injection attacks, where malicious websites could attempt to manipulate the agent’s behavior. OpenAI has implemented multiple safeguards, including training against adversarial prompts, requiring explicit confirmation for consequential actions, and providing secure browser takeover modes that don’t expose user credentials to the AI system.
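The confirmation safeguard can be pictured as a gate that sits between the agent's planned action and its execution. The sketch below is illustrative only and is not OpenAI's implementation: the `Action` type, the `CONSEQUENTIAL` set, and the `execute` function are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical categories of actions that need explicit user approval.
CONSEQUENTIAL = {"purchase", "send_email", "submit_form"}


@dataclass
class Action:
    kind: str    # e.g. "read_page" or "purchase"
    detail: str  # human-readable description shown to the user


def execute(action: Action, confirm: Callable[[Action], bool]) -> str:
    """Run an action, asking the user first if it is consequential.

    The gate applies no matter where the instruction came from, so text
    injected by a web page cannot skip the confirmation step.
    """
    if action.kind in CONSEQUENTIAL and not confirm(action):
        return "blocked"
    return "executed"
```

In this sketch, reading a page proceeds without a prompt, while a purchase only runs if the `confirm` callback returns `True`.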

Given the agent’s enhanced capabilities, OpenAI has classified it as “High Biological and Chemical capabilities” under its Preparedness Framework, implementing its most comprehensive safety measures to date. This includes specialized refusal training, continuous monitoring systems, and collaboration with external biosecurity experts.

The feature launches today for Pro, Plus, and Team subscribers, with Pro users receiving 400 monthly messages and other paid tiers getting 40 messages per month. The rollout will extend to Enterprise and Education users in the coming weeks, though availability in the European Economic Area and Switzerland remains pending.

OpenAI’s Busy Week Continues

Windsurf Acquisition Falls Through

OpenAI’s planned $3 billion acquisition of AI coding startup Windsurf collapsed this week, and Google DeepMind immediately moved in to hire the startup’s key leadership in a $2.4 billion reverse-acquihire. The timing reveals strategic tensions within the AI ecosystem: OpenAI’s deal reportedly became a friction point in its contract renegotiations with Microsoft. The exclusivity period on OpenAI’s offer expired Friday, clearing the way for Google to secure CEO Varun Mohan, co-founder Douglas Chen, and several top researchers. Cognition then quickly acquired the remaining Windsurf assets and team, combining its AI coding agent technology with Windsurf’s IDE capabilities.

Browser Development: “Aura” Project Advances

OpenAI continues developing its AI-powered web browser, internally codenamed “Aura.” Code traces discovered in ChatGPT reveal references including “is Aura” and “Aura Sidebar,” indicating active testing across desktop and mobile platforms. The Chromium-based browser will integrate OpenAI’s Operator AI agent for autonomous web tasks like reservation booking and form completion. Alongside browser development, OpenAI has enhanced ChatGPT’s image generation with preset visual styles, allowing users to select themes like Retro Cartoon or Anime without complex prompting.

Open Model Release Delayed Again

OpenAI has postponed its open-source model release indefinitely for the second time, with CEO Sam Altman citing ongoing safety evaluations. The model, originally planned for summer and later rescheduled for this week, would mark OpenAI’s first freely downloadable model in years. Altman emphasized that “once weights are out, they can’t be pulled back,” reflecting OpenAI’s cautious approach to open releases. The delay comes as competition intensifies, with Chinese startup Moonshot AI recently launching Kimi K2, a trillion-parameter model that outperforms GPT-4.1 on multiple benchmarks.

Google Launches GenAI Processors for Streamlined Gemini Development

Google DeepMind unveiled GenAI Processors, a new open-source Python library designed to simplify the development of complex AI applications using Gemini models. The library addresses a familiar challenge that developers face when building sophisticated LLM applications: managing the intricate web of data processing steps, asynchronous API calls, and custom logic that can quickly become unwieldy.

The core innovation lies in GenAI Processors’ stream-based architecture, which treats all input and output as asynchronous streams of standardized data parts called ProcessorParts. This approach enables seamless chaining of operations, from basic data manipulation to high-level model interactions, while maintaining consistency across different data types, including text, images, audio, and JSON.
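To make the stream-of-parts idea concrete, here is a minimal sketch in plain `asyncio`. It does not use the GenAI Processors library: the `Part` class is a hypothetical stand-in for ProcessorParts, and `source`, `uppercase_text`, `tag_json`, and `run_pipeline` are names invented for this example.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, AsyncIterator


@dataclass(frozen=True)
class Part:
    """Hypothetical stand-in for a standardized data part."""
    mimetype: str
    value: Any


async def source(items: list[Part]) -> AsyncIterator[Part]:
    """Emit parts one at a time as an asynchronous stream."""
    for item in items:
        await asyncio.sleep(0)  # yield control, as a real I/O source would
        yield item


async def uppercase_text(stream: AsyncIterator[Part]) -> AsyncIterator[Part]:
    """Transform text parts; pass every other part through unchanged."""
    async for part in stream:
        if part.mimetype == "text/plain":
            yield Part(part.mimetype, part.value.upper())
        else:
            yield part


async def tag_json(stream: AsyncIterator[Part]) -> AsyncIterator[Part]:
    """Annotate JSON parts; pass every other part through unchanged."""
    async for part in stream:
        if part.mimetype == "application/json":
            yield Part(part.mimetype, {**part.value, "seen": True})
        else:
            yield part


async def collect(stream: AsyncIterator[Part]) -> list[Part]:
    return [part async for part in stream]


def run_pipeline(items: list[Part]) -> list[Part]:
    """Chain the two steps: each consumes a stream and returns a stream."""
    return asyncio.run(collect(tag_json(uppercase_text(source(items)))))
```

Because every step takes a stream of parts and returns a stream of parts, steps can be chained in any order, and mixed content types travel through the same pipeline.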

A key strength of the library is its ability to optimize concurrent execution automatically. The system can process multiple parts simultaneously when their dependencies are satisfied, significantly reducing time to first token and improving overall responsiveness. This optimization happens transparently, allowing developers to focus on application logic rather than complex concurrency management.
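The effect of concurrent execution can be sketched with plain `asyncio`, under the simplifying assumption that the parts are independent of one another. This is not the library's scheduler: `slow_step`, `process_concurrently`, and `timed_run` are hypothetical helpers for illustration.

```python
import asyncio
import time
from typing import AsyncIterator


async def slow_step(part: str, delay: float = 0.1) -> str:
    """Simulate a slow per-part operation such as a remote call."""
    await asyncio.sleep(delay)
    return part.upper()


async def process_concurrently(parts: list[str]) -> AsyncIterator[str]:
    """Start work on every part immediately, then yield results in input order."""
    tasks = [asyncio.create_task(slow_step(p)) for p in parts]
    for task in tasks:
        yield await task


async def timed_run(parts: list[str]) -> tuple[list[str], float]:
    """Return the ordered results and the wall-clock time taken."""
    start = time.perf_counter()
    results = [r async for r in process_concurrently(parts)]
    return results, time.perf_counter() - start
```

With five parts at 0.1 seconds each, a sequential loop would need about half a second, while this version finishes in roughly the time of a single step and still preserves the input order.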

The library shines particularly in real-time applications. Google demonstrated how developers can build a “Live Agent” capable of processing audio and video streams in real time using the Gemini Live API with just a few lines of code. The modular design enables the easy composition of different components, combining camera streams, audio input, model processing, and output handling through simple operators.
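Composition through operators can be illustrated with a small wrapper class that overloads `+`. This is a sketch of the general pattern, not the library's own classes: `Stage`, `fake_camera`, and the two stage functions are hypothetical, and the choice of `+` as the chaining operator is an assumption made for the example.

```python
import asyncio
from typing import AsyncIterator, Callable

Stream = AsyncIterator[str]


class Stage:
    """Wrap a stream-to-stream function so stages can be chained with +."""

    def __init__(self, fn: Callable[[Stream], Stream]):
        self._fn = fn

    def __call__(self, stream: Stream) -> Stream:
        return self._fn(stream)

    def __add__(self, other: "Stage") -> "Stage":
        # The combined stage feeds this stage's output into the next one.
        return Stage(lambda stream: other(self(stream)))


async def fake_camera(n: int) -> Stream:
    """Hypothetical input source that emits n frame labels."""
    for i in range(n):
        yield f"frame-{i}"


async def _describe(stream: Stream) -> Stream:
    async for item in stream:
        yield f"description of {item}"


async def _shout(stream: Stream) -> Stream:
    async for item in stream:
        yield item.upper()


# Two stages composed into one pipeline with a single operator.
pipeline = Stage(_describe) + Stage(_shout)


async def run(n: int) -> list[str]:
    return [x async for x in pipeline(fake_camera(n))]
```

The composed `pipeline` is itself a `Stage`, so it can be combined further without the caller needing to know how many steps it contains.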

GenAI Processors integrates directly with the Gemini API through dedicated processors like GenaiModel for turn-based interactions and LiveProcessor for real-time streaming. This tight integration reduces boilerplate code and accelerates development while handling the complexities of the Live API automatically.

The library’s extensible design allows developers to create custom processors through inheritance or decorators, making it easy to integrate external APIs or specialized operations into existing pipelines. Google has provided comprehensive documentation through Colab notebooks and practical examples, including research agents and live commentary applications.
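The two extension routes, inheritance and decorators, can be sketched as follows. The code is a generic illustration and does not reproduce the library's base class or decorator: `BaseProcessor`, `Deduplicate`, `per_item`, and `lookup_external` are all hypothetical names.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable


class BaseProcessor:
    """Hypothetical base class: subclasses implement call() as an async generator."""

    def call(self, stream: AsyncIterator[str]) -> AsyncIterator[str]:
        raise NotImplementedError

    def __call__(self, stream: AsyncIterator[str]) -> AsyncIterator[str]:
        return self.call(stream)


class Deduplicate(BaseProcessor):
    """Inheritance route: a processor that keeps state across the stream."""

    async def call(self, stream):
        seen = set()
        async for item in stream:
            if item not in seen:
                seen.add(item)
                yield item


def per_item(fn: Callable[[str], Awaitable[str]]) -> BaseProcessor:
    """Decorator route: lift an async per-item function into a processor."""

    class _Wrapped(BaseProcessor):
        async def call(self, stream):
            async for item in stream:
                yield await fn(item)

    return _Wrapped()


@per_item
async def lookup_external(item: str) -> str:
    await asyncio.sleep(0)  # placeholder for a call to an external API
    return f"{item}:enriched"


async def _source(items: list[str]) -> AsyncIterator[str]:
    for item in items:
        yield item


async def run(items: list[str]) -> list[str]:
    stream = lookup_external(Deduplicate()(_source(items)))
    return [x async for x in stream]
```

The subclass suits operations that need state across the whole stream, while the decorator keeps simple one-item-in, one-item-out logic down to a single function.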

Currently available only in Python, GenAI Processors represents Google’s effort to democratize the development of complex AI applications and encourage community contributions to expand the library’s capabilities.

Anthropic Integrates Claude with Canva for AI-Powered Design Creation

Anthropic has launched a new integration allowing Claude users to create, edit, and manage Canva designs through natural language prompts, marking another expansion of the AI assistant’s third-party tool capabilities. The feature enables users to complete design tasks like creating presentations, resizing images, and filling templates directly from their Claude conversations without switching between applications.

The integration leverages Canva’s Model Context Protocol server, launched last month, which provides Claude with secure access to the user’s Canva content. Users can search through Canva Docs, Presentations, and brand templates using keywords, then have Claude summarize the content through the AI interface. The functionality requires both a paid Canva account, starting at $15 per month, and a Claude subscription, at $17 per month.

This connection utilizes the Model Context Protocol, an open-source standard often described as the “USB-C port of AI” that enables rapid integration between AI models and external applications. Major companies, including Microsoft, Figma, and Canva, have adopted MCP as they prepare for an AI-agent-driven technology landscape.
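Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages, and a tool invocation uses the `tools/call` method. The sketch below builds such a request. The helper `build_tool_call` and the tool name and arguments in the usage note are hypothetical and are not taken from Canva's server.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tool invocation as a JSON-RPC 2.0 request."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)
```

For example, `build_tool_call(1, "search_designs", {"query": "Q3 pitch deck"})` would produce the request an assistant might send when a user asks it to find a presentation, assuming the server exposed a tool with that name.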

Canva’s Ecosystem Head, Anwar Haneef, emphasized the streamlined workflow benefits, noting that users can now “generate, summarize, review, and publish Canva designs, all within a Claude chat,” through a simple settings toggle. This represents what the company sees as a significant shift toward AI-first workflows that merge creativity and productivity.

Claude becomes the first AI assistant to support Canva design workflows through MCP, though the chatbot already offers design capabilities through a similar partnership with Figma announced last month. Anthropic is also launching a new Claude integrations directory on web and desktop platforms today, providing users with a comprehensive view of available tools and connected applications.

The integration joins Claude’s growing ecosystem of third-party connections, which includes tools like Notion, Stripe, and Prisma, positioning the AI assistant as a central hub for managing various digital workflows through conversational interfaces.
