This week upended expectations as tech titans and bold startups pushed AI into unexpected territory. Apple tapped Anthropic’s Claude for coding assistance in Xcode, while Google unveiled an enhanced Gemini model just before its developer conference. Meanwhile, Anthropic connected Claude to third-party apps through new cross-platform Integrations, NotebookLM moved beyond the desktop with mobile apps, and Particle showed how AI can support news publishers rather than replace them. Adding to this wave of innovation, Netflix launched a conversational AI search tool powered by OpenAI’s technology, changing how viewers discover content through natural language requests.

Listen to AI Knowledge Stream

AI Knowledge Stream is an AI-generated podcast based on our editorial team’s “AI This Week” posts. We use cutting-edge AI tools, including Google NotebookLM, Descript, and ElevenLabs, to transform written content into an engaging audio experience. Although the podcast is AI-powered, our team oversees the process to ensure quality and accuracy. We value your feedback! Let us know how we can improve to better meet your needs.

Apple and Anthropic Join Forces on Revolutionary AI Coding Assistant for Xcode

Apple is partnering with Anthropic to create an advanced AI-powered coding assistant integrated directly into Xcode, Apple’s primary development environment. This collaboration aims to enhance programmer productivity by leveraging Anthropic’s Claude Sonnet model to help write, edit, and test code on behalf of developers.

The tool is currently in an internal rollout phase at Apple, with the company testing its capabilities before making any decisions about a potential public release. This cautious approach aligns with Apple’s traditional strategy of thoroughly vetting new technologies before wider deployment.

The new version of Xcode includes a chat interface for entering requests, tools for testing user interfaces, and help with managing bugs.

This initiative comes as other tech giants increasingly integrate AI into their development processes. Microsoft CEO Satya Nadella has said that software now writes approximately “20 to 30 percent of the code” in some of the company’s repositories. Meanwhile, OpenAI is reportedly considering a $3 billion acquisition of Windsurf, an AI-powered coding tool that could strengthen ChatGPT’s programming capabilities.

Apple had previously announced “Swift Assist,” another AI-powered coding tool, at WWDC 2024, though it has not yet been released. This new collaboration with Anthropic may represent a more ambitious approach to AI-assisted development.

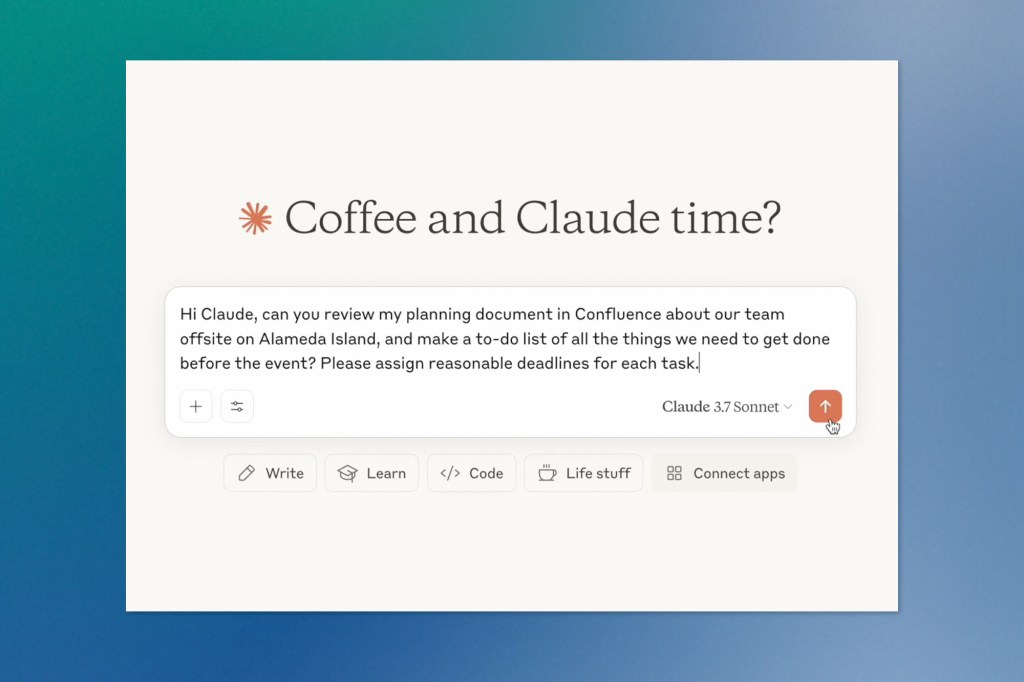

Anthropic Expands Claude’s Capabilities with New Integrations and Advanced Research Features

Anthropic has unveiled a significant expansion of Claude’s capabilities with the introduction of Integrations, a new system that connects the AI assistant to apps and tools across users’ digital ecosystems. Announced on May 1, 2025, the update builds on the Model Context Protocol (MCP), which Anthropic launched in November 2024.

Integrations remove MCP’s earlier limitation: it was previously available only in Claude Desktop, through local servers. Claude can now connect to remote MCP servers from both the web and desktop apps. Developers can build and host servers that extend Claude’s capabilities, and users can discover tools and connect them directly to Claude.
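For readers curious about what actually travels between Claude and an MCP server: MCP messages follow JSON-RPC 2.0, and a client discovers a server’s tools with a `tools/list` request and invokes one with `tools/call`. The Python sketch below only builds those two request messages with the standard library. It assumes a hypothetical tool named `search_issues` with made-up arguments, and it leaves out the transport (local stdio or remote HTTP) and the initialization handshake that a real client performs first.

```python
import json


def make_tools_list(request_id: int) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server which tools it offers."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})


def make_tools_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,          # lets the client match the server's response
        "method": "tools/call",
        "params": {
            "name": tool_name,     # must match a name returned by tools/list
            "arguments": arguments,
        },
    }
    return json.dumps(message)


if __name__ == "__main__":
    # Hypothetical tool and arguments, for illustration only.
    print(make_tools_list(1))
    print(make_tools_call(2, "search_issues", {"query": "login bug", "limit": 5}))
```

Each request carries its own `id`, so a client juggling several tool calls at once can pair every response with the request that produced it.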

At launch, users can choose from Integrations for 10 popular services: Atlassian’s Jira and Confluence, Zapier, Cloudflare, Intercom, Asana, Square, Sentry, PayPal, Linear, and Plaid. Integrations from companies such as Stripe and GitLab are expected to follow soon. Anthropic says developers can build their own integrations in as little as 30 minutes, using the documentation or platforms such as Cloudflare that provide built-in authentication and deployment.

Each integration substantially expands Claude’s functionality. For example, the Zapier integration connects thousands of apps through pre-built workflows, allowing Claude to access these and custom workflows through conversation. This enables capabilities like automatically pulling sales data from HubSpot and preparing meeting briefs based on calendar events.

Alongside Integrations, Anthropic has enhanced Claude’s Research feature with a new advanced mode. Claude can now investigate hundreds of internal and external sources and deliver comprehensive reports, which take five to 45 minutes to complete. The system breaks a complex request into smaller parts and investigates each one thoroughly before compiling a final report, work that would typically take hours of manual research.

Claude’s data access has also been expanded. Initially launched with support for web search and Google Workspace, Research can now incorporate information from any connected Integration. To maintain transparency, Claude provides clear citations that link directly to original sources, ensuring users can verify where each piece of information originated.

These new features—Integrations and advanced Research—are currently available in beta for users on Max, Team, and Enterprise plans, with Pro plan availability coming soon. Additionally, web search functionality is now globally available to all Claude.ai paid plans.

Netflix Launches AI-Powered Conversational Search Experience

Netflix has officially unveiled its new generative AI-powered search tool. The feature leverages OpenAI’s ChatGPT technology to create a more intuitive and conversational discovery experience for subscribers.

The new search functionality allows users to find content using natural language queries rather than traditional keyword searches. Subscribers can express preferences through conversational prompts like “Show me something with time travel that isn’t too intense” or make more nuanced requests such as “I need a movie that my teenager and grandparents would both enjoy with minimal awkward scenes.” This approach aims to make content discovery more intuitive and personalized.

Netflix is rolling out this feature gradually, beginning with an opt-in beta for iOS users this week. Some subscribers in Australia and New Zealand have already had early access to the tool during a limited test phase.

The streaming giant isn’t alone in exploring AI-powered search capabilities. Amazon has implemented a similar voice search feature for Fire TV devices, while Tubi previously experimented with a ChatGPT-powered search tool before eventually discontinuing it. Netflix’s implementation will face the challenge of maintaining user engagement where competitors have struggled.

Beyond search, Netflix also revealed plans to use generative AI to localize content presentation, specifically by updating title cards to appear in subscribers’ preferred languages. This is part of a broader strategy to increase personalization across the platform.

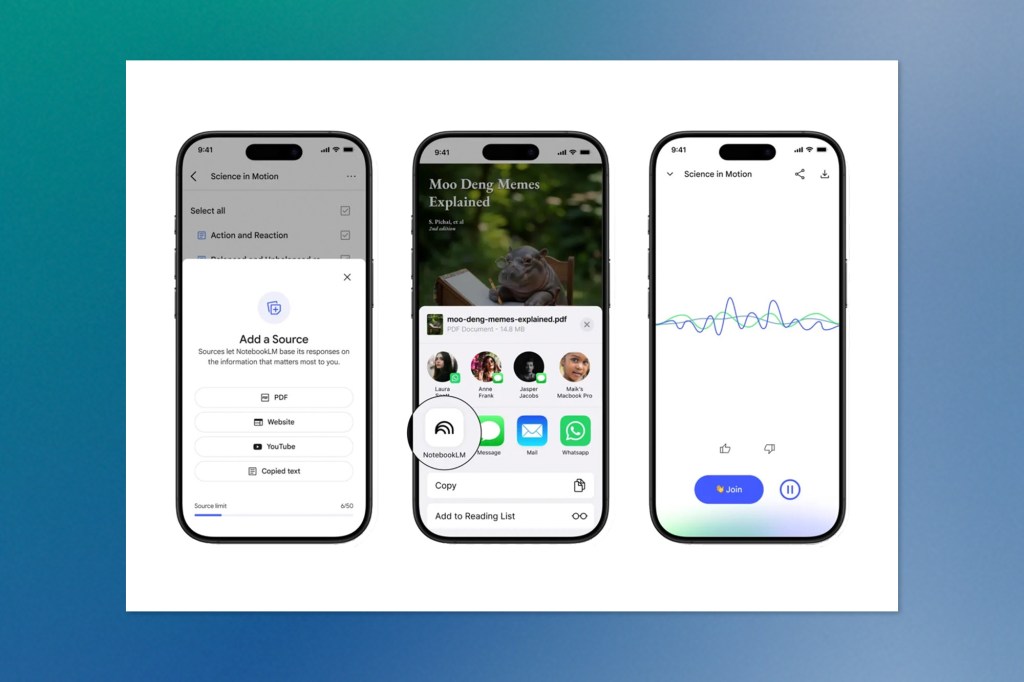

Google’s NotebookLM Expands to Mobile with New iOS and Android Apps

Google is bringing its powerful AI-based research assistant, NotebookLM, to mobile devices with dedicated apps launching on May 20, 2025. Available only on desktop since its introduction in 2023, the service will now reach smartphones and tablets through iOS and Android apps, which are currently available for preorder.

NotebookLM has established itself as a valuable tool for students, professionals, and researchers who need to process and understand complex information. The platform offers features like intelligent summaries and the ability to ask questions about uploaded documents, making it easier to extract insights from a wide range of materials. One of its standout capabilities is Audio Overview, which turns research materials into AI-generated podcasts for more convenient consumption of complex topics.

According to the app store listings, the mobile versions will maintain core functionality from the desktop experience. Users will be able to create new notebooks, access existing ones, and upload source materials directly from their devices. The mobile apps will also support the Audio Overview feature, allowing users to listen to their generated content while on the move. The tablet versions for iPads and Android tablets will offer an expanded interface that takes advantage of the larger screen real estate for improved multitasking.

The May 20 release coincides with the first day of Google I/O, suggesting that Google will share more details about NotebookLM’s mobile expansion during its annual developer conference. Users who want the app on launch day can preorder it on Apple’s App Store or pre-register on Google Play so that it downloads automatically on the release date.

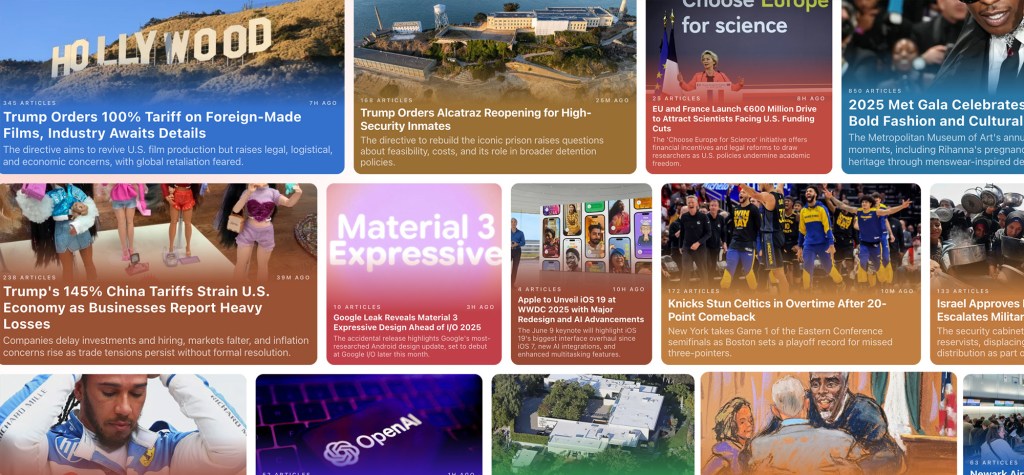

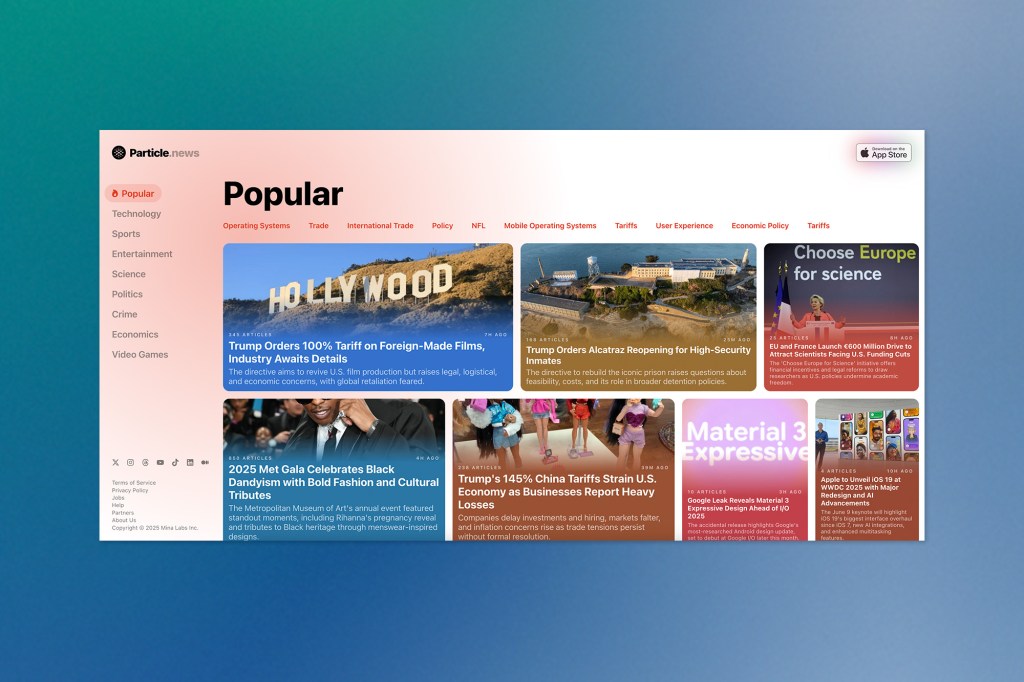

Particle Expands Its AI News Reader to Web Platform

Particle, a company focused on developing AI technology for news consumption that supports rather than undermines publishers, has expanded its offering with a new web platform.

Launched on May 6, 2025, Particle.news brings the startup’s innovative approach to news aggregation to browser-based users alongside its existing mobile application. The new website organizes news into various categories, including Technology, Sports, Entertainment, Politics, Science, Crime, Economics, and Video Games, while also featuring trending stories on its homepage.

Particle’s approach to AI-enhanced news reading goes beyond simple summarization. The platform extracts important quotes from articles and allows readers to browse questions and answers from other users about the stories, though direct AI interaction isn’t yet available on the web version. One of the platform’s distinctive features is its “entity pages,” which provide background information on people, products, or organizations mentioned in articles. These pages compile basic Wikipedia-sourced details alongside links to related stories.

The service prominently displays links to original news sources alongside its AI summaries, directing traffic back to publishers. This strategy has proven effective enough that Particle has established partnerships with news outlets, including Reuters, Fortune, and AFP, to highlight their content.

Google Rolls Out Enhanced Gemini 2.5 Pro Model Ahead of Annual I/O Event

Google has unveiled an updated version of its flagship AI model with the release of Gemini 2.5 Pro Preview (I/O edition). This enhanced model arrives just before Google’s annual I/O developer conference, suggesting that the company is setting the stage for broader AI announcements at the upcoming event.

The new model maintains its predecessor’s pricing structure while offering improved capabilities across several domains. It’s now available through multiple access points, including the Gemini API, Google’s Vertex AI platform, AI Studio, and both web and mobile versions of the Gemini chatbot application.

According to Google, this updated version delivers significant improvements, specifically in coding and web application development. The model demonstrates enhanced abilities in code transformation—the process of modifying existing code to achieve specific goals—and general code editing tasks. These improvements appear to be validated by the model’s performance on the WebDev Arena Leaderboard, a benchmark that evaluates AI systems’ capabilities in creating functional and visually appealing web applications.

Beyond coding enhancements, the model also showcases advanced video understanding capabilities. Google reports that Gemini 2.5 Pro Preview (I/O edition) achieved an impressive 84.8% score on the VideoMME benchmark, placing it among the leading systems in this increasingly important domain.

For developers already using previous versions of Gemini, Google highlights that the new release addresses specific feedback points, including reducing errors in function calling and improving function calling trigger rates. The company also notes that the model has “a real taste for aesthetic web development while maintaining its steerability,” suggesting improvements in generating visually pleasing code while remaining responsive to user guidance.

Google I/O 2025: What to Know

Google’s annual developer conference, Google I/O 2025, is scheduled for May 20-21, 2025. The event takes place at Shoreline Amphitheatre in Mountain View, California, with keynotes and sessions livestreamed to participants worldwide, followed by on-demand technical content.

The online format allows Google to reach a global audience regardless of location, with content structured to accommodate different time zones. In addition to the main event programming, Google is encouraging attendees to connect with their local developer communities for regional I/O-related activities.

With the recent release of the Gemini 2.5 Pro Preview (I/O edition) model just two weeks before the conference, AI developments are expected to feature prominently among the announcements at this year’s event.
