Tech giants recalibrated their strategies with unexpected releases and pivots these past days. Meta’s Llama 4 models emerged with MoE architecture, while Midjourney finally answered competitors with its personalized V7 image generator. OpenAI reversed course on o3, delaying GPT-5 to perfect its integration of reasoning capabilities. GitHub’s Copilot’s new premium request system signals the rising costs of advanced AI, while both Microsoft’s Copilot and Google’s Gemini Code Assist gained significant agentic capabilities. Behind these parallel moves lies an industry wrestling with technical limitations, regulatory pressures, and the search for sustainable business models as AI capabilities grow increasingly sophisticated.

Listen to AI Knowledge Stream

AI Knowledge Stream is an AI-generated podcast based on our editor team’s “AI This Week” posts. We leverage cutting-edge AI tools, including Google Notebook LM, Descript, and Elevenlabs, to transform written content into an engaging audio experience. While AI-powered, our team oversees the process to ensure quality and accuracy. We value your feedback! Let us know how we can improve to meet your needs better.

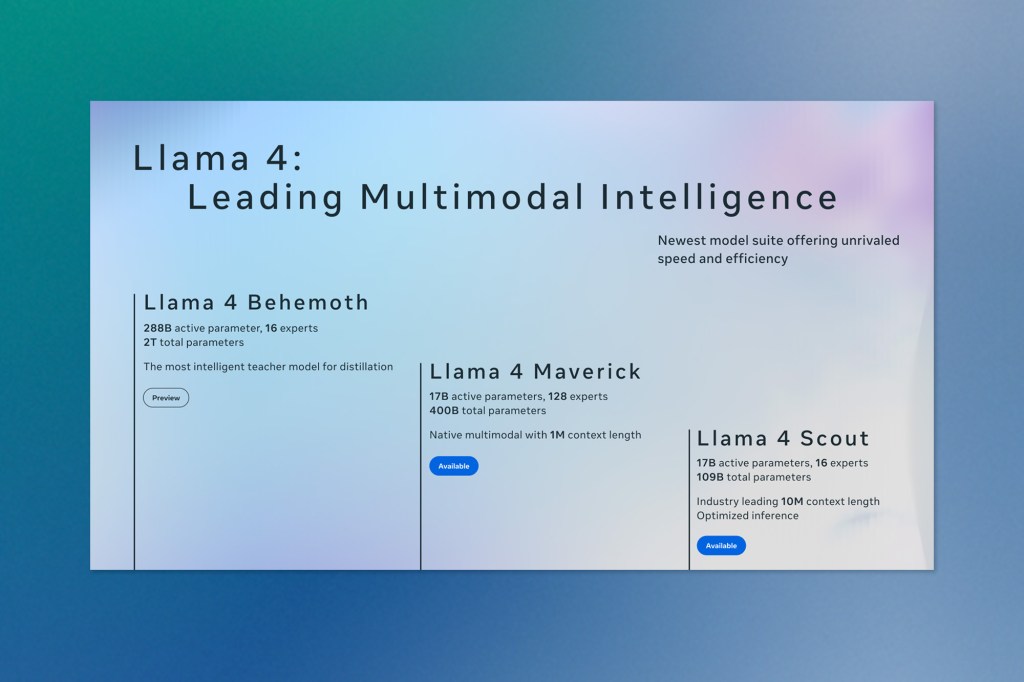

Meta Releases New Llama 4 Model Collection

Meta has just released its latest AI model collection, Llama 4, introducing three new models: Scout, Maverick, and Behemoth.

The Llama 4 Family

Scout represents Meta’s entry-level model in the new collection, featuring 17 billion active parameters across 16 experts with 109 billion total parameters. Its standout feature is an impressive 10 million token context window, allowing it to process extremely lengthy documents and images. This makes it particularly effective for document summarization and reasoning over large codebases. Hardware-wise, Scout is relatively accessible and capable of running on a single Nvidia H100 GPU.

Maverick sits in the middle of the lineup with 17 billion active parameters distributed across 128 “experts” for a total of 400 billion parameters. Meta positions this model as ideal for general assistant and chat applications, including creative writing. According to Meta’s internal testing, Maverick outperforms models like OpenAI’s GPT-4o and Google’s Gemini 2.0 on certain benchmarks related to coding, reasoning, multilingual capabilities, long-context processing, and image understanding. However, it doesn’t quite reach the capabilities of more advanced models like Google’s Gemini 2.5 Pro, Anthropic’s Claude 3.7 Sonnet, or OpenAI’s GPT-4.5. Maverick requires more substantial computing resources, needing an Nvidia H100 DGX system or equivalent.

Behemoth, still in training and unreleased, represents Meta’s most powerful offering with 288 billion active parameters across 16 experts, reaching nearly two trillion total parameters in total. Meta claims internal benchmarks show Behemoth outperforming GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro (though not Gemini 2.5 Pro) on evaluations measuring STEM capabilities, particularly math problem-solving.

Technical Architecture

The Llama 4 collection marks Meta’s first implementation of a Mixture of Experts (MoE) architecture, offering greater computational efficiency during training and inference. This approach breaks down data processing tasks into subtasks and delegates them to smaller, specialized “expert” models. This architecture explains why the models have both “active” and “total” parameter counts – not all parameters are engaged simultaneously during operation.

Availability and Access

Scout and Maverick are available on Llama.com and through Meta’s partners, including the AI development platform Hugging Face. Meta AI, the company’s assistant across WhatsApp, Messenger, and Instagram, has been updated to use Llama 4 in 40 countries, though multimodal features remain limited to English in the US market. Behemoth remains in training and is not yet available.

The licensing terms include notable restrictions: users and companies based in the EU are prohibited from using or distributing the models, likely due to the region’s AI and data privacy regulations. Additionally, companies with more than 700 million monthly active users must request special permission from Meta to use the models.

Moderation and Content Policies

Meta has tuned the Llama 4 models to refuse to answer “contentious” questions less frequently than previous versions. According to Meta, these models will respond to “debated” political and social topics that earlier Llama models would have declined to address. The company claims Llama 4 is “dramatically more balanced” in determining which prompts to engage with, aiming to provide “helpful, factual responses without judgment” across different viewpoints.

This moderation approach comes amid ongoing discussions about AI chatbot biases, with some of President Trump’s allies, including Elon Musk and David Sacks, alleging that popular AI systems censor conservative perspectives.

Midjourney Launches V7 After Nearly Year-Long Wait

Midjourney has finally released V7, its first new AI image generation model in almost a year. The launch comes at an interesting time, just a few weeks after OpenAI introduced a viral new image generator in ChatGPT that gained significant attention for its Studio Ghibli-style creations.

V7’s New Architecture and Capabilities

According to Midjourney CEO David Holz, V7 represents a “totally different architecture” from previous versions. The new model demonstrates improved text prompt understanding, enhanced image prompt processing, and noticeably higher image quality with more beautiful textures. Perhaps most importantly, V7 shows significant improvements in generating coherent bodies, hands, and objects—areas that have historically been challenging for AI image generators.

Personalization and User Experience

V7 is Midjourney’s first model to enable personalization by default, requiring users to rate approximately 200 images to build a personalization profile before using the model. This profile helps tune the system to individual visual preferences, creating a more customized generation experience.

The model comes in two variants—Turbo (more expensive but faster) and Relax (standard pricing)—and introduces a new “Draft Mode” feature that renders images 10 times faster at half the cost of standard generation. While these draft images are lower quality, they can be enhanced and re-rendered with a single click, streamlining the creation workflow.

Current Limitations

Not all standard Midjourney features are available for V7 yet. According to Holz, capabilities like image upscaling and retexturing will be added in the coming months. The CEO also noted that V7 may require different prompting approaches compared to previous versions, encouraging users to experiment with various styles to achieve optimal results.

OpenAI Revises Release Strategy: o3 Back on Track, GPT-5 Delayed

OpenAI has made a significant reversal in its product roadmap. After seemingly cancelling the consumer release of its o3 reasoning model in February, the company now plans to launch both o3 and a next-generation successor, the o4-mini, within “a couple of weeks,” according to CEO Sam Altman.

The Reasoning Behind the Change

Altman explained on X that this shift in strategy relates directly to OpenAI’s upcoming GPT-5 model. The company has discovered that while GPT-5 will be “much better than originally thought,” they’re finding it “harder than expected to integrate everything smoothly” and need to ensure sufficient capacity for what they anticipate will be “unprecedented demand.” As a result, GPT-5’s release has been pushed back by “a few months” from its original timeline.

GPT-5’s Tiered Access Structure

Based on OpenAI’s published information, GPT-5 will feature a tiered access structure:

– Standard users will have unlimited chat access to GPT-5 at a “standard intelligence setting” (subject to “abuse thresholds”)

– ChatGPT Plus subscribers will access GPT-5 at a “higher level of intelligence”

– ChatGPT Pro customers will have access to GPT-5 at an “even higher level of intelligence”

Altman has previously noted that GPT-5 “will incorporate voice, Canvas, search, deep research, and more,” with a primary goal of unifying OpenAI’s various models into systems that can leverage all their tools, determine when extended reasoning is necessary, and prove useful across diverse tasks.

Google Enhances Gemini Code Assist with New Agentic Capabilities

Google has upgraded its Gemini Code Assist with “agentic” capabilities, announced during this week’s Cloud Next conference. The AI coding assistant can now deploy specialized agents that tackle complex programming tasks through multiple steps.

Advanced AI Agents for Complex Programming Tasks

The most significant advancement is Gemini Code Assist’s ability to create complete applications from product specifications written in Google Docs and transform code from one programming language to another. These agents, manageable through a new Kanban board interface, generate work plans and provide detailed progress updates as they complete tasks.

Beyond application creation and code transformation, the agents can implement new features, conduct code reviews, generate unit tests, and produce documentation. Google has also expanded Code Assist’s availability to Android Studio, enhancing its accessibility for mobile developers.

For developers using Code Assist’s new agentic features, manual code review remains essential to ensure security and functionality, regardless of how sophisticated the AI assistance has become.

GitHub Copilot Introduces Premium Request System and New Pricing Tier

GitHub has announced significant changes to its AI coding assistant, GitHub Copilot, implementing a new “premium requests” system that will effectively increase costs for users who need access to more advanced AI models.

New Rate Limits and Premium Requests

While GitHub Copilot subscribers will continue to have unlimited access to the base model (OpenAI’s GPT-4o), the company is now imposing rate limits when users want to use more advanced AI models for specialized tasks like “agentic” coding and multi-file edits. These advanced models, including Anthropic’s Claude 3.7 Sonnet, will now operate under a “premium requests” quota system.

Tiered Premium Request Allocation

GitHub has outlined a tiered structure for premium request allocations:

– Copilot Pro ($20/month) subscribers: 300 monthly premium requests beginning May 5

– Copilot Business users: 300 monthly premium requests starting between May 12-19

– Copilot Enterprise users: 1,000 monthly premium requests starting between May 12-19

Additional Options and Upgrade Path

For users who require more premium requests, GitHub is offering two options:

- Purchase additional premium requests at $0.04 per request

- Upgrade to the new Copilot Pro+ plan at $39 per month, which includes 1,500 premium requests and “access to the best models,” including OpenAI’s GPT-4.5

Despite these increased costs, GitHub Copilot remains highly profitable for Microsoft. CEO Satya Nadella noted last August that Copilot accounted for over 40% of GitHub’s revenue growth in 2024 and has already grown into a more significant business than all of GitHub when Microsoft acquired the platform approximately seven years ago.

Microsoft Copilot Gets Major Upgrade with Web Actions, Memory, and Visual Understanding

Microsoft has announced significant enhancements to its AI-powered Copilot chatbot, coinciding with the company’s 50th birthday. These new capabilities represent a substantial expansion of Copilot’s functionality as Microsoft prepares to integrate more of its own in-house technology into the service.

Web Browsing and Task Automation

Copilot can now take action on “most websites,” enabling it to perform tasks like booking tickets and making restaurant reservations. Microsoft has partnered with several major platforms for initial compatibility, including 1-800-Flowers.com, Booking.com, Expedia, Kayak, OpenTable, Priceline, Tripadvisor, Skyscanner, Viator, and Vrbo. This functionality mirrors the “agentic” capabilities in tools like OpenAI’s Operator, allowing users to delegate online tasks with simple prompts.

The upgraded Copilot can also track online deals, notify users of price drops and sales on specific items, and provide direct purchase links. However, Microsoft has provided limited details on how these capabilities work, including potential limitations or scenarios requiring human intervention. There may also be questions about whether websites can block Copilot’s automated actions, similar to how some sites restrict OpenAI’s Operator.

Visual Understanding and Device Integration

Copilot has gained significant visual capabilities, allowing it to analyze real-time video from phone cameras or photo galleries to answer questions about what it “sees.” The updated Copilot app can view desktop screens on Windows to help search, change settings, organize files, and perform other system operations. This feature will initially roll out to Windows Insider program members.

Content Creation and Research Tools

The update introduces several new tools for content creation and research:

– A “Podcasts” feature that generates conversational dialogue between synthetic hosts about websites, studies, or other sources

– “Pages” functionality (similar to ChatGPT Canvas and Claude Artifacts) for organizing notes and research into documents

– “Deep Research” capabilities for finding, analyzing, and combining information from various sources to address complex queries

Enhanced Memory and Personalization

Copilot now has improved memory capabilities, allowing it to remember user preferences such as favourite foods and films. Microsoft says this will enable the bot to offer more tailored solutions, proactive suggestions, and reminders. For privacy-conscious users, Microsoft provides options to delete individual “memories” or opt-out entirely through the user dashboard.

Keep ahead of the curve – join our community today!

Follow us for the latest discoveries, innovations, and discussions that shape the world of artificial intelligence.