As we approach the end of the year, AI isn’t showing any signs of slowing down, and this week’s updates prove it. Google’s NotebookLM is levelling up with enterprise-focused features, DeepMind is raising the bar in video generation with Veo 2, and xAI is making its Grok chatbot faster and smarter. Not to be outdone, Meta’s giving its Ray-Ban smart glasses some impressive AI-powered upgrades. Let’s break down the highlights and what they mean for the future of AI.

Google Expands NotebookLM with Enterprise Features and More

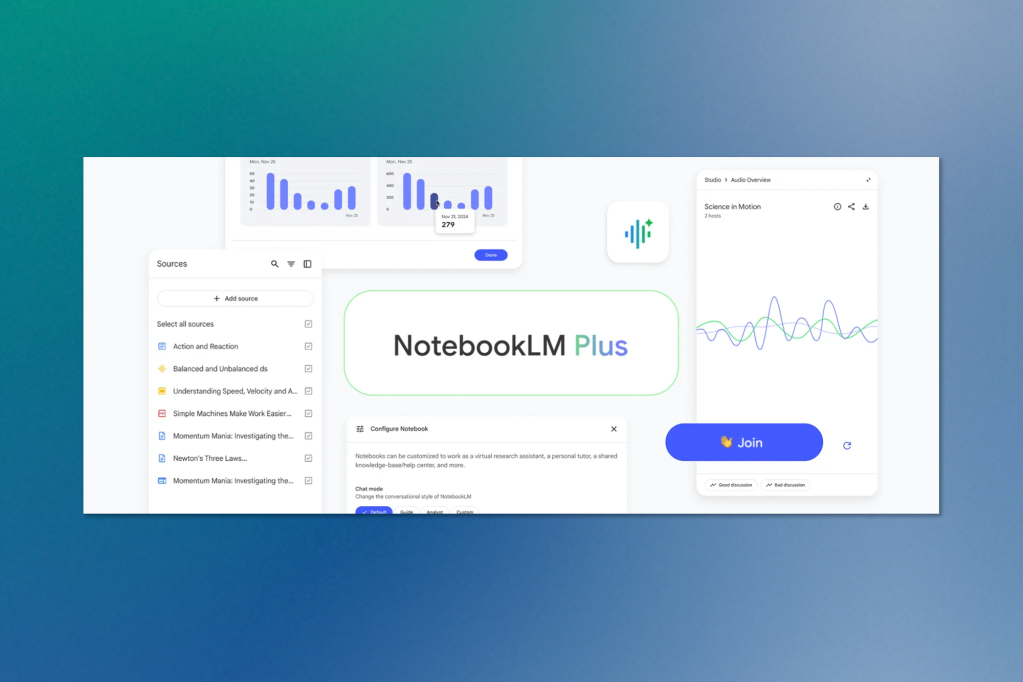

Google has unveiled NotebookLM Plus, a new enterprise-focused version of its popular AI note-taking and research app, NotebookLM. Designed for businesses, schools, and organizations, NotebookLM Plus introduces advanced tools for collaboration, enhanced security, and seamless integration with enterprise data systems.

Key Features of NotebookLM Plus

NotebookLM Plus builds on the consumer version with added benefits tailored for professional environments:

– Enhanced Collaboration: Shared team notebooks with usage analytics and customizable AI-generated responses.

– Greater Capacity: Five times the limit for notebooks, audio summaries, and data sources per notebook.

– Agentspace Integration: Part of Google Cloud’s Agentspace, where users can connect NotebookLM with other AI-powered tools for tasks like document analysis, data integration, and email processing.

Users can also access NotebookLM Plus independently via Google Workspace’s Gemini plan or purchase it separately through Google Cloud. For individual users, it will be available next year as part of the Google One AI Premium subscription.

New Features and Experiments

NotebookLM isn’t stopping with enterprise features. Google has revamped the app, organizing tools into three streamlined panels:

– Sources Panel: Manage imported data.

– Chat Panel: Discuss and query data through a conversational interface.

– Studio Panel: Easily create study guides, briefing documents, and audio summaries.

One standout experimental feature, Interactive Mode, allows users to “join” AI-generated podcast-like conversations, asking questions and receiving personalized answers mid-discussion. While this feature is still in beta and may occasionally falter, it offers an exciting step forward for interactive AI capabilities.

Google Introduces Project Mariner and a New Wave of AI Agents

Google’s DeepMind division has revealed Project Mariner, its innovative AI agent that navigates the web on your behalf. Powered by Google’s Gemini AI, this prototype acts as a virtual assistant, performing tasks like shopping, finding flights, and exploring recipes by directly interacting with websites—just as a human would.

A UX Paradigm Shift

Project Mariner marks a shift in how users interact with the web. Instead of directly browsing, users can rely on AI to handle tasks such as creating shopping carts or searching for household items. While limited to a single active Chrome tab and unable to perform sensitive actions like checking out or accepting cookies, Mariner allows users to oversee every step of the process, reinforcing trust and transparency.

DeepMind CTO Koray Kavukcuoglu explains, “Because Gemini is now taking actions on a user’s behalf, it’s important to take this step-by-step. It’s complementary—you and your agent can now do everything you do on a website.”

Beyond Project Mariner: Google’s New AI Agents

Google didn’t stop with Mariner. The company also introduced specialized AI agents for different tasks:

– Deep Research: Designed to tackle complex topics, this AI creates multistep research plans and generates detailed reports. Available in Gemini Advanced, it’s a potential competitor to OpenAI’s o1.

– Jules: Tailored for developers, Jules integrates with GitHub workflows, offering coding assistance and direct interaction with repositories. Currently in beta, it’s set for wider availability in 2025.

– Game Navigator: While still in development, this agent aims to assist players in navigating gaming worlds. Google is collaborating with developers like Supercell to test Gemini’s capabilities in virtual environments. No release date has been announced, but the project highlights the potential for AI to enhance interactions in virtual settings and beyond.

What Lies Ahead?

Project Mariner and its sibling agents represent a major evolution in AI-driven interactions with the web. While businesses may appreciate that users still visually engage with their sites, the decreased need for direct user involvement poses challenges for engagement and monetization.

Google DeepMind’s Veo 2 Brings Cinematic AI to the Forefront

Google DeepMind is back at it—seriously, do they ever sleep? This week, they’ve also unveiled Veo 2, the next-generation video-generating AI model, taking direct aim at OpenAI’s Sora. Veo 2 promises longer clips, sharper visuals, and more realistic motion.

Key Features of Veo 2

Veo 2 generates videos from text prompts or combinations of text and images, excelling at diverse styles like Pixar-esque animation and photorealistic imagery. Building on the original Veo, the upgraded model delivers:

– 4K Resolution: A sharp leap from previous limits, offering up to 4096 x 2160 pixels.

– Extended Duration: Over two minutes of video, surpassing Sora’s 20-second limit.

– Enhanced Realism: Improved motion, fluid dynamics, and lighting effects, including cinematic shadows and nuanced human expressions.

– Advanced Camera Controls: Dynamic camera positioning and movement for composing cinematic scenes.

Currently, Veo 2 is available exclusively via Google’s experimental VideoFX tool, where videos are capped at 720p and eight seconds. Access requires a waitlist, but DeepMind plans to roll out Veo 2 on the Vertex AI platform as the model scales.
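To put the gap between the headline specs and the current VideoFX preview in perspective, here is a rough back-of-the-envelope comparison. It is only a sketch: it assumes "720p" means 1280 x 720 pixels and treats "over two minutes" as a lower bound of 120 seconds, neither of which is spelled out in the announcement.

```python
# Headline Veo 2 spec versus the current VideoFX cap.
# Assumption: "720p" is taken to mean 1280 x 720 pixels.
# Assumption: "over two minutes" is treated as a lower bound of 120 seconds.

HEADLINE_RESOLUTION = (4096, 2160)   # quoted 4K figure
PREVIEW_RESOLUTION = (1280, 720)     # assumed meaning of 720p
HEADLINE_SECONDS = 120               # lower bound for "over two minutes"
PREVIEW_SECONDS = 8                  # quoted VideoFX cap


def pixel_count(resolution):
    """Return the number of pixels in a single frame."""
    width, height = resolution
    return width * height


pixel_ratio = pixel_count(HEADLINE_RESOLUTION) / pixel_count(PREVIEW_RESOLUTION)
duration_ratio = HEADLINE_SECONDS / PREVIEW_SECONDS

print(f"Pixels per frame: {pixel_ratio:.1f}x more at the headline spec")
print(f"Clip length: at least {duration_ratio:.0f}x longer at the headline spec")
```

Under those assumptions, the headline spec carries roughly ten times the pixels per frame and at least fifteen times the clip length of what early testers can generate today.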

Eli Collins, VP of product at DeepMind, admits, “There’s room to improve in generating intricate details, fast and complex motions, and pushing the boundaries of realism.”

Collaboration and Ethics

DeepMind has worked closely with creatives, including Donald Glover and The Weeknd, to refine Veo 2, integrating artistic feedback into the model. However, questions remain about training ethics, as the model draws on data sources like YouTube without offering creators an opt-out mechanism.

DeepMind uses SynthID, a watermarking technology embedded in video frames, to mitigate misuse. While helpful, SynthID isn’t foolproof against tampering.

xAI Upgrades Grok Chatbot and Introduces the Grok Button

xAI, Elon Musk’s AI venture, continues to roll out updates despite ongoing legal battles with OpenAI. On Friday night, the company announced an upgraded version of its Grok 2 chatbot and introduced new features aimed at enhancing the user experience on X, formerly known as Twitter.

What’s New with Grok?

The enhanced Grok chatbot boasts:

– Three-Times Faster Performance: xAI claims significant speed improvements, allowing quicker responses.

– Better Accuracy: Upgrades in instruction-following capabilities.

– Improved Multilingual Support: Broader language compatibility to cater to diverse user bases.

Free users are limited to 10 questions every two hours, while X Premium and Premium+ subscribers benefit from higher usage limits.

The Grok Button

A new Grok button has been added to X, designed to offer users quick insights by providing relevant context, explaining real-time events, and facilitating deeper engagement with trending topics.

Updates for Enterprises

xAI is also making strides with its enterprise API:

– New Grok Models: Enhanced efficiency and multilingual features.

– Price Reductions: Input token costs drop from $5 to $2 per million tokens, and output token costs are reduced from $15 to $10 per million tokens.
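To see what the price cut means in practice, here is a small sketch that compares the old and new rates for a sample workload. The per-million-token prices are the ones quoted above; the workload size (3 million input tokens, 1 million output tokens) and the function name are made up purely for illustration.

```python
# Prices in USD per million tokens, as quoted in the announcement.
OLD_PRICES = {"input": 5.00, "output": 15.00}
NEW_PRICES = {"input": 2.00, "output": 10.00}


def workload_cost(prices, input_tokens, output_tokens):
    """Return the USD cost of a workload at the given per-million-token prices."""
    total = input_tokens * prices["input"] + output_tokens * prices["output"]
    return total / 1_000_000


# Hypothetical workload, chosen only to illustrate the arithmetic.
input_tokens = 3_000_000
output_tokens = 1_000_000

old_cost = workload_cost(OLD_PRICES, input_tokens, output_tokens)
new_cost = workload_cost(NEW_PRICES, input_tokens, output_tokens)
savings_pct = (old_cost - new_cost) / old_cost * 100

print(f"Old cost: ${old_cost:.2f}")
print(f"New cost: ${new_cost:.2f}")
print(f"Savings: {savings_pct:.1f}%")
```

The actual savings depend on the mix: input prices fall by 60% while output prices fall by about 33%, so input-heavy workloads benefit the most.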

In the coming weeks, xAI plans to add Aurora, its image-generation model, to the API. Already available as part of Grok’s chatbot experience, Aurora is described as a “largely unfiltered” image AI and represents xAI’s push into the generative visual space.

Meta Brings AI, Live Translations, and Shazam to Smart Glasses

Meta has unveiled exciting updates for its Ray-Ban Meta Smart Glasses, introducing live AI, live translations, and Shazam integration. These features mark another step forward in integrating AI-powered functionality into everyday wearables.

What’s New?

1. Live AI:

– Allows users to interact naturally with Meta’s AI assistant as it observes their surroundings.

– Example: Ask for recipe suggestions based on items you’re looking at in a grocery store.

– Available exclusively to members of Meta’s Early Access Program.

– Runtime: Approximately 30 minutes per charge.

2. Live Translations:

– Provides real-time translation between English and Spanish, French, or Italian.

– Translations can be heard through the glasses or displayed as text on your phone.

– Requires pre-downloading language pairs and specifying languages for conversations.

– Also limited to Early Access Program members.

3. Shazam Integration:

– Identify songs by prompting Meta’s AI when you hear music.

– Available for all users in the US and Canada.

To try these new capabilities, users must update their smart glasses to the v11 software and the Meta View app to v196. For live AI and translations, joining the Early Access Program is required.
