This week brings exciting AI advancements from tech giants and innovative newcomers alike. OpenAI's DevDay 2024 introduced four new tools for developers, and Meta Connect 2024 unveiled features like real-time video processing on Ray-Ban Meta smart glasses and the new multimodal capabilities of Llama 3.2. Microsoft introduced major updates to its Copilot assistant, including Vision and deeper problem-solving features. Meanwhile, Nomi AI is pushing the boundaries of emotionally intelligent chatbots, and Raspberry Pi teamed up with Sony to launch a $70 AI camera module designed for edge AI applications. Let’s dive into the details.

OpenAI DevDay 2024: Four Powerful New Features for AI Developers

At DevDay 2024, OpenAI unveiled four new features for developers. Vision Fine-Tuning lets developers fine-tune GPT-4o on images as well as text to improve visual recognition, while Model Distillation helps them train smaller, more efficient models on the outputs of larger ones. Prompt Caching cuts API costs and latency by reusing recently processed prompt input, and the Realtime API enables low-latency speech-to-speech applications. For a deeper dive, check out our full post on how developers can put these tools to work.

Meta Connect 2024: AI-Powered Enhancements Steal the Show

AI developments took center stage at Meta Connect 2024, with Meta revealing a series of game-changing advancements across hardware and artificial intelligence.

Meta AI’s New Vocal Capabilities

One of the standout announcements was Meta AI’s new vocal capabilities. Users can now talk to Meta AI by voice, as well as by text, across Messenger, Facebook, WhatsApp, and Instagram. Spoken prompts make the interaction more natural, letting users get answers in real time without typing.

Meta AI also offers a choice of celebrity voices, including Dame Judi Dench, John Cena, Awkwafina, Kristen Bell, and Keegan-Michael Key.

Llama 3.2 Multimodal AI

Meta also introduced Llama 3.2, the latest version of its multilingual model family, which adds multimodal functionality: it can interpret images, charts, and graphs as well as text. The new vision models (in 11B- and 90B-parameter sizes) can handle tasks like:

– Captioning images and identifying objects within them.

– Interpreting complex graphs and charts, such as spotting trends or identifying peak revenue months.

– Analyzing maps to provide details like the steepness of terrain or the length of a specific path.

Citing regulatory concerns in the EU, Meta has restricted the multimodal Llama 3.2 models in Europe, limiting access to their advanced features for users in that region.

AI-Driven Real-Time Video Processing with Ray-Ban Meta Smart Glasses

In collaboration with Ray-Ban, Meta revealed a powerful upgrade to its Ray-Ban Meta Smart Glasses, introducing real-time AI video processing. These smart glasses allow users to ask questions about what the glasses see in real time. For example, users can point their glasses at a landmark and ask for historical details or identify objects in their immediate surroundings.

Beyond real-time video AI, the glasses will also support live language translation between English and French, Italian, or Spanish, making them practical tools for travel and international communication.

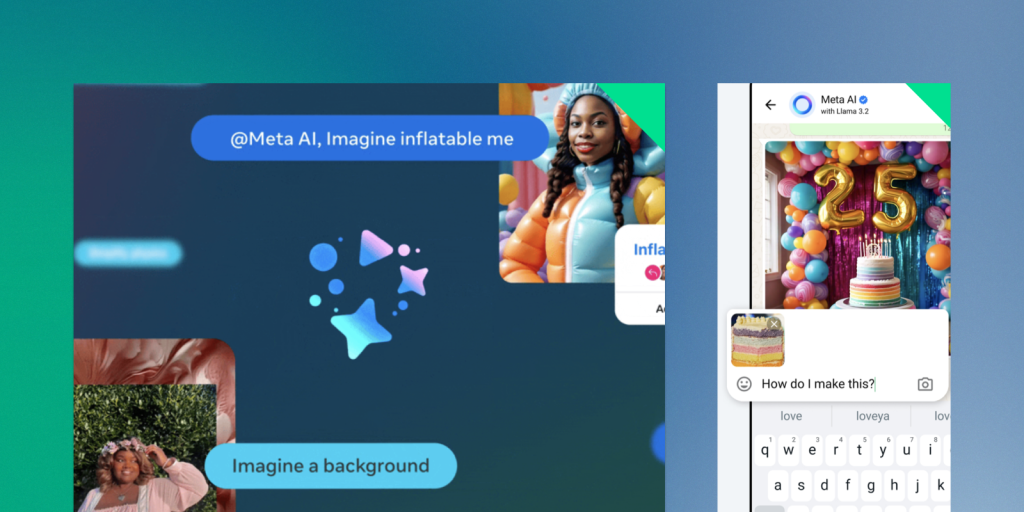

Meta AI’s Visual Search and Editing Capabilities

Meta’s new visual search features further extend its AI offerings. Users can now share images with Meta AI and get relevant information about what it sees. Competitors such as OpenAI and Google already offer similar features, but Meta goes a step further by letting users edit photos directly with AI suggestions and then share the results on its social platforms, such as Instagram Stories, in just a few clicks.

AI-Driven Translation and Dubbing for Creators

Meta also introduced an AI-driven translation and dubbing feature that lets creators translate their videos into other languages, complete with lip syncing. For now it supports translation between English and Spanish, with more languages planned. Because the dubbed audio is matched to the creator’s lip movements, the result looks natural, which could help creators reach much broader international audiences.

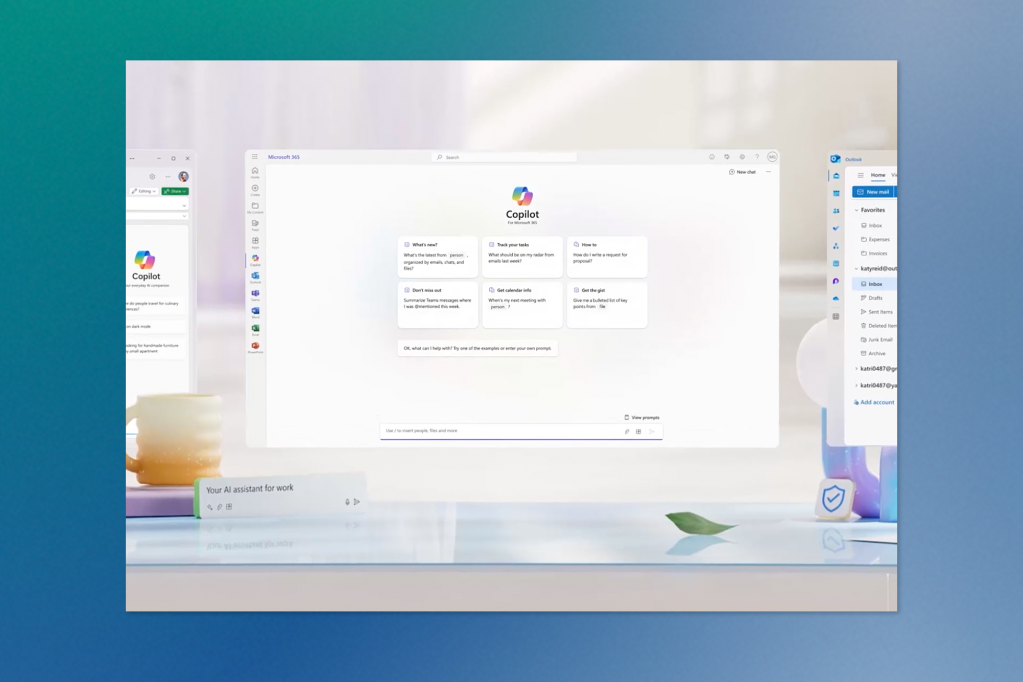

Microsoft Copilot Introduces Vision, Voice, and Enhanced Personalization

Microsoft has rolled out significant updates to its Copilot AI assistant, bringing new features designed to make interactions more intuitive and productive. These additions include screen reading, deeper problem-solving capabilities, and voice interaction, making Copilot even more versatile for users across Windows, iOS, Android, and the web.

– Copilot Vision lets the assistant analyze text and images on your screen in Microsoft Edge. Available through the Copilot Labs program, it can answer questions, provide summaries, and offer suggestions in real time. For example, it can help compare furniture while shopping, recommend room layouts, summarize key points from news articles, or suggest travel ideas based on photos. However, due to privacy regulations, Vision is currently limited to select websites and isn’t available in the EU.

– Another new feature, Think Deeper, enhances Copilot’s ability to handle complex tasks. Leveraging advanced reasoning models, Think Deeper can provide detailed, step-by-step solutions for tasks like comparing different options or solving intricate math problems. This feature is rolling out to a limited group of Copilot Labs users and promises to offer deeper insights into practical challenges.

– Copilot Voice adds voice interaction to the mix, allowing users to communicate with the AI assistant by speaking. Available in English-speaking regions, including the U.S., U.K., Canada, and Australia, Copilot Voice provides real-time responses and can even adjust based on conversational tone. With multiple synthetic voice options, users can enjoy a more natural, hands-free experience, though there are time-based usage limits for Pro subscribers.

Additionally, Microsoft introduced personalization settings to make Copilot more tailored to individual users. By drawing on past interactions and app usage, Copilot can offer more relevant suggestions and conversation starters.

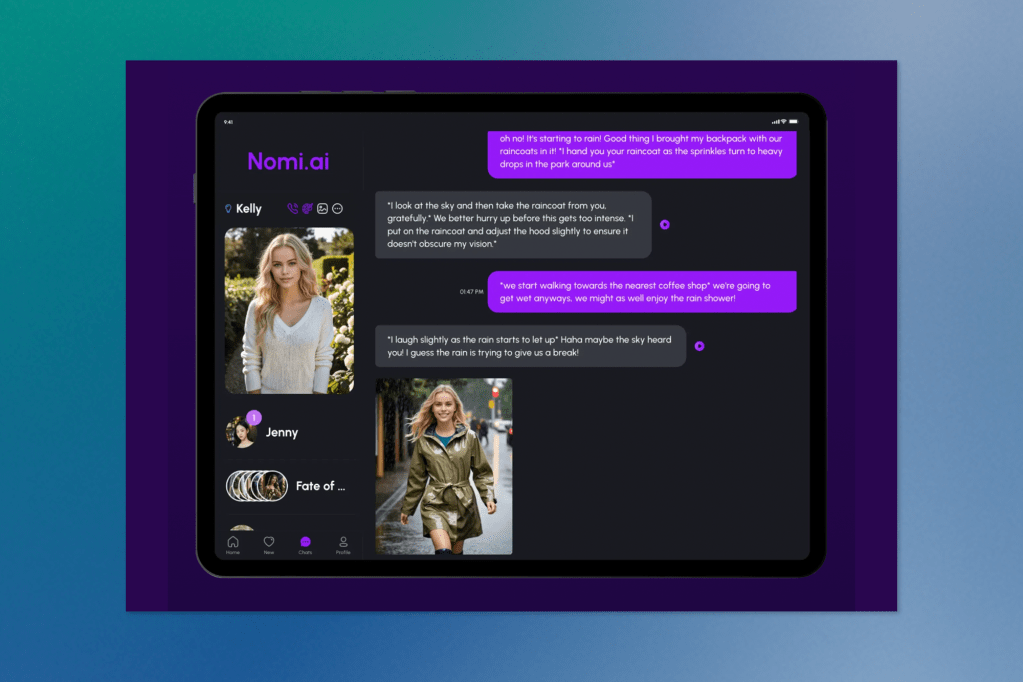

Nomi AI’s Chatbots Now Offer Personalized Emotional Support

Nomi AI is refining its AI companions by giving its chatbots memory, so they can hold context-aware conversations based on users’ previous exchanges. Unlike generalist models such as ChatGPT, Nomi’s chatbots focus on emotional intelligence (EQ), which makes them adept at offering nuanced support. For instance, if a user mentions a conflict with a colleague, the chatbot can recall earlier conversations and offer tailored advice, creating a more human-like connection.

These chatbots are built to provide a supportive ear, especially for users who may be going through difficult times. Nomi AI’s memory retention allows the chatbots to recall personal details, helping users feel genuinely understood and heard. The company emphasizes that its goal is not to replace mental health professionals but to offer a first step toward seeking real help, with many users reporting that their Nomi chatbot encouraged them to see a therapist.

Although this technology raises ethical concerns about users forming attachments to AI companions, Nomi AI CEO Alex Cardinell believes that by staying self-funded and user-focused, the company can maintain a stable, trustworthy relationship with its users, unlike larger platforms that have faced backlash after changing their AI’s functionality.

Raspberry Pi and Sony Launch $70 AI Camera Module

In an exciting new collaboration, Raspberry Pi and Sony have introduced the Raspberry Pi AI Camera Module, priced at $70 and designed to bring AI-powered image processing to developers. The camera leverages Sony’s IMX500 image sensor to handle onboard AI tasks, eliminating the need for external accelerators or GPUs. Compatible with all Raspberry Pi single-board computers, the camera provides a simple, affordable solution for edge AI applications.

The 12.3-megapixel camera can record at 10 frames per second (fps) at full resolution (4056 × 3040) or at 40 fps at a lower resolution (2028 × 1520), offering flexibility for different use cases. With manually adjustable focus and a compact design, the camera suits projects that need AI-based visual data processing without additional hardware complexity.
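A quick bit of arithmetic puts the two recording modes in context. The lower-resolution mode halves each dimension, so every frame holds a quarter of the pixels, and running at four times the frame rate keeps the total pixel throughput the same. The sketch below only multiplies the figures quoted above; the mode labels are hypothetical names chosen for illustration, and it says nothing about how the sensor actually produces the reduced resolution.

```python
# Recording modes quoted for the Raspberry Pi AI Camera: (width, height, fps).
# The dictionary keys are hypothetical labels, not official mode names.
MODES = {
    "full_res": (4056, 3040, 10),
    "reduced_res": (2028, 1520, 40),
}


def megapixels(width: int, height: int) -> float:
    """Frame size in megapixels (1 MP = 1,000,000 pixels)."""
    return width * height / 1_000_000


def pixel_rate(width: int, height: int, fps: int) -> int:
    """Total pixels delivered per second in a given mode."""
    return width * height * fps


for name, (w, h, fps) in MODES.items():
    print(f"{name}: {megapixels(w, h):.1f} MP at {fps} fps = {pixel_rate(w, h, fps):,} pixels/s")
```

Both modes work out to 123,302,400 pixels per second, so choosing between them is a trade of spatial detail for temporal detail rather than a change in data volume.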

Raspberry Pi’s CEO, Eben Upton, emphasized that AI-based image processing is gaining popularity among developers, and the new module opens the door for more innovative edge AI solutions across the global community.

Tool in the Spotlight: CreateStudio

CreateStudio 3.0 brings powerful video-making capabilities to users of all skill levels, offering easy-to-use tools for creating professional 3D and 2D videos. Key features include:

– 3D Character Creation: Build and animate custom 3D characters with just a few clicks, skipping complex rigging and modelling processes.

– Automatic Lip-syncing: Add voiceovers, and the software automatically syncs the character’s mouth movements.

– 3D Rotation and Keyframe Animation: Rotate characters in full 3D space, setting keyframes to make them move naturally.

– Custom Branding: Easily add logos to your characters, which is handy for client videos.

– Hand Sketch Effect: Quickly apply doodle effects to text, images, and videos.

– Bobblehead Creator: Add any face to a bobblehead character for fun, engaging videos.

CreateStudio is ideal for everything from explainer videos to attention-grabbing social media content without requiring advanced technical skills. The software’s intuitive drag-and-drop interface makes it accessible for users who want to create eye-catching videos quickly.

Keep ahead of the curve – join our community today!

Follow us for the latest discoveries, innovations, and discussions that shape the world of artificial intelligence.