AI This Week: Key Developments from AWS, Adobe, and OpenAI

April 25, 2024

Welcome to this week’s roundup of advancements in artificial intelligence. From cloud-based AI model management to high-definition video upscaling and smart wearable technology, Amazon, Adobe, Meta, and OpenAI are all shipping notable updates. In this post, we look at the latest developments that change how businesses and consumers interact with AI and that raise the bar for security, usability, and creative potential.

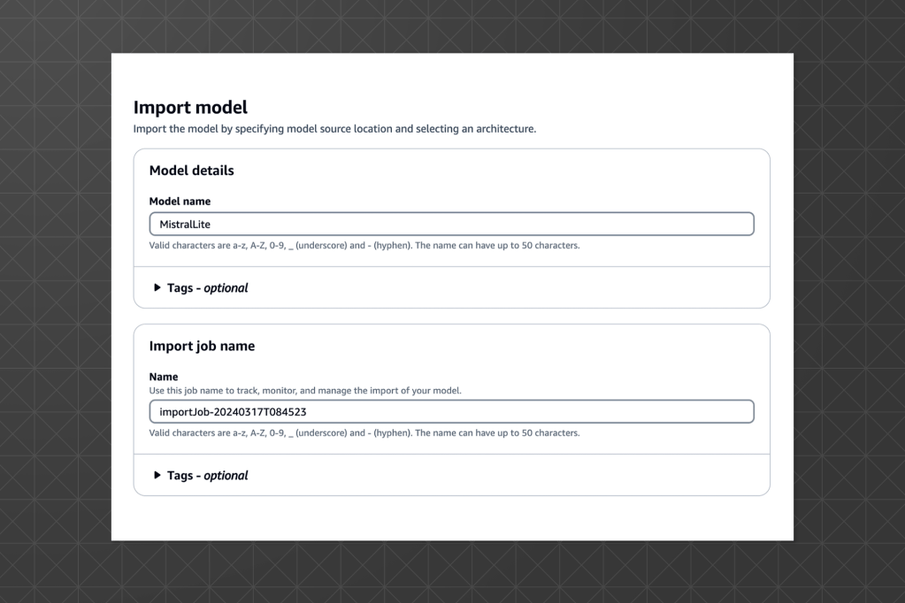

AWS Launches Custom Model Import for Generative AI

Amazon Web Services (AWS) has launched Custom Model Import, a new feature in Amazon Bedrock, its service for building generative AI applications. The feature lets companies host and fine-tune their proprietary generative AI models on AWS, making them easier to integrate and manage within AWS’s cloud infrastructure. AWS aims to simplify AI deployment for businesses and pairs the launch with Bedrock features such as Guardrails and Model Evaluation, which are designed to help keep model outputs safe and effective.

Key features

Seamless Integration: Businesses can now import their custom AI models into AWS as fully managed APIs.

Enhanced Tools: The suite offers tools for expanding model knowledge, fine-tuning, and adding safeguards against biases.

Innovations: AWS has also upgraded its Titan Image Generator, which is now generally available. This enables more creative image generation from text descriptions.

AWS hints at expanding into video generation, reflecting growing interest in multimedia AI applications.

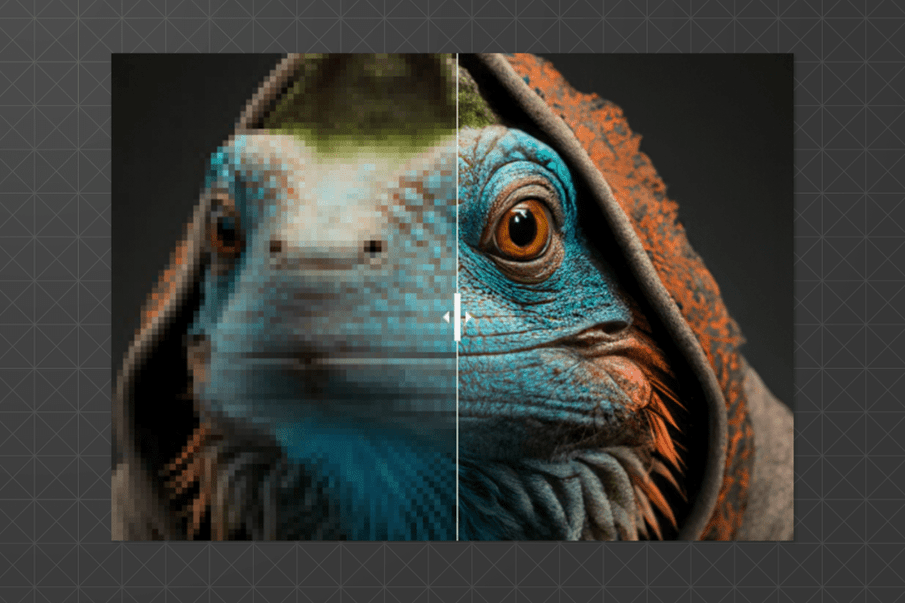

Adobe Unveils VideoGigaGAN for High-Quality Video Upscaling

Adobe Research has developed VideoGigaGAN, a new generative AI model that upscales blurry videos to much higher resolutions. The model can increase video resolution by up to eight times without introducing common artifacts such as flickering.

VideoGigaGAN applies generative adversarial networks (GANs) to video, achieving sharper and more detailed upscaling than traditional video super-resolution (VSR) methods. Unlike other GAN implementations that struggle with video, VideoGigaGAN minimizes flickering and other visual distortions, providing a more stable and clear output.

Adobe’s demonstrations showcase the AI’s ability to significantly enhance video realism, adding fine details such as skin textures and eyebrow hairs that maintain consistency through motion.

Currently, VideoGigaGAN remains a research project, with no confirmed plans for integration into Adobe’s consumer-facing products like Premiere Pro. However, its development underscores Adobe’s ongoing commitment to pioneering digital media enhancement technology.

Ray-Ban Meta Smart Glasses Embrace Multimodal AI

Ray-Ban Meta Smart Glasses, known for their sleek design and multimedia capabilities, have recently been upgraded with multimodal AI. The glasses can now process and respond to several kinds of input, such as photos, audio, and text, which makes them considerably more useful in everyday scenarios.

AI Capabilities Enhanced

Versatile Commands: Users can now interact with the glasses using commands like “Hey Meta, look and…” followed by specific requests such as identifying plants or translating signs.

Practical Use Cases: Whether identifying a car model on the street or recognizing different types of plants, the AI provides useful, albeit sometimes quirky, insights based on visual inputs.

Real-Time Processing: The AI communicates with the cloud to deliver responses directly into the user’s ears, minimizing the need to use a smartphone for similar queries.

Despite some limitations in accuracy and the need for user adaptation, these smart glasses offer a glimpse into the future of integrated wearable tech, where your everyday eyewear can assist with more than just vision.

OpenAI Enhances Enterprise Features to Counter Meta’s Llama 3

OpenAI has responded to the competitive rise of Meta’s Llama 3 by rolling out new enterprise-grade features for GPT-4 Turbo and its other models. These enhancements focus on security, administrative control, and cost efficiency, and are meant to reinforce OpenAI’s position in the generative AI market.

Key Upgrades

– Security has been bolstered with the introduction of Private Link, alongside native multi-factor authentication and enhanced encryption protocols.

– The Assistants API has been upgraded to handle up to 10,000 files per assistant, with real-time streaming for faster response times and better file management.

– Token usage controls and the new Projects feature help manage costs and improve administrative oversight.

Despite Meta’s advancements, OpenAI’s updates aim to give enterprise clients a secure, robust, and user-friendly environment that eases integration and encourages broader adoption across industries. They also signal the company’s intent to stay competitive as enterprise needs evolve.
